
Longman Mathematical Texts

Integral equations

Longman Mathematical Texts

Edited by Alan Jeffrey and Iain Adamson

Elementary mathematical analysis I. T. Adamson

Elasticity R. J. Atkin and N. Fox

Set theory and abstract algebra T. S. Blyth

The theory of ordinary differential equations J. C. Burkill

Random variables L. E. Clarke

Electricity C. A. Coulson and T. J. M. Boyd

Waves C. A. Coulson and A. Jeffrey

Optimization A. H. England and W. A. Green

Integral equations B. L. Moiseiwitsch

Functions of a complex variable E. G. Phillips

Continuum mechanics A. J. M. Spencer

Longman Mathematical Texts

Integral equations

B. L. Moiseiwitsch

Department of Applied Mathematics and Theoretical Physics,

The Queen's University of Belfast


Longman

London and New York

Longman Group Limited London

Associated companies, branches and representatives

throughout the world

Published in the United States of America

by Longman Inc., New York

© Longman Group Limited 1977

All rights reserved. No part of this publication may be

reproduced, stored in a retrieval system, or transmitted

in any form or by any means, electronic, mechanical,

photocopying, recording, or otherwise, without the

prior permission of the Copyright owner.

First published 1977

Library of Congress Cataloging in Publication Data

Moiseiwitsch, Benjamin Lawrence.

Integral equations.

(Longman mathematical texts)

1. Integral equations. 2. Hilbert space.

3. Linear operators. I. Title.

QA431.M57 515'.45 76-10282

ISBN 0-582-44288-5

Printed at The Pitman Press, Bath Ltd.

Preface

Many problems arising in mathematics, and in particular in applied

mathematics or mathematical physics, can be formulated in two

distinct but connected ways, namely as differential equations or as

integral equations. In the former approach the boundary conditions

have to be imposed externally, whereas in the case of integral

equations the boundary conditions are incorporated within the

formulation, and this confers a valuable advantage on the latter

method. Moreover the integral equation approach leads quite natur-

ally to the solution of the problem as an infinite series, known as the

Neumann expansion, in which the successive terms arise from the

application of an iterative procedure. The proof of the convergence

of this series under appropriate conditions presents an interesting

exercise in elementary analysis.

Integral equations have been of considerable significance in the

history of mathematics. Thus Laplace and Fourier transforms are

examples of integral equations of the first kind, while another

interesting example is Abel's integral equation which is associated

with Huygens' tautochrone problem and has a singular kernel.

The Hilbert transform also possesses a singular kernel. It arises

from a boundary value problem in a plane and enables the solution

of the Hilbert type of singular integral equation to be derived.

Integral transforms in general often provide a convenient method for

finding the solution to the class of integral equations having kernels

of the difference, or convolution, type.

This book is mainly concerned with linear integral equations

although a brief discussion of a simple type of non-linear equation is

given at the end of the first chapter. The theory of linear integral

equations of the second kind was developed originally by Volterra

and Fredholm. In its more general form the analysis is carried out for

Lebesgue square integrable functions since they form a Hilbert space.

In this volume I have attempted to avoid unnecessary complications

wherever possible by proving results for square integrable functions

without usually specifying the sense in which the integration


is to be carried out. Thus the book can be followed, for the most

part, without distinguishing between Riemann and Lebesgue inte-

gration and this, I hope, will make it suitable for a wider range of

mathematics students.

I have devoted two chapters to Hilbert space and linear operators

in Hilbert space, the integral occurring in linear integral equations

being an example of a linear operator. Hilbert space is of fundamen-

tal importance in mathematical physics since it provides the founda-

tion for an axiomatic formulation of quantum mechanics. For this

reason I have thought it worthwhile to discuss, even if rather briefly,

some more general situations in which we are concerned with

elements or vectors in an abstract Hilbert space acted upon by

completely continuous linear operators, an example of which is the

linear integral operator with square integrable kernel.

The final chapter is concerned with the theory of Hilbert and

Schmidt on Hermitian integral operators with square integrable

kernels. In this theory the solution of linear integral equations of the

second kind is expanded in terms of the characteristic functions and

values of the kernel. This is a common procedure in theoretical

physics although its mathematical justification is often disregarded.

The book concludes with a short discussion of variational principles

and methods.

The equations are numbered consecutively in each chapter.

When referring to an equation in another chapter, the number of

the chapter is inserted as a prefix but if the referenced equation is in

the same chapter the prefix is omitted.

The mathematical knowledge required to work through this

book, and to do the problems at the end of each chapter, is that

which an undergraduate student should possess as a result of

attending elementary courses in analysis, complex variable and

linear algebra. Thus the book should be suitable for students in their

final year of an honours mathematics or mathematical physics

course. The problems have been chosen so as to illuminate the

theory given in the main text. They are not too difficult and to gain

full advantage from the book the student is strongly advised to tackle

them.

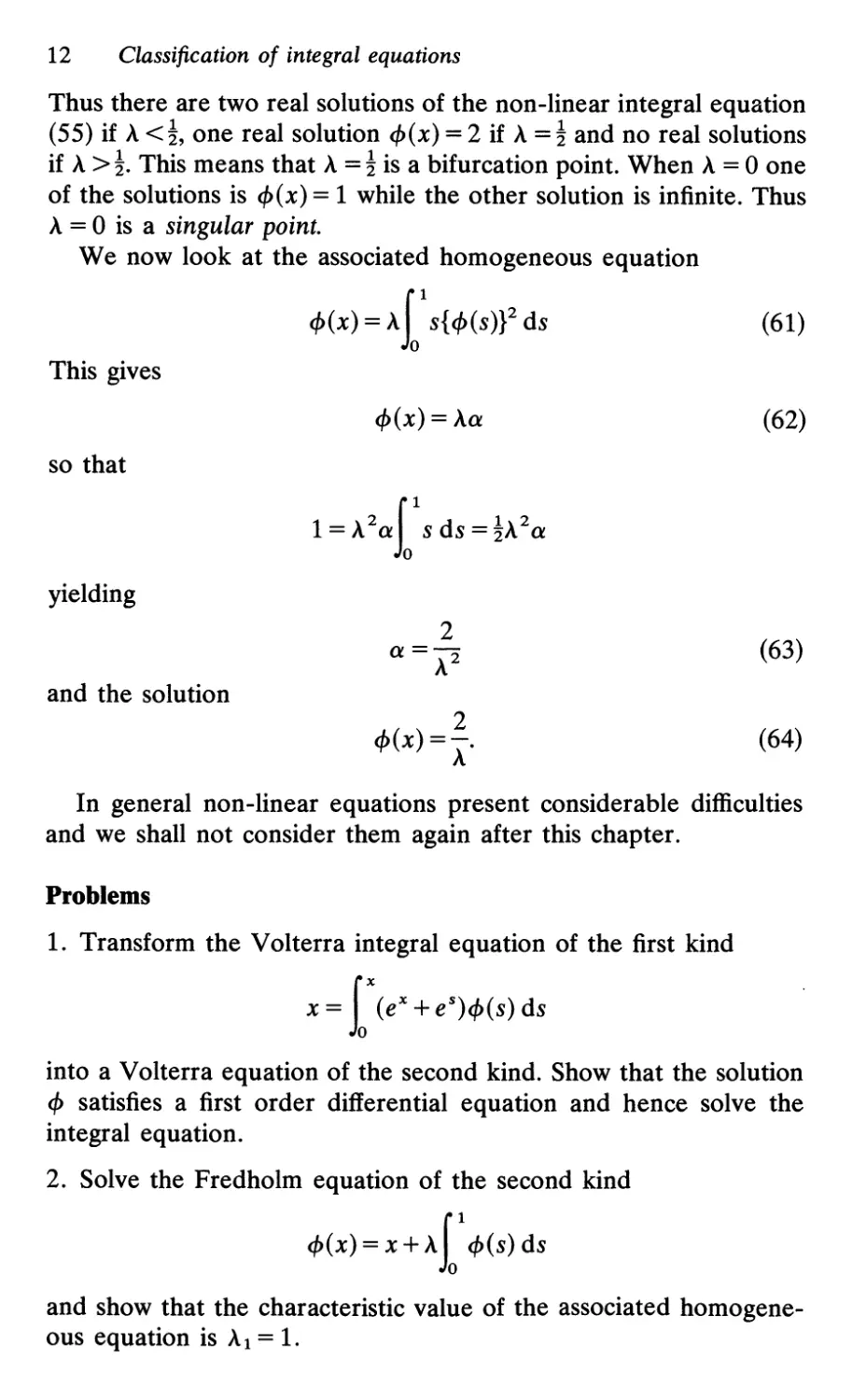

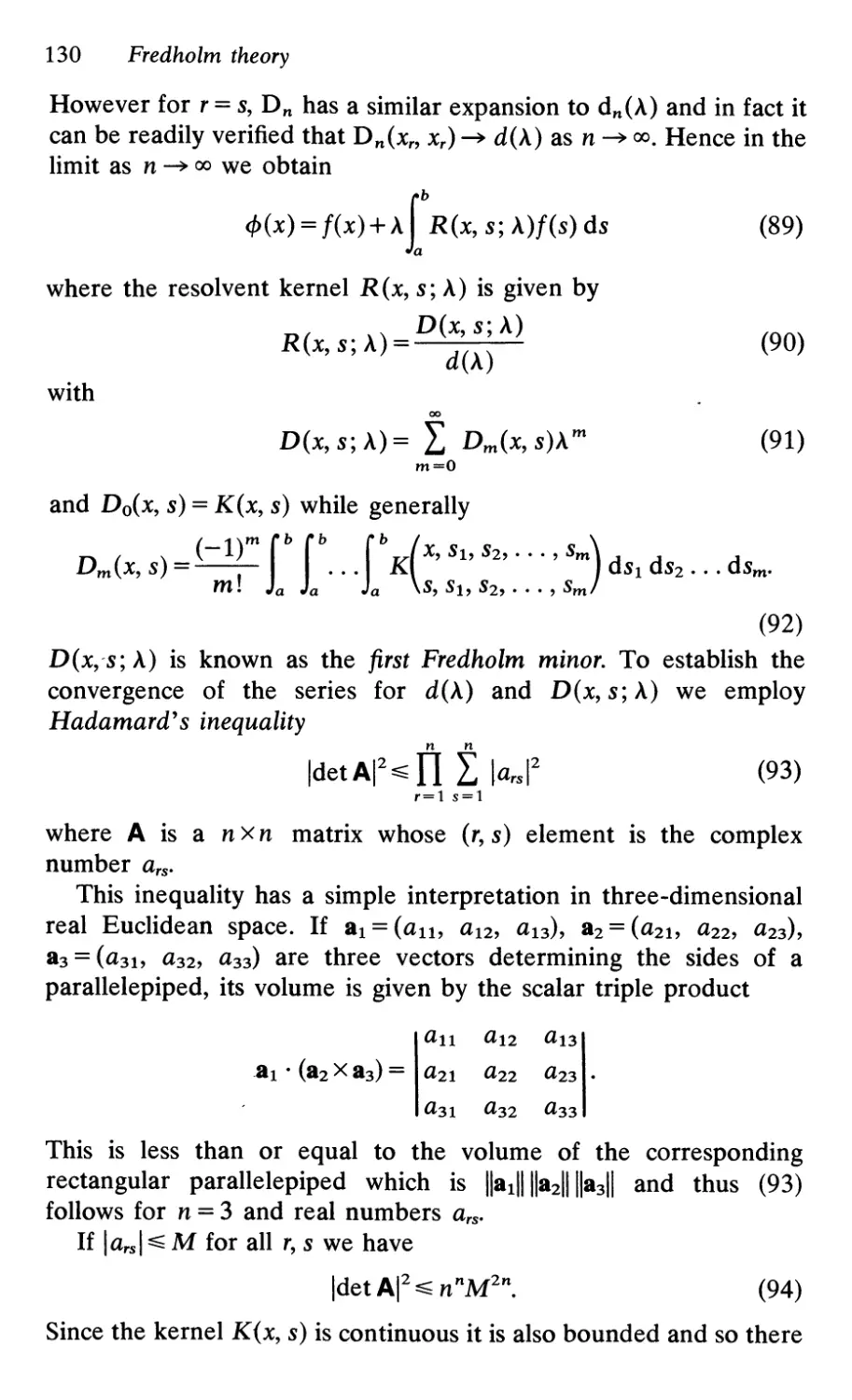

Contents

Preface

1: Classification of integral equations

1.1 Historical introduction

1.2 Linear integral equations

1.3 Special types of kernel

1.3.1 Symmetric kernels

1.3.2 Kernels producing convolution integrals

1.3.3 Separable kernels

1.4 Square integrable functions and kernels

1.5 Singular integral equations

1.6 Non-linear equations

Problems

v

1

3

4

4

5

6

8

9

11

12

2: Connection with differential equations

2.1 Linear differential equations 14

2.2 Green's function 18

2.3 Influence function 20

Problems 22

3: Integral equations of the convolution type

3.1 Integral transforms 24

3.2 Fredholm equation of the second kind 26

3.3 Volterra equation of the second kind 31

3.4 Fredholm equation of the first kind 34

3.4.1 Stieltjes integral equation 34

3.5 Volterra equation of the first kind 36

3.5.1 Abel's integral equation 37

3.6 Fox's integral equation 39

Problems 40

4: Method of successive approximatioDS

4.1 Neumann series

4.2 Iterates and the resolvent kernel

Problems

43

46

51

Vlll Contents

s: Integral equations with singular kernels

5.1 Generalization to higher dimensions

5.2 Green's functions in two and three dimensions

5.3 Dirichlet's problem

5.3.1 Poisson's formula for the unit disc

5.3.2 Poisson's formula for the half plane

5.3.3 Hilbert kernel

5.3.4 Hilbert transforms

5.4 Singular integral equation of Hilbert type

Problems

53

54

55

59

60

61

63

65

67

6: HDbert space

6.1 Euclidean space

6.2 Hilbert space of sequences

6.3 Function space

6.3.1 Orthonormal system of functions

6.3.2 Gram-Schmidt orthogonalization

6.3.3 Mean square convergence

6.3.4 Riesz-Fischer theorem

6.4 Abstract Hilbert space

6.4.1 Dimension of Hilbert space

6.4.2 Complete orthonormal system

Problems

69

71

74

75

76

77

79

80

82

82

83

7: Linear operators in HUbert space

7.1 Linear integral operators 85

7.1.1 Norm of an integral operator 87

7.1.2 Hermitian adjoint 88

7.2 Bounded linear operatbrs 89

7.2.1 Matrix representation 91

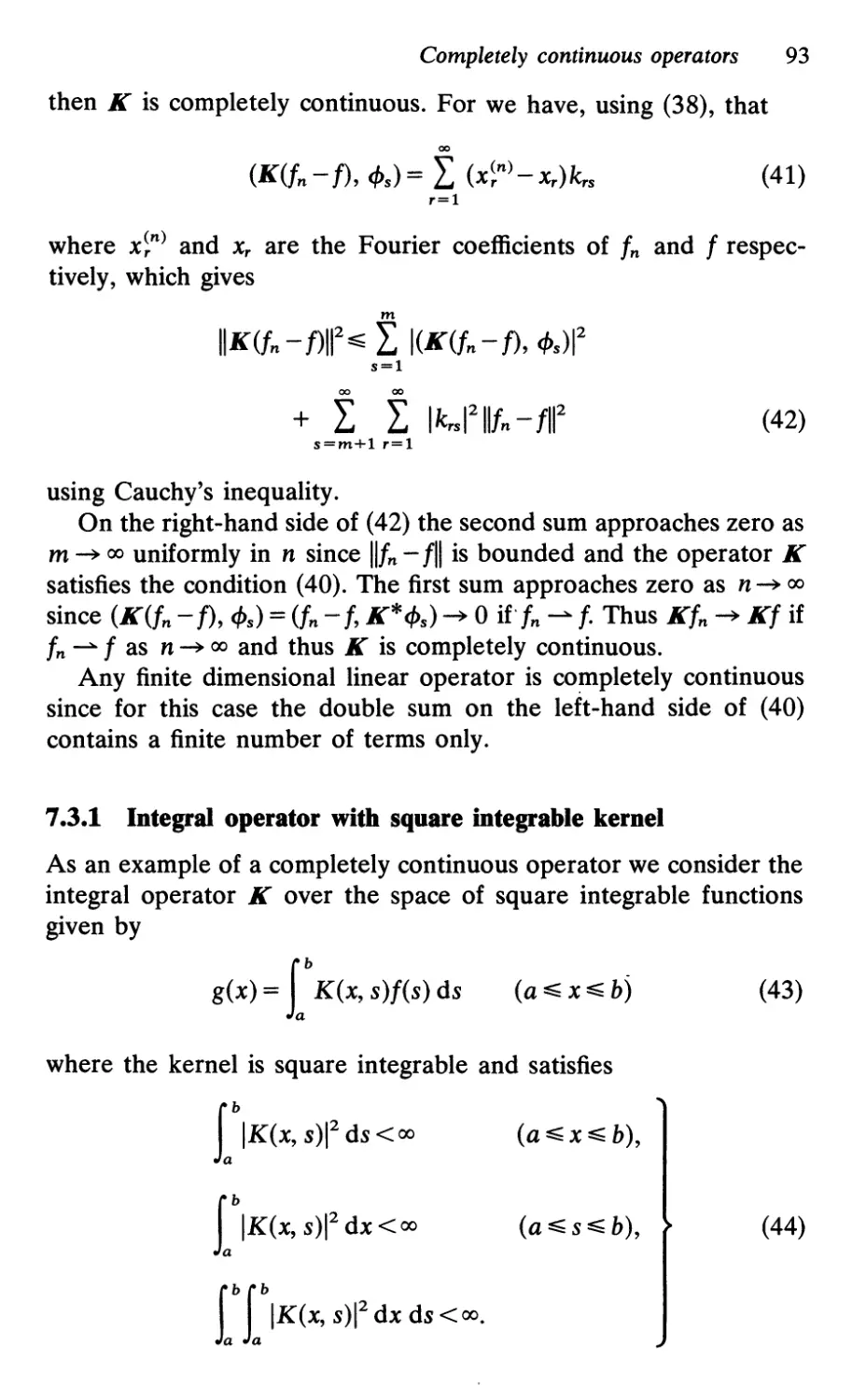

7.3 Completely continuous operators 92

7.3.1 Integral operator with square integrable kernel 93

Problems 95

8: e resolvent

8.1 Resolvent equation

8.2 Uniqueness theorem

8.3 Characteristic values and functions

98

99

101

Contents ix

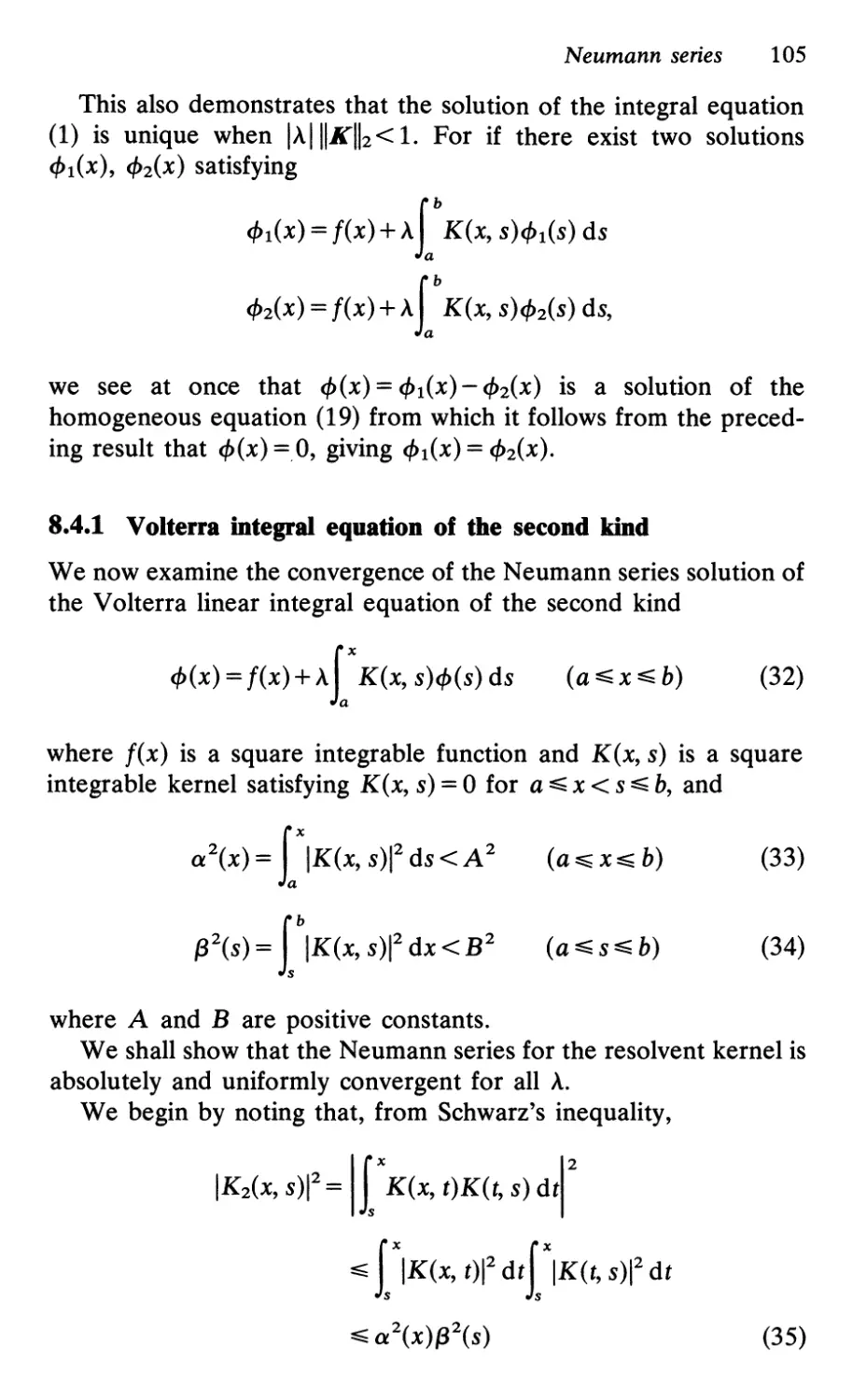

8.4 Neumann series 102

8.4.1 Volterra integral equation of the second kind 105

8.4.2 Bacher's example 109

8.5 Fredholm equation in abstract Hilbert space 109

Problems 111

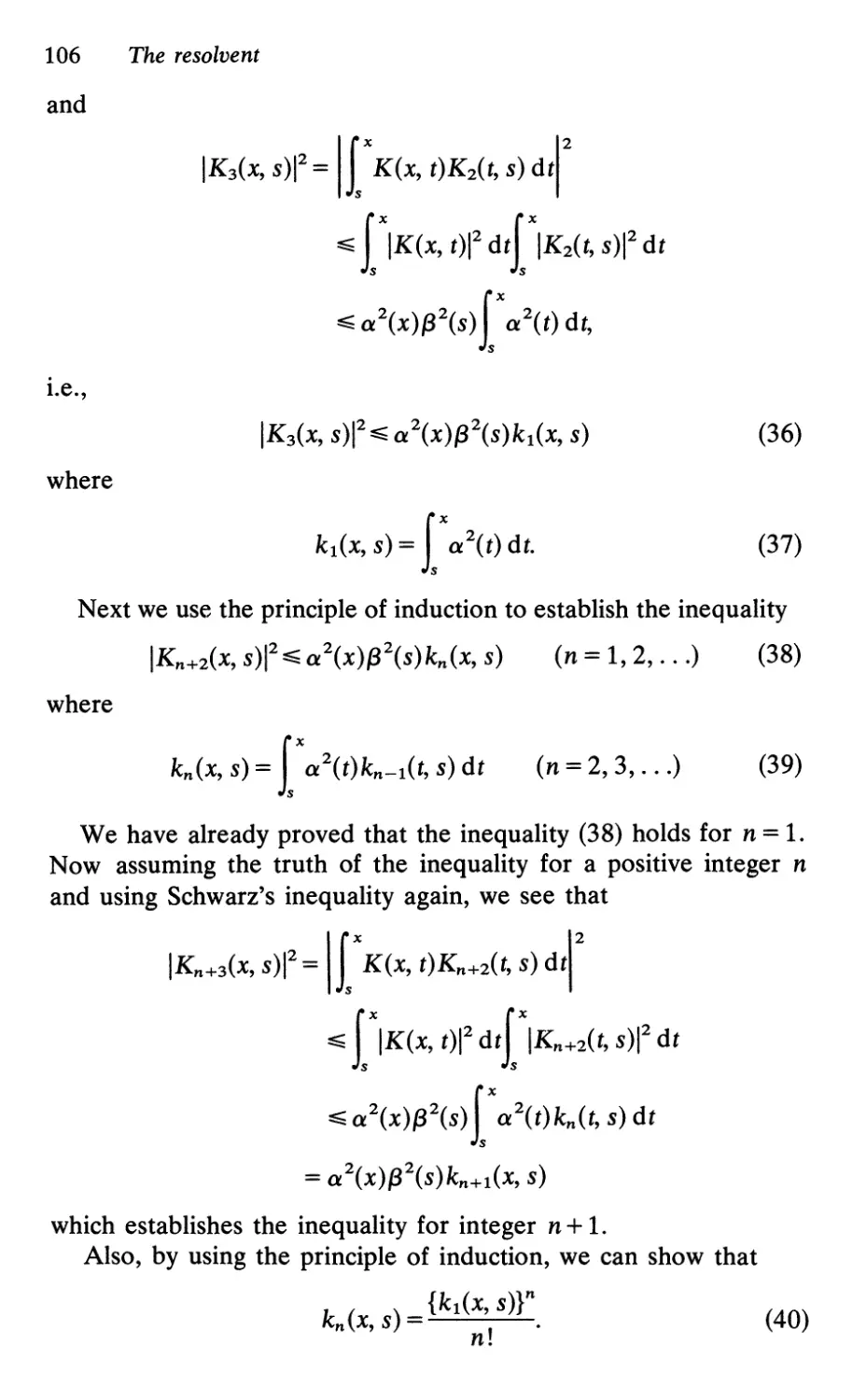

9: Fredholm theory

9.1 Degenerate kernels 114

9.2 Approximation by degenerate kernels 120

9.3 Fredholm';, theorems 121

9.3.1 Fredholm theorems for completely continuous

operators . 125

9.4 Fredholm formulae for continuous kernels 126

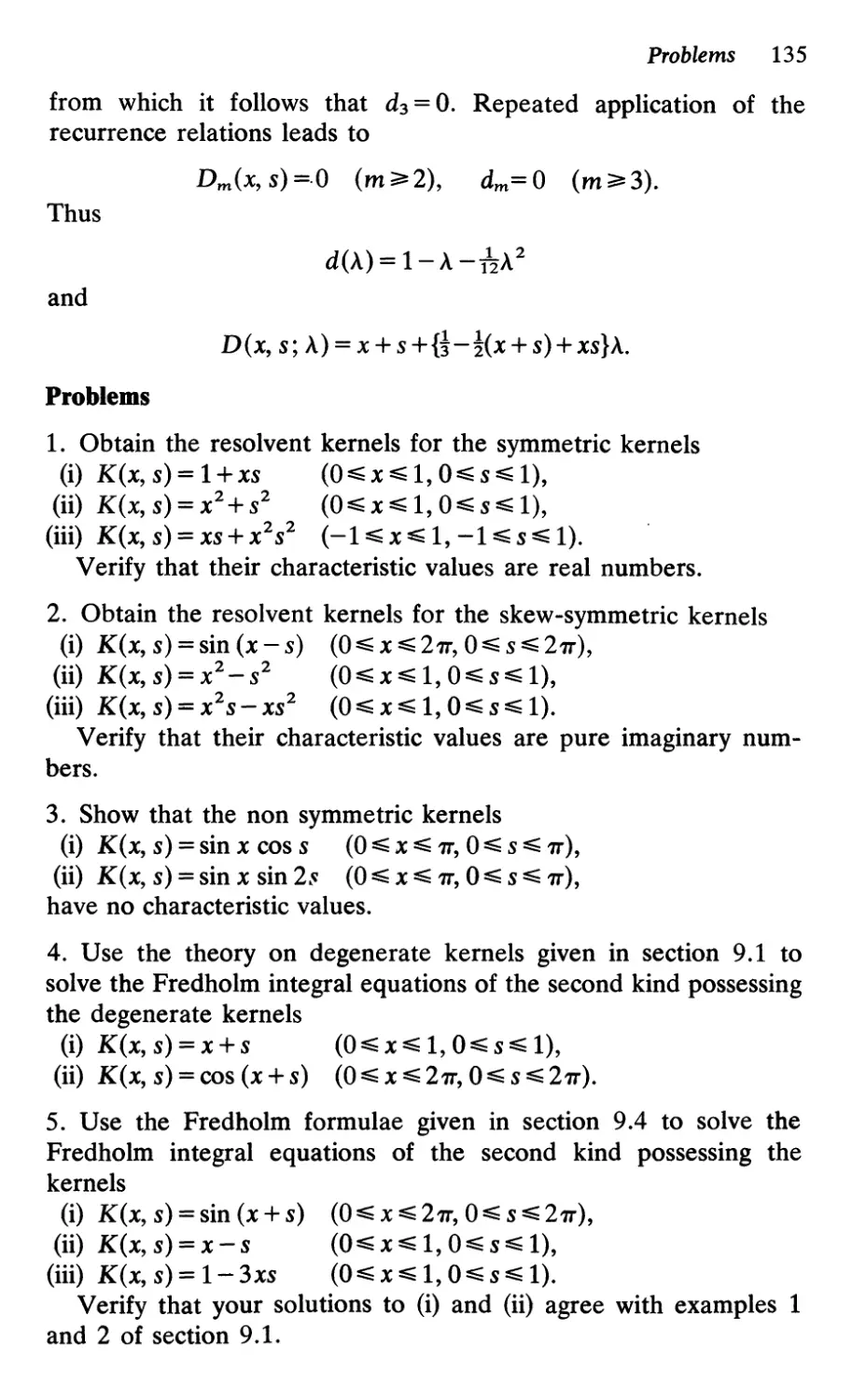

Problems 135

10: HUbert-Schmidt theory

10.1 Hermitian kernels

10.2 Spectrum of a Hilbert-Schmidt kernel

10 3 Expansion theorems

10.3.1 Hilbert-Schmidt theorem

10.3.2 Hilbert's formula

10.3.3 Expansion theorem for iterated kernels

10.4 Solution of Fredholm equation of second kind

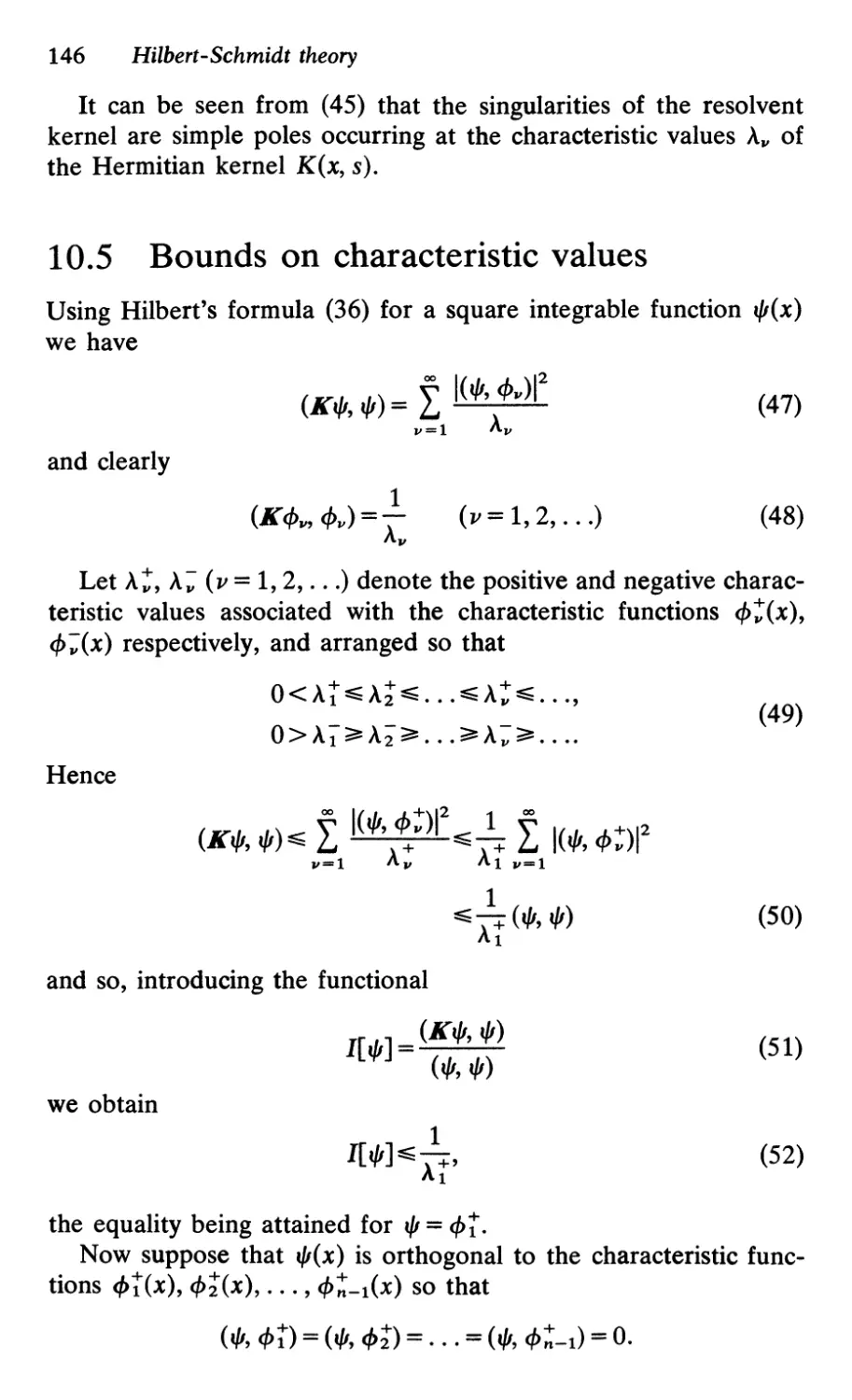

10.5 Bounds on characteristic values

10.6 Positive kernels

10.7 Mercer's theorem

10.8 Variational principles

10.8.1 Rayleigh-Ritz variational method.

Problems

Bibliography

Index

136

136

139

141

143

143

144

146

147

148

150

152

154

157

158

1

Classification of integral equations

1.1 Historical introduction

The name integral equation for any equation involving the unknown function $\phi(x)$ under the integral sign was introduced by du Bois-Reymond in 1888. However, the early history of integral equations goes back a considerable time before that to Laplace who, in 1782, used the integral transform

$$f(x) = \int_0^\infty e^{-xs}\,\Phi(s)\,ds \tag{1}$$

to solve linear difference equations and differential equations.

In connection with the use of trigonometric series for the solution of heat conduction problems, Fourier in 1822 found the reciprocal formulae

$$f(x) = \sqrt{\frac{2}{\pi}}\int_0^\infty \sin xs\,\Phi(s)\,ds, \tag{2}$$

$$\Phi(s) = \sqrt{\frac{2}{\pi}}\int_0^\infty \sin xs\,f(x)\,dx \tag{3}$$

and

$$f(x) = \sqrt{\frac{2}{\pi}}\int_0^\infty \cos xs\,\Phi(s)\,ds, \tag{4}$$

$$\Phi(s) = \sqrt{\frac{2}{\pi}}\int_0^\infty \cos xs\,f(x)\,dx \tag{5}$$

where the Fourier sine transform (3) and the cosine transform (5) provide the solutions $\Phi(s)$ of the integral equations (2) and (4) respectively in terms of the known function $f(x)$.

In 1826 Abel solved the integral equation named after him having the form

$$f(x) = \int_a^x (x-s)^{-\alpha}\,\phi(s)\,ds \tag{6}$$

where $f(x)$ is a continuous function satisfying $f(a) = 0$, and $0 < \alpha < 1$.

For $\alpha = \tfrac{1}{2}$, Abel's integral equation corresponds to the famous tautochrone problem first solved by Huygens, namely the determination of the shape of the curve with a given end point along which a particle slides under gravity in an interval of time which is independent of the starting position on the curve. Huygens showed that this curve is a cycloid.

An integral equation of the type

$$\phi(x) = f(x) + \lambda\int_0^x k(x-s)\,\phi(s)\,ds \tag{7}$$

in which the unknown function $\phi$ occurs outside as well as inside the integral sign and the variable $x$ appears as one of the limits of the integral, was obtained by Poisson in 1826 in a memoir on the theory of magnetism. He solved the integral equation by expanding $\phi(s)$ in powers of the parameter $\lambda$ but without establishing the convergence of this series. Proof of the convergence of such a series was produced later by Liouville in 1837.

Dirichlet's problem, which is the determination of a function $\psi$ having prescribed values over a certain boundary surface $S$ and satisfying Laplace's equation $\nabla^2\psi = 0$ within the region enclosed by $S$, was shown by Neumann in 1870 to be equivalent to the solution of an integral equation. He solved the integral equation by an expansion in powers of a certain parameter $\lambda$. This is similar to the procedure used earlier by Poisson and Liouville, and corresponds to a method of successive approximations.

In 1896 Volterra gave the first general treatment of the solution

of the class of linear integral equations bearing his name and

characterized by the variable x appearing as the upper limit of the

integral.

A more general class of linear integral equations having the form

$$\phi(x) = f(x) + \int_a^b K(x,s)\,\phi(s)\,ds \tag{8}$$

which includes Volterra's class of integral equations as the special case given by $K(x,s) = 0$ for $s > x$, was first discussed by Fredholm in 1900 and subsequently, in a classic article by him, in 1903. He employed a similar approach to that introduced by Volterra in 1884.

In this method the Fredholm equation (8) is regarded as the limiting form as $n \to \infty$ of a set of $n$ linear algebraic equations

$$\phi(x_r) = f(x_r) + \sum_{s=1}^{n} K(x_r, x_s)\,\phi(x_s)\,\delta_n \qquad (r = 1, \ldots, n) \tag{9}$$

where $\delta_n = (b-a)/n$ and $x_r = a + r\delta_n$. The solution of these equations can be readily obtained and Fredholm verified by direct substitution in the integral equation (8) that his limiting formula for $n \to \infty$ gave the true solution.
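The finite system (9) is easy to try numerically. The sketch below is a minimal illustration, assuming NumPy is available; the function name `solve_discretized_fredholm` and the test kernel $K(x,s) = xs$ with $f = 1$ on $[0,1]$ (the case $\lambda = 1$ of Example 1 in section 1.3.3, whose exact value at $x = 1$ is $1.75$) are our own choices, not part of the text.

```python
import numpy as np

def solve_discretized_fredholm(f, K, a, b, n):
    """Solve the n linear algebraic equations (9) for phi(x_1), ..., phi(x_n).

    Nodes are x_r = a + r*delta_n with delta_n = (b - a)/n, as in (9);
    f and K must accept NumPy arrays.
    """
    delta = (b - a) / n
    x = a + delta * np.arange(1, n + 1)               # x_1, ..., x_n
    A = np.eye(n) - delta * K(x[:, None], x[None, :])  # (I - delta_n K) phi = f
    phi = np.linalg.solve(A, f(x))
    return x, phi

# Assumed test problem: K(x, s) = x*s and f = 1 on [0, 1],
# whose exact solution at x = 1 is 1.75.
for n in (10, 100, 1000):
    x, phi = solve_discretized_fredholm(lambda x: np.ones_like(x),
                                        lambda x, s: x * s, 0.0, 1.0, n)
    print(n, phi[-1])
```

The error at $x = 1$ shrinks roughly in proportion to $1/n$, as one would expect from the rectangle rule implicit in (9).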

1.2 Linear integral equations

Integral equations which are linear involve the integral operator

$$L = \int_a^b K(x,s)\,ds \tag{10}$$

having the kernel $K(x,s)$. It satisfies the linearity condition

$$L[\lambda_1\phi_1(s) + \lambda_2\phi_2(s)] = \lambda_1 L[\phi_1(s)] + \lambda_2 L[\phi_2(s)] \tag{11}$$

where

$$L[\phi(s)] = \int_a^b K(x,s)\,\phi(s)\,ds \tag{12}$$

and $\lambda_1$ and $\lambda_2$ are constants.

Linear integral equations are named after Volterra and Fredholm

as follows:

The Fredholm equation of the first kind has the form

$$f(x) = \int_a^b K(x,s)\,\phi(s)\,ds \qquad (a \le x \le b) \tag{13}$$

Examples are given by Laplace's integral (1) and the Fourier integrals (2) and (4).

The Fredholm equation of the second kind has the form

$$\phi(x) = f(x) + \int_a^b K(x,s)\,\phi(s)\,ds \qquad (a \le x \le b) \tag{14}$$

while its corresponding homogeneous equation is

$$\phi(x) = \int_a^b K(x,s)\,\phi(s)\,ds \qquad (a \le x \le b) \tag{15}$$

The Volterra equation of the first kind has the form

$$f(x) = \int_a^x K(x,s)\,\phi(s)\,ds \qquad (a \le x \le b) \tag{16}$$

An example of such an equation is Abel's equation (6) which, however, has a special feature arising from the presence of a singular kernel

$$K(x,s) = (x-s)^{-\alpha} \qquad (0 < \alpha < 1)$$

with a singularity at $s = x$.

The Volterra equation of the second kind has the form

$$\phi(x) = f(x) + \int_a^x K(x,s)\,\phi(s)\,ds \qquad (a \le x \le b) \tag{17}$$

We see that the Volterra equations can be obtained from the corresponding Fredholm equations by setting $K(x,s) = 0$ for $a \le x < s \le b$.

It can be readily seen also that the Volterra equation (16) of the first kind can be transformed into one of the second kind by differentiation, for we have

$$f'(x) = K(x,x)\,\phi(x) + \int_a^x \frac{\partial}{\partial x}K(x,s)\,\phi(s)\,ds$$

so that provided $K(x,x)$ does not vanish in $a \le x \le b$ we obtain

$$\phi(x) = \frac{f'(x)}{K(x,x)} - \int_a^x \left[\frac{\partial K(x,s)/\partial x}{K(x,x)}\right]\phi(s)\,ds$$

1.3 Special types of kernel

1.3.1 Symmetric kernels

Integral equations with symmetric kernels satisfying

$$K(s,x) = K(x,s) \tag{18}$$

possess certain simplifying features. For this reason it is valuable to know that the integral equation

$$\psi(x) = g(x) + \int_a^b P(x,s)\,\mu(s)\,\psi(s)\,ds \tag{19}$$

with the unsymmetrical kernel $P(x,s)\mu(s)$, where however $P(s,x) = P(x,s)$, can be transformed into the integral equation (14) with symmetric kernel

$$K(x,s) = \sqrt{\mu(x)}\,P(x,s)\,\sqrt{\mu(s)} \tag{20}$$

by multiplying (19) across by $\sqrt{\mu(x)}$ and setting

$$\phi(x) = \sqrt{\mu(x)}\,\psi(x) \tag{21}$$

and

$$f(x) = \sqrt{\mu(x)}\,g(x). \tag{22}$$
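The equivalence of (19) with the symmetrized equation built from (20)-(22) can be checked on a discretized version. This is a sketch, assuming NumPy and the hypothetical data $P(x,s) = \min(x,s)$, $\mu(s) = 1 + s$ (positive, so the square roots are real) and $g = 1$ on $[0,1]$; with an equal-weight midpoint rule the relation $\phi = \sqrt{\mu}\,\psi$ holds exactly for the two discrete systems, so only round-off separates them.

```python
import numpy as np

# Hypothetical test data: P(x, s) = min(x, s) (symmetric), mu(s) = 1 + s, g = 1 on [0, 1].
n = 400
h = 1.0 / n
x = (np.arange(n) + 0.5) * h              # midpoint nodes, equal weights h
P = np.minimum(x[:, None], x[None, :])
mu = 1.0 + x
g = np.ones(n)

# Direct discretization of (19): psi = g + h * P @ (mu * psi)
psi = np.linalg.solve(np.eye(n) - h * P * mu[None, :], g)

# Symmetrized form: kernel (20), right-hand side (22), unknown (21)
r = np.sqrt(mu)
K = r[:, None] * P * r[None, :]
f = r * g
phi = np.linalg.solve(np.eye(n) - h * K, f)

print(np.allclose(K, K.T))        # the new kernel matrix is symmetric
print(np.allclose(phi, r * psi))  # and phi = sqrt(mu) * psi, as in (21)
```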

Real symmetric kernels are members of a more general class

known as Hermitian kernels which are not necessarily real and are

characterized by the relation

$$K(s,x) = \overline{K(x,s)}, \tag{23}$$

the bar denoting complex conjugate. We shall investigate the prop-

erties of integral equations with Hermitian kernels in chapter 10.

1.3.2 Kernels producing convolution integrals

A class of integral equation which is of particular interest has a

kernel of the form

$$K(x,s) = k(x-s) \tag{24}$$

depending only on the difference between the two coordinates $x$ and $s$. The type of integral which arises is

$$\int_{-\infty}^{\infty} k(x-s)\,\phi(s)\,ds \tag{25}$$

called a convolution or folding. This nomenclature comes from the situation which occurs in Volterra equations where the integral (25) becomes

$$\int_0^x k(x-s)\,\phi(s)\,ds \tag{26}$$

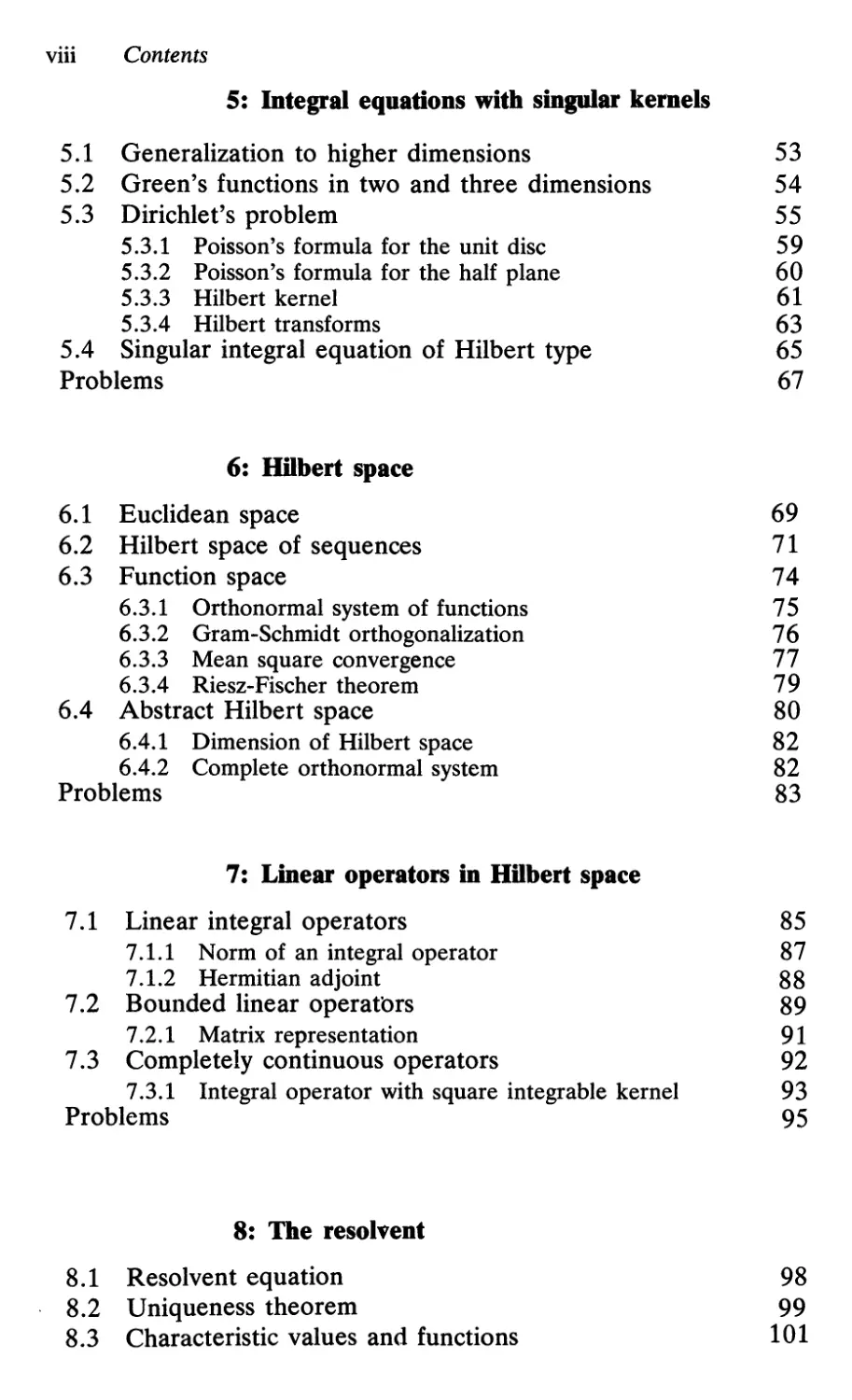

and the range of integration can be regarded as if it were folded at

s = x/2 so that the point s corresponds to the point x - s as shown in

Fig. 1.

Fig. 1. Convolution or folding (the points $0$, $s$, $x - s$ and $x$ on the range of integration).

Integral equations of the convolution type can be solved by using

various kinds of integral transform such as the Laplace and Fourier

transforms and will be discussed in detail in chapter 3.

1.3.3 Separable kernels

Another interesting type of integral equation has a kernel possessing

the separable form

$$K(x,s) = \lambda u(x)\,v(s) \tag{27}$$

The Fredholm integral equation of the second kind (14) now becomes

$$\phi(x) = f(x) + \lambda u(x)\int_a^b v(s)\,\phi(s)\,ds \tag{28}$$

and can be readily solved exactly. Thus we have, on multiplying (28) across by $v(x)$ and integrating:

$$\int_a^b v(x)\phi(x)\,dx = \int_a^b v(x)f(x)\,dx + \lambda\int_a^b v(x)u(x)\,dx\int_a^b v(s)\phi(s)\,ds$$

which gives

$$\int_a^b v(x)\phi(x)\,dx = \frac{\int_a^b v(x)f(x)\,dx}{1 - \lambda\int_a^b v(x)u(x)\,dx} \tag{29}$$

so that

$$\phi(x) = f(x) + \lambda u(x)\,\frac{\int_a^b v(s)f(s)\,ds}{1 - \lambda\int_a^b v(t)u(t)\,dt} \tag{30}$$

We see that the solution (30) can be expressed in the form

$$\phi(x) = f(x) + \lambda\int_a^b R(x,s;\lambda)\,f(s)\,ds \tag{31}$$

where

$$R(x,s;\lambda) = \frac{u(x)\,v(s)}{1 - \lambda\int_a^b v(t)u(t)\,dt} \tag{32}$$

and is called the solving kernel or resolvent kernel.

The homogeneous equation (15) corresponding to (14) becomes in the present case

$$\phi(x) = \lambda u(x)\int_a^b v(s)\,\phi(s)\,ds. \tag{33}$$

The solution $\phi(x)$ of equation (33) must satisfy

$$\int_a^b v(x)\phi(x)\,dx = \lambda\int_a^b v(x)u(x)\,dx\int_a^b v(s)\phi(s)\,ds$$

The values of $\lambda$ for which the homogeneous equation has non-trivial solutions are called characteristic values. Provided $\int_a^b v(x)u(x)\,dx \ne 0$, there exists just one value of $\lambda$ for which (33) possesses a non-trivial solution. This characteristic value $\lambda_1$ is given by

$$1 = \lambda_1\int_a^b v(x)u(x)\,dx, \tag{34}$$

the associated characteristic solution of (33) being $\phi_1(x) = c\,u(x)$ where $c$ is an arbitrary constant.

The simple separable form (27) is a special case of the class of degenerate kernels

$$K(x,s) = \lambda\sum_{i=1}^{n} u_i(x)\,v_i(s) \tag{35}$$

giving rise to integral equations which can be solved in closed analytical form as we shall show in chapter 9.

Example 1. As a simple illustration of an integral equation with separable kernel (27) we consider

$$\phi(x) = 1 + \lambda\int_0^1 xs\,\phi(s)\,ds \tag{36}$$

Here $f(x) = 1$, $K(x,s) = \lambda xs$ and so $u(x) = x$ and $v(s) = s$. It follows that the resolvent kernel is

$$R(x,s;\lambda) = \frac{xs}{1 - \lambda\int_0^1 t^2\,dt} = \frac{xs}{1 - \lambda/3} \tag{37}$$

and hence the solution to (36) is

$$\begin{aligned}\phi(x) &= 1 + \frac{\lambda x}{1 - \lambda/3}\int_0^1 s\,ds\\ &= 1 + \frac{3\lambda x}{2(3-\lambda)} \qquad (\lambda \ne 3)\end{aligned} \tag{38}$$
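Formulas (30), (32) and (34) translate directly into a short numerical routine. The sketch below is written in plain Python and uses composite Simpson quadrature for the two integrals; the names `simpson`, `solve_separable` and `characteristic_value` are our own, and the data are those of Example 1, so the result can be compared with (38) and with the characteristic value $\lambda_1 = 3$.

```python
def simpson(g, a, b, n=200):
    """Composite Simpson rule for the integral of g over [a, b] (n must be even)."""
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3

def solve_separable(f, u, v, lam, a, b):
    """Return phi from (30) for the separable kernel lam*u(x)*v(s) of (27)."""
    denom = 1 - lam * simpson(lambda t: v(t) * u(t), a, b)
    if abs(denom) < 1e-12:
        raise ValueError("lam is (numerically) the characteristic value (34)")
    c = simpson(lambda s: v(s) * f(s), a, b) / denom
    return lambda x: f(x) + lam * u(x) * c

def characteristic_value(u, v, a, b):
    """lambda_1 from (34): 1 = lambda_1 * (integral of v*u over [a, b])."""
    return 1 / simpson(lambda t: v(t) * u(t), a, b)

# Example 1: f = 1, u(x) = x, v(s) = s on [0, 1].
lam = 1.0
phi = solve_separable(lambda x: 1.0, lambda x: x, lambda s: s, lam, 0.0, 1.0)
exact = lambda x: 1 + 3 * lam * x / (2 * (3 - lam))   # formula (38)
print(phi(0.5), exact(0.5))
print(characteristic_value(lambda x: x, lambda s: s, 0.0, 1.0))
```

Simpson's rule integrates the polynomial integrands of this example exactly, so the agreement with (38) here is limited only by round-off.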

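Example 2. Another example of an integral equation with a separable kernel is

$$\phi(x) = e^x + \lambda\int_0^1 e^{ia(x-s)}\,\phi(s)\,ds \tag{39}$$

where $f(x) = e^x$, $K(x,s) = \lambda e^{ia(x-s)}$ so that $u(x) = e^{iax}$ and $v(s) = e^{-ias}$. Then the resolvent kernel is

$$R(x,s;\lambda) = \frac{e^{ia(x-s)}}{1-\lambda} \tag{40}$$

and hence the solution of (39) is

$$\begin{aligned}\phi(x) &= e^x + \frac{\lambda e^{iax}}{1-\lambda}\int_0^1 e^{(1-ia)s}\,ds\\ &= e^x + \frac{\lambda e^{iax}\left(e^{1-ia} - 1\right)}{(1-\lambda)(1-ia)}\end{aligned} \tag{41}$$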
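A quick way to gain confidence in (41) is to substitute it back into (39) and evaluate the integral numerically. The sketch below does this with Python's `cmath` module and a composite Simpson rule; the parameter values $\lambda = 0.4$ and $a = 2$ are illustrative assumptions (any $\lambda \ne 1$ would do).

```python
import cmath

def simpson(g, a, b, n=400):
    """Composite Simpson rule (works for complex-valued g); n must be even."""
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3

lam, a_par = 0.4, 2.0   # illustrative values; lambda must differ from 1

def phi(x):
    """Closed-form solution (41)."""
    return cmath.exp(x) + lam * cmath.exp(1j * a_par * x) * (
        cmath.exp(1 - 1j * a_par) - 1) / ((1 - lam) * (1 - 1j * a_par))

def residual(x):
    """phi(x) - e^x - lam * integral_0^1 e^{i a (x - s)} phi(s) ds; should vanish."""
    integral = simpson(lambda s: cmath.exp(1j * a_par * (x - s)) * phi(s), 0.0, 1.0)
    return phi(x) - cmath.exp(x) - lam * integral

print(max(abs(residual(x)) for x in (0.0, 0.3, 0.7, 1.0)))
```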

1.4 Square integrable functions and kernels

Functions $\phi(x)$ which are square integrable in the interval $a \le x \le b$ satisfy the condition

$$\int_a^b |\phi(x)|^2\,dx < \infty \tag{42}$$

where the integral is taken to be Riemann, or Lebesgue for greater generality. In the former case it is said that $\phi(x)$ is an $R^2$ function and in the latter case that it is an $L^2$ function. Continuous functions are square integrable over a finite interval since they are bounded. However the converse is not necessarily true, that is square integrable functions need not be continuous or bounded.

Kernels $K(x,s)$ defined in $a \le x \le b$, $a \le s \le b$ are said to be square integrable if they satisfy

$$\int_a^b\!\!\int_a^b |K(x,s)|^2\,dx\,ds < \infty \tag{43}$$

together with

$$\int_a^b |K(x,s)|^2\,ds < \infty \qquad (a \le x \le b) \tag{44}$$

and

$$\int_a^b |K(x,s)|^2\,dx < \infty \qquad (a \le s \le b) \tag{45}$$

where the integrals are taken to be Riemann or Lebesgue. The kernel is then called $R^2$ or $L^2$ respectively.

Kernels of particular interest are those which are singular. Thus consider a Volterra kernel of the form

$$K(x,s) = \begin{cases} \dfrac{F(x,s)}{(x-s)^{\alpha}} & (x > s)\\[1ex] 0 & (x < s)\end{cases} \tag{46}$$

where $F(x,s)$ is a continuous function and $0 < \alpha < 1$. Then $|F(x,s)| \le M$ where $M$ is a constant and so

$$\begin{aligned}\int_a^b\!\!\int_a^b |K(x,s)|^2\,dx\,ds &= \int_a^b dx\int_a^x ds\,\frac{|F(x,s)|^2}{(x-s)^{2\alpha}}\\ &\le M^2\int_a^b dx\int_a^x ds\,(x-s)^{-2\alpha}\\ &= \frac{M^2}{1-2\alpha}\int_a^b dx\,(x-a)^{1-2\alpha}\\ &= \frac{M^2(b-a)^{2-2\alpha}}{2(1-2\alpha)(1-\alpha)} \qquad (\alpha < \tfrac12)\end{aligned} \tag{47}$$

and so the double integral is finite if $0 < \alpha < \tfrac12$. However if $\alpha \ge \tfrac12$ the singular kernel (46) is not square integrable.
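The chain of equalities in (47) can be reproduced numerically. In this plain-Python sketch we take $F = M = 1$ (an assumption that turns the inequality into an equality), perform the inner integral analytically as in the third line of (47), and use the midpoint rule for the outer integral; the helper names `triangle_integral` and `bound_47` are our own. Printing values for $\alpha$ approaching $\tfrac12$ shows the double integral growing without bound.

```python
def triangle_integral(alpha, a, b, n=100000):
    """Midpoint-rule value of int_a^b dx int_a^x (x - s)^(-2 alpha) ds (F = M = 1).

    The inner integral is done analytically, (x - a)^(1 - 2 alpha)/(1 - 2 alpha),
    which is only valid for alpha < 1/2; the outer integral uses the midpoint rule.
    """
    if not 0 < alpha < 0.5:
        raise ValueError("the inner integral diverges unless 0 < alpha < 1/2")
    h = (b - a) / n
    outer = sum(((k + 0.5) * h) ** (1 - 2 * alpha) for k in range(n)) * h
    return outer / (1 - 2 * alpha)

def bound_47(alpha, a, b, M=1.0):
    """Right-hand side of (47)."""
    return M**2 * (b - a) ** (2 - 2 * alpha) / (2 * (1 - 2 * alpha) * (1 - alpha))

for alpha in (0.1, 0.25, 0.4, 0.49):
    print(alpha, triangle_integral(alpha, 0.0, 2.0), bound_47(alpha, 0.0, 2.0))
```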

1.5 Singular integral equations

An integral equation of the type

$$\phi(x) = f(x) + \lambda\int_a^b K(x,s)\,\phi(s)\,ds \tag{48}$$

is said to be singular if the range of definition is infinite, e.g., $0 < x < \infty$ or $-\infty < x < \infty$, or if the kernel is not square integrable.

Non-singular equations have a discrete spectrum, that is the associated homogeneous equation

$$\phi(x) = \lambda\int_a^b K(x,s)\,\phi(s)\,ds \tag{49}$$

has non-trivial solutions $\phi_\nu(x)$ for a finite or at most a countable, although infinite, set of characteristic values $\lambda_\nu$ of the parameter $\lambda$. Also each characteristic value $\lambda_\nu$ has a finite rank (or index), that is it has a finite number of linearly independent characteristic functions $\phi_\nu^{(1)}(x), \ldots, \phi_\nu^{(r)}(x)$.

However if the integral equation is singular by virtue of having an infinite range of definition, the spectrum of values of $\lambda$ may include a continuous segment. For example, since $\int_{-\infty}^{\infty} e^{-|t|}e^{-kt}\,dt = 2/(1-k^2)$ for $|\mathrm{Re}\,k| < 1$, it may be readily verified that the homogeneous equation

$$\phi(x) = \lambda\int_{-\infty}^{\infty} e^{-|x-s|}\,\phi(s)\,ds \tag{50}$$

has solutions of the form

$$\phi(x) = c_1 e^{-\sqrt{1-2\lambda}\,x} + c_2 e^{\sqrt{1-2\lambda}\,x} \tag{51}$$

for the continuous spectrum of values $0 < \lambda < \infty$. Equation (50) is of the convolution or difference type and will be discussed further in section 3.2 of the chapter on integral equations of the convolution type.
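The claim that every $\lambda > 0$ belongs to the spectrum of (50) can be spot-checked numerically. The sketch below assumes NumPy, truncates the infinite range to $[x-L, x+L]$ (adequate because the kernel decays faster than the trial functions grow), and splits the integral at $s = x$ where the kernel has a kink; the helper names and the sample values of $\lambda$ are our own. For $\lambda > \tfrac12$ the exponent $\sqrt{1-2\lambda}$ is imaginary and the solutions (51) oscillate.

```python
import numpy as np

def trapezoid(y, h):
    """Composite trapezoidal rule for samples y with uniform spacing h."""
    return h * (y.sum() - 0.5 * (y[0] + y[-1]))

def apply_kernel(phi, x, L=40.0, n=400001):
    """Approximate the integral of e^{-|x-s|} phi(s) over the whole real line.

    The range is truncated to [x - L, x + L] (an assumption that is adequate when
    |phi| grows more slowly than e^{|s|}) and split at s = x, where the kernel has a kink.
    """
    total = 0.0
    for s in (np.linspace(x - L, x, n), np.linspace(x, x + L, n)):
        total += trapezoid(np.exp(-np.abs(x - s)) * phi(s), s[1] - s[0])
    return total

x0 = 0.7
for lam in (0.3, 1.0, 2.5):
    k = np.sqrt(complex(1 - 2 * lam))   # exponent in (51); imaginary when lam > 1/2
    phi = lambda s, k=k: np.exp(k * s)   # the c_2 term of (51)
    print(lam, abs(lam * apply_kernel(phi, x0) - phi(x0)))
```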

Also if the integral equation has an infinite range of definition the characteristic values may have an infinite rank. Thus consider the equation

$$\phi(x) = \lambda\sqrt{\frac{2}{\pi}}\int_0^\infty \cos xs\,\phi(s)\,ds \tag{52}$$

with kernel

$$K(x,s) = \sqrt{\frac{2}{\pi}}\cos xs \tag{53}$$

Using the Fourier cosine transform (5) and its reciprocal formula (4) we have

$$\lambda\phi(x) = \sqrt{\frac{2}{\pi}}\int_0^\infty \cos xs\,\phi(s)\,ds$$

and so

$$\phi(x) = \lambda^2\phi(x)$$

giving $\lambda^2 = 1$, or $\lambda = \pm 1$ as characteristic values. Their ranks are infinite since it can be shown without difficulty that

$$\phi(x) = \sqrt{\frac{\pi}{2}}\,e^{-ax} \pm \frac{a}{a^2 + x^2} \qquad (x > 0) \tag{54}$$

are characteristic functions corresponding to the characteristic values $\lambda = \pm 1$ for all values of $a > 0$. The kernel (53) belongs to a class of singular kernels known as Weyl kernels.
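The characteristic functions (54) can be checked by computing the cosine transform numerically. This sketch assumes NumPy, truncates the integral in (52) at $s = L$ (justified here because the functions decay like $1/s^2$), and uses the trapezoidal rule; the helper name `cosine_transform` and the value $a = 1.5$ are our own choices.

```python
import numpy as np

def cosine_transform(phi, x, L=200.0, n=2000001):
    """sqrt(2/pi) * int_0^L cos(x s) phi(s) ds by the trapezoidal rule.

    Truncating at s = L is an assumption; it is adequate here because phi decays like 1/s^2.
    """
    s = np.linspace(0.0, L, n)
    y = np.cos(x * s) * phi(s)
    h = s[1] - s[0]
    return np.sqrt(2 / np.pi) * h * (y.sum() - 0.5 * (y[0] + y[-1]))

a = 1.5   # any a > 0
for sign, lam in ((+1, 1.0), (-1, -1.0)):
    phi = lambda s, sign=sign: np.sqrt(np.pi / 2) * np.exp(-a * s) + sign * a / (a**2 + s**2)
    for x in (0.5, 2.0):
        print(lam, x, lam * cosine_transform(phi, x) - phi(x))   # close to 0 by (52)
```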

1.6 Non-linear equations

In the case of non-linear equations, the spectrum of characteristic values $\lambda$ may have the interesting property that the number of solutions of the integral equation changes as we pass through particular values of $\lambda$ known as bifurcation points.

As an example we consider the simple non-linear equation

$$\phi(x) - \lambda\int_0^1 s\{\phi(s)\}^2\,ds = 1 \tag{55}$$

and investigate its real solutions $\phi(x)$. We put

$$\int_0^1 s\{\phi(s)\}^2\,ds = \alpha \tag{56}$$

in which case we have

$$\phi(x) = 1 + \lambda\alpha \tag{57}$$

so that

$$\alpha = (1 + \lambda\alpha)^2\int_0^1 s\,ds = \tfrac12(1 + \lambda\alpha)^2$$

This yields

$$\lambda^2\alpha^2 + 2(\lambda - 1)\alpha + 1 = 0 \tag{58}$$

which gives

$$\alpha = \frac{1 - \lambda \pm \sqrt{(\lambda-1)^2 - \lambda^2}}{\lambda^2} \tag{59}$$

Hence

$$\phi(x) = \frac{1 \pm \sqrt{1 - 2\lambda}}{\lambda}. \tag{60}$$

Thus there are two real solutions of the non-linear integral equation (55) if $\lambda < \tfrac12$ ($\lambda \ne 0$), one real solution $\phi(x) = 2$ if $\lambda = \tfrac12$ and no real solutions if $\lambda > \tfrac12$. This means that $\lambda = \tfrac12$ is a bifurcation point. When $\lambda = 0$ one of the solutions is $\phi(x) = 1$ while the other solution is infinite. Thus $\lambda = 0$ is a singular point.
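The change in the number of real solutions at the bifurcation point can be tabulated directly from (60). In this plain-Python sketch the names `real_solutions` and `residual` are our own; `residual` substitutes a constant $\phi$ back into (55), using $\int_0^1 s\,ds = \tfrac12$.

```python
import math

def real_solutions(lam):
    """Real (constant) solutions phi of (55), taken from (60)."""
    if lam == 0:
        return [1.0]                 # the other root of (60) is infinite at lam = 0
    disc = 1 - 2 * lam
    if disc < 0:
        return []                    # no real solutions beyond the bifurcation point
    return sorted({(1 + math.sqrt(disc)) / lam, (1 - math.sqrt(disc)) / lam})

def residual(phi, lam):
    """Left-hand side of (55) minus 1 for constant phi: phi - lam*phi^2/2 - 1."""
    return phi - lam * phi**2 / 2 - 1

for lam in (-1.0, 0.0, 0.3, 0.5, 0.7):
    sols = real_solutions(lam)
    print(lam, sols, [residual(p, lam) for p in sols])
```

Using a set before sorting makes the double root at $\lambda = \tfrac12$ appear once, matching the single solution $\phi(x) = 2$ found in the text.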

We now look at the associated homogeneous equation

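$$\phi(x) = \lambda\int_0^1 s\{\phi(s)\}^2\,ds \tag{61}$$

This gives

$$\phi(x) = \lambda\alpha \tag{62}$$

so that, for a non-trivial solution ($\alpha \ne 0$),

$$1 = \lambda^2\alpha\int_0^1 s\,ds = \tfrac12\lambda^2\alpha$$

yielding

$$\alpha = \frac{2}{\lambda^2} \tag{63}$$

and the solution

$$\phi(x) = \frac{2}{\lambda}. \tag{64}$$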

In general non-linear equations present considerable difficulties

and we shall not consider them again after this chapter.

Problems

1. Transform the Volterra integral equation of the first kind

$$x = \int_0^x (e^x + e^s)\,\phi(s)\,ds$$

into a Volterra equation of the second kind. Show that the solution $\phi$ satisfies a first order differential equation and hence solve the integral equation.

2. Solve the Fredholm equation of the second kind

$$\phi(x) = x + \lambda\int_0^1 \phi(s)\,ds$$

and show that the characteristic value of the associated homogeneous equation is $\lambda_1 = 1$.

3. Solve the Fredholm equation

$$\phi(x) = 1 + \lambda\int_0^1 e^{x+s}\,\phi(s)\,ds$$

and show that the characteristic value of the associated homogeneous equation is $\lambda_1 = 2/(e^2 - 1)$.

4. Solve the Fredholm equation

$$\phi(x) = 1 + \lambda\int_0^1 x^n s^m\,\phi(s)\,ds$$

and show that the characteristic value of the associated homogeneous equation is $\lambda_1 = m + n + 1$.

5. Solve the Fredholm equation

$$\phi(x) = x + \lambda\int_0^{\pi} \sin nx\,\sin ns\,\phi(s)\,ds$$

where $n$ is an integer, and show that the characteristic value of the associated homogeneous equation is $\lambda_1 = 2/\pi$.

6. Show that the singular integral equation

$$\phi(x) = \lambda\sqrt{\frac{2}{\pi}}\int_0^\infty \sin xs\,\phi(s)\,ds$$

has characteristic solutions

$$\phi(x) = \sqrt{\frac{\pi}{2}}\,e^{-ax} \pm \frac{x}{a^2 + x^2} \qquad (x > 0)$$

associated with characteristic values $\lambda = \pm 1$ for all $a > 0$.

7. Solve the non-linear equation

$$\phi(x) = 1 + \lambda\int_0^1 \{\phi(s)\}^2\,ds$$

and show that $\lambda = \tfrac14$ is a bifurcation point while $\lambda = 0$ is a singular point of the spectrum of characteristic values.

2

Connection with differential equations

2.1 Linear differential equations

We first observe that the first order differential equation

$$\frac{d\phi}{dx} = F(x, \phi) \qquad (a \le x \le b) \tag{1}$$

can be written immediately as the Volterra integral equation of the second kind

$$\phi(x) = \phi(a) + \int_a^x F[s, \phi(s)]\,ds. \tag{2}$$

As an interesting but simple example of the above we consider the following problem, solved by Johannes Bernoulli for the $n = 3$ case:

To find the equation $y = \phi(x)$ of the curve joining a fixed origin $O$ to a point $P$ such that the area under the curve is $1/n$th of the area of the rectangle $OXPY$ having $OP$ as diagonal for all points $P$ on the curve (see Fig. 2).

Evidently this problem is equivalent to solving the homogeneous Volterra integral equation of the second kind

$$\int_0^x \phi(s)\,ds = \frac{1}{n}\,x\phi(x) \tag{3}$$

for the unknown function $\phi(x)$ where $\phi(0) = 0$.

This equation can be solved by converting it into the differential equation

$$\phi(x) = \frac{1}{n}\{\phi(x) + x\phi'(x)\}$$

Fig. 2. Bernoulli's problem for $n = 3$; $y = x^2$ (the curve $y = \phi(x)$ from $O$ to $P$, with the rectangle $OXPY$).

or more simply

$$\phi'(x) = \frac{n-1}{x}\,\phi(x) \tag{4}$$

which has the solution

$$\phi(x) = Ax^{n-1} \tag{5}$$

For the case $n = 3$ solved by Bernoulli the curve is a parabola $y = Ax^2$.
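The solution (5) can be checked against the defining property (3): the area under $y = Ax^{n-1}$ up to $x$ should be exactly $1/n$ of the rectangle $x\,\phi(x)$. A plain-Python sketch, with the helper name `area_ratio` and the sample point $x = 1.7$ chosen by us:

```python
def area_ratio(n, x, A=1.0, m=10000):
    """(area under y = A s^(n-1) for 0 <= s <= x) / (area of the rectangle x * y(x)).

    The area is computed with the midpoint rule; by (3) the ratio should be 1/n.
    """
    h = x / m
    area = sum(A * ((k + 0.5) * h) ** (n - 1) for k in range(m)) * h
    return area / (x * A * x ** (n - 1))

for n in (2, 3, 5):
    print(n, area_ratio(n, 1.7), 1 / n)
```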

We next consider the second order differential equation

$$\frac{d^2\phi}{dx^2} = F(x, \phi) \qquad (a \le x \le b) \tag{6}$$

which can be expressed as

$$\phi(x) = \phi(a) + (x-a)\,\phi'(a) + \int_a^x (x-s)\,F[s, \phi(s)]\,ds, \tag{7}$$

this particular form being obtained on carrying out an integration by parts.

Taking $a = 0$ and $b - a = l$ this may be rewritten as

$$\phi(x) = \phi(0) + \frac{\phi(l) - \phi(0)}{l}\,x + \int_0^x (x-s)\,F[s, \phi(s)]\,ds + \frac{x}{l}\int_0^l (s-l)\,F[s, \phi(s)]\,ds \tag{8}$$

since

$$l\,\phi'(0) = \phi(l) - \phi(0) + \int_0^l (s-l)\,F[s, \phi(s)]\,ds.$$

If the differential equation is linear and we write

$$F[x, \phi(x)] = r(x) - q(x)\,\phi(x) \tag{9}$$

we obtain the Fredholm integral equation of the second kind

$$\phi(x) = f(x) + \int_0^l K(x,s)\,q(s)\,\phi(s)\,ds \tag{10}$$

where

$$f(x) = \phi(0) + \frac{\phi(l) - \phi(0)}{l}\,x - \int_0^l K(x,s)\,r(s)\,ds \tag{11}$$

and

$$K(x,s) = \begin{cases} \dfrac{s(l-x)}{l} & (s \le x)\\[1ex] \dfrac{x(l-s)}{l} & (s \ge x)\end{cases} \tag{12}$$

In the particular case of a flexible string having mass $\rho(x)$ per unit length, stretched at tension $T$ and vibrating with angular frequency $\omega$, we have $q(x) = \omega^2\rho(x)/T$ and $r(x) = 0$. If the string has fixed ends at $x = 0$ and $l$, the transverse displacement $\phi(x)$ satisfies $\phi(0) = \phi(l) = 0$ so that $f(x) = 0$. Thus we arrive at the homogeneous Fredholm integral equation

$$\phi(x) = \int_0^l K(x,s)\,q(s)\,\phi(s)\,ds \tag{13}$$

An important distinction between the differential equation and

the equivalent integral equation approach should be observed. In

the case of the differential equation formulation of a physical

problem the boundary conditions are imposed separately whereas

the integral equation formulation contains the boundary conditions

implicitly. Thus, for example, we see at once from (13) and (12) that $\phi(x)$ vanishes at $x = 0$ and $x = l$.
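Equation (13) can be turned into a matrix eigenvalue problem whose eigenvalues approximate the allowed values of $\omega^2$. The sketch below assumes NumPy and an illustrative uniform string with $\rho = T = l = 1$, so that $q = \omega^2$ and the exact characteristic values of the fixed-end string are $(k\pi/l)^2$; the midpoint discretization is our choice, picked because it keeps the matrix symmetric like the kernel (12).

```python
import numpy as np

# Assumed data for illustration: uniform string with rho = T = l = 1,
# so q(s) = omega^2 and (13) reads phi = omega^2 * int_0^l K(x, s) phi(s) ds.
l, n = 1.0, 800
h = l / n
x = (np.arange(n) + 0.5) * h                        # midpoint nodes
X, S = np.meshgrid(x, x, indexing="ij")
K = np.where(S <= X, S * (l - X), X * (l - S)) / l  # kernel (12)

mu = np.sort(np.linalg.eigvalsh(h * K))[::-1]       # eigenvalues of the operator, largest first
omega_sq = 1.0 / mu[:4]                             # characteristic values omega^2 = 1/mu
exact = (np.arange(1, 5) * np.pi / l) ** 2          # (k pi / l)^2 for the fixed-end string
print(omega_sq)
print(exact)
```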

Let us now consider the second order linear differential equation

$$Lv(x) = r(x) \qquad (0 \le x \le l) \tag{14}$$

where $L$ is the linear differential operator given by

$$L = \frac{d}{dx}\left\{p(x)\frac{d}{dx}\right\} + q(x) \tag{15}$$

and $p(x)$ has no zeros in the range of definition $0 \le x \le l$.

We shall treat this equation somewhat differently from the previous equations by setting

$$\phi(x) = \frac{d^2v}{dx^2}. \tag{16}$$

Then we have

$$\frac{dv}{dx} = v'(0) + \int_0^x \phi(s)\,ds \tag{17}$$

and

$$v(x) = v(0) + x\,v'(0) + \int_0^x (x-s)\,\phi(s)\,ds, \tag{18}$$

from which it follows that the differential equation may be expressed as the Volterra integral equation of the second kind

$$\phi(x) = f(x) + \int_0^x K(x,s)\,\phi(s)\,ds \tag{19}$$

where

$$f(x) = \{p(x)\}^{-1}\left[r(x) - v'(0)\,p'(x) - \{v(0) + x\,v'(0)\}\,q(x)\right] \tag{20}$$

and

$$K(x,s) = \{p(x)\}^{-1}\left[(s-x)\,q(x) - p'(x)\right]. \tag{21}$$
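Because (19) is of Volterra type, it can be solved by marching forward in $x$: with the trapezoidal rule the unknown $\phi(x_i)$ enters the equation at node $x_i$ only through the end-point weight, so each step is a single division. The sketch below assumes NumPy; the solver name and the test case ($p = 1$, $q = 1$, $r = 0$, $v(0) = 0$, $v'(0) = 1$, for which $v = \sin x$ and $\phi = v'' = -\sin x$, while (20) and (21) give $f(x) = -x$ and $K(x,s) = s - x$) are our own choices.

```python
import numpy as np

def solve_volterra_trapezoid(f, K, b, n):
    """Solve phi(x) = f(x) + int_0^x K(x, s) phi(s) ds on [0, b] by the trapezoidal rule.

    At node x_i the unknown phi_i appears only with weight h/2 * K(x_i, x_i),
    so the equations can be solved one at a time by forward marching.
    """
    h = b / n
    x = np.linspace(0.0, b, n + 1)
    phi = np.empty(n + 1)
    phi[0] = f(x[0])
    for i in range(1, n + 1):
        k = K(x[i], x[: i + 1])                          # K(x_i, x_0), ..., K(x_i, x_i)
        known = h * (0.5 * k[0] * phi[0] + np.dot(k[1:i], phi[1:i]))
        phi[i] = (f(x[i]) + known) / (1 - 0.5 * h * k[i])
    return x, phi

# Assumed test case: p = 1, q = 1, r = 0, v(0) = 0, v'(0) = 1, so phi = -sin x,
# f(x) = -x from (20) and K(x, s) = s - x from (21).
x, phi = solve_volterra_trapezoid(lambda x: -x, lambda x, s: s - x, 2.0, 2000)
print(np.max(np.abs(phi + np.sin(x))))
```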

2.2 Green's function

We consider the second order linear differential equation

$$Lv(x) = r(x) \qquad (a \le x \le b), \tag{22}$$

where $L$ is the linear differential operator (15), and suppose that its solution $v(x)$ satisfies homogeneous boundary conditions at $x = a$ and $x = b$, namely

$$\alpha v(a) + \beta v'(a) = 0, \qquad \alpha v(b) + \beta v'(b) = 0 \tag{23}$$

where $\alpha$ and $\beta$ are prescribed constants.

Let

$$Lv_i(x) = 0 \qquad (i = 1, 2) \tag{24}$$

where $v_1(x)$ and $v_2(x)$ are two linearly independent functions which satisfy the boundary conditions (23) prescribed at $x = a$ and $b$ respectively. We shall show that the solution of (22) may be expressed in the form

$$v(x) = \int_a^b G(x,s)\,r(s)\,ds \tag{25}$$

where

$$G(x,s) = \begin{cases} \dfrac{1}{A}\,v_1(x)\,v_2(s) & (x \le s)\\[1ex] \dfrac{1}{A}\,v_2(x)\,v_1(s) & (x \ge s)\end{cases} \tag{26}$$

and $A$ is a certain constant. $G(x,s)$ is called the Green's function.

The method we shall use involves the Dirac delta function $\delta(x)$ which vanishes for $x \ne 0$ and satisfies

$$\int_a^b \delta(x-s)\,r(s)\,ds = r(x) \tag{27}$$

Clearly $\delta(x)$ is not a function as defined in the usual sense but belongs to a class known as generalized functions. A useful representation of the Dirac function is

$$\delta(x) = \lim_{h \to 0} I(h, x) \tag{28}$$

where $I(h,x)$ is the impulse function defined as

$$I(h,x) = \begin{cases} 1/h & (0 \le x \le h)\\ 0 & \text{otherwise.}\end{cases} \tag{29}$$

Thus the representation (28) vanishes for $x \ne 0$ and has infinite magnitude at $x = 0$. Further

$$\lim_{h \to 0}\int_{-\infty}^{\infty} I(h,x)\,dx = 1, \tag{30}$$

since the integral is unity for all values of $h$, and we write this as

$$\int_{-\infty}^{\infty} \delta(x)\,dx = 1 \tag{31}$$

although care has to be exercised when interchanging the order of the limiting operation and the integration.
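The sifting property (27) can be seen emerging from the impulse representation (28)-(29). Replacing $\delta(x-s)$ by $I(h, x-s)$ turns the integral into the average of $r$ over $[x-h, x]$, which approaches $r(x)$ as $h \to 0$. A plain-Python sketch, with the helper names and the test function $r = \cos$ chosen by us:

```python
import math

def impulse(h, x):
    """The impulse function I(h, x) of (29)."""
    return 1.0 / h if 0.0 <= x <= h else 0.0

def smeared_value(r, x, h, m=1000):
    """int I(h, x - s) r(s) ds by the midpoint rule.

    I(h, x - s) is nonzero only for x - h <= s <= x, so only that interval
    is sampled; the result is the average of r over it.
    """
    ds = h / m
    s_vals = (x - h + (k + 0.5) * ds for k in range(m))
    return sum(impulse(h, x - s) * r(s) for s in s_vals) * ds

for h in (0.1, 0.01, 0.001):
    print(h, smeared_value(math.cos, 0.5, h), math.cos(0.5))
```

The error shrinks roughly in proportion to $h$, illustrating the limit in (28) rather than an exact identity at any finite $h$.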

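Now (25) has to provide a solution of (22) and so

$$LG(x,s) = \delta(x-s). \tag{32}$$

It follows that

$$\lim_{\varepsilon \to 0}\int_{s-\varepsilon}^{s+\varepsilon} LG(x,s)\,dx = 1 \tag{33}$$

which leads, since $G$ is continuous at $x = s$ so that only the term $\frac{\partial}{\partial x}\{p(x)\,\partial G/\partial x\}$ of $LG$ contributes in the limit, to

$$\lim_{\varepsilon \to 0}\left[\frac{\partial}{\partial x}G(x,s)\right]_{x=s-\varepsilon}^{x=s+\varepsilon} = \frac{1}{p(s)}, \tag{34}$$

that is,

$$v_1(s)\,v_2'(s) - v_2(s)\,v_1'(s) = \frac{A}{p(s)} \tag{35}$$

or

$$\begin{vmatrix} v_1(s) & v_2(s)\\ v_1'(s) & v_2'(s)\end{vmatrix} = \frac{A}{p(s)} \tag{36}$$

where the determinant on the left-hand side is called the Wronskian. For $A$ to be non-vanishing it is evident that $v_1$ and $v_2$ must be linearly independent.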

As a simple example we consider the operator

$$L = -c\,\frac{d^2}{dx^2} \qquad (0 \le x \le l) \tag{37}$$

and the end conditions $v(0) = v(l) = 0$. Then $p(x) = -c$ and $v_1(x) = x$, $v_2(x) = l - x$ so that $A = cl$, giving for the Green's function

$$G(x,s) = \begin{cases} \dfrac{x(l-s)}{cl} & (x \le s)\\[1ex] \dfrac{(l-x)s}{cl} & (x \ge s)\end{cases} \tag{38}$$
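The Green's function (38) can be tested by using (25) to solve a problem whose answer is known. In this plain-Python sketch we assume $c = 2$, $l = 1$ and $r(s) = \sin \pi s$; then $-c\,v'' = r$ with $v(0) = v(1) = 0$ is solved by $v(x) = \sin(\pi x)/(c\pi^2)$. The helper names are our own.

```python
import math

def green(x, s, c=1.0, l=1.0):
    """Green's function (38) for L = -c d^2/dx^2 on [0, l] with v(0) = v(l) = 0."""
    return x * (l - s) / (c * l) if x <= s else (l - x) * s / (c * l)

def solve_with_green(r, x, c=1.0, l=1.0, m=20000):
    """v(x) = int_0^l G(x, s) r(s) ds, formula (25), by the midpoint rule."""
    ds = l / m
    return sum(green(x, (k + 0.5) * ds, c, l) * r((k + 0.5) * ds) for k in range(m)) * ds

# Assumed test: c = 2, l = 1, r(s) = sin(pi s); exact v(x) = sin(pi x) / (c pi^2).
c = 2.0
for x in (0.25, 0.5, 0.9):
    print(x, solve_with_green(lambda s: math.sin(math.pi * s), x, c),
          math.sin(math.pi * x) / (c * math.pi**2))
```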

2.3 Influence function

.

We consider a string of length 1 at tension T. The displacement

G(x, s) of the string at the point with coordinate x, resulting from

the application of a unit force perpendicular to the string at the

point with coordinate s, is called the influence function.

Now the displa ement G(s, s) of the string at the point s,

assumed to be small, satisfies the equilibrium equation (see Fig. 3):

TG(S,S) e + ,1S )=1

(39)

which yields

G( ) = s(l-s)

s, s Tl

and so it follows that the influence function is given by

$$G(x, s) = \begin{cases} \dfrac{x(l - s)}{Tl} & (x \le s) \\[2ex] \dfrac{s(l - x)}{Tl} & (x \ge s) \end{cases} \qquad (41)$$

Fig. 3. Displaced string.

Comparison with (38) shows that the influence function derived above is just the Green's function for the linear differential operator

$$L = -T\, \frac{d^2}{dx^2}. \qquad (42)$$

Now suppose that we apply a loading force $F(x)$ per unit length to the string. Then the displacement of the string at the point with coordinate $x$ is given by

$$\phi(x) = \int_0^l G(x, s) F(s)\, ds \qquad (43)$$

using the principle of superposition. In the case of a horizontal wire at tension $T$, possessing mass $\rho(x)$ per unit length, the loading due to the force of gravity is $F(x) = -g\rho(x)$ and so the displacement of the wire becomes

$$\phi(x) = -g \int_0^l G(x, s)\, \rho(s)\, ds. \qquad (44)$$

As a further example we consider a shaft of length $l$ and mass $\rho(x)$ per unit length. If the loading at the point with coordinate $s$ is given by $F(s)$ per unit length and $G(x, s)$ is the influence function of the shaft, then the displacement $\phi(x)$ at the point $x$ is again given by (43). Let us now suppose that the shaft is rotating with angular velocity $\omega$. Then the loading due to the rotation is given by the centrifugal force

$$F(s) = \omega^2 \rho(s)\, \phi(s), \qquad (45)$$

and so we obtain the following homogeneous integral equation for the displacement of the shaft:

$$\phi(x) = \omega^2 \int_0^l G(x, s)\, \rho(s)\, \phi(s)\, ds. \qquad (46)$$

The balancing of the elastic and centrifugal forces can occur only for certain discrete values of $\omega$, known as the critical speeds. These correspond to the values of $\omega^2$ for which the integral equation (46) has non-vanishing solutions, that is, to its characteristic values.
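The critical speeds can also be estimated numerically. The sketch below is illustrative only and rests on two hypothetical assumptions:

- the shaft's influence function is taken to be the string function (41);
- $\rho$ is taken to be constant.

Under these assumptions (46) is equivalent to $-T\phi'' = \omega^2\rho\phi$ with $\phi(0) = \phi(l) = 0$, whose smallest characteristic value is $\omega^2 = \pi^2 T/(\rho l^2)$. A real shaft would need its own influence function.

The method works as follows. The integral operator is discretized with the trapezoidal rule (a Nyström method, which is not described in the text). Power iteration then finds the largest eigenvalue $\mu$ of the resulting matrix, and $\omega^2 = 1/\mu$. The names `influence` and `lowest_characteristic_value` are hypothetical.

```python
import math

def influence(x, s, T, l):
    """String influence function (41), used as a hypothetical stand-in for the shaft's G."""
    return x * (l - s) / (T * l) if x <= s else s * (l - x) / (T * l)

def lowest_characteristic_value(T, l, rho, n=100, iterations=60):
    """Estimate the smallest omega^2 for which (46) has a non-vanishing solution.

    Nystrom step: the trapezoidal rule turns phi -> int_0^l G(x, s) rho(s) phi(s) ds
    into a matrix A; power iteration gives its dominant eigenvalue mu, and omega^2 = 1/mu.
    """
    h = l / n
    xs = [j * h for j in range(n + 1)]
    w = [h * (0.5 if j in (0, n) else 1.0) for j in range(n + 1)]
    A = [[influence(xi, sj, T, l) * rho(sj) * wj for sj, wj in zip(xs, w)] for xi in xs]
    v = [1.0] * (n + 1)
    mu = 1.0
    for _ in range(iterations):
        Av = [sum(a * b for a, b in zip(row, v)) for row in A]
        mu = max(abs(c) for c in Av)      # normalise so that max |v| = 1
        v = [c / mu for c in Av]
    return 1.0 / mu

print(lowest_characteristic_value(1.0, 1.0, lambda s: 1.0), math.pi ** 2)
```

With $T = l = \rho = 1$ the estimate should agree with $\pi^2$ to roughly four significant figures; increasing `n` refines it.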


Problems

1. Solve the Volterra equations of the second kind

(i) $\phi(x) = 1 + \int_0^x \phi(s)\, ds$

(ii) $\phi(x) = 1 + 2\int_0^x s\,\phi(s)\, ds$

by finding the solutions of the equivalent differential equations.

2. Solve the Volterra equation

$$x\,\phi(x) = x^n + \int_0^x \phi(s)\, ds.$$

3. Solve the Volterra equation

$$\phi(x) = f(x) + \lambda \int_0^x (x - s)\, \phi(s)\, ds$$

for (i) $f(x) = 1$, (ii) $f(x) = x$, and $\lambda = \pm 1$.

4. Solve the Volterra equation

$$\phi(x) = f(x) + \lambda \int_0^x \sin(x - s)\, \phi(s)\, ds$$

for (i) $f(x) = x$ and $\lambda = 1$, (ii) $f(x) = e^{-x}$ and $\lambda = 2$.

5. Show that the Volterra equation of the first kind

$$f(x) = \int_0^x \frac{(x - s)^{n-1}}{(n - 1)!}\, \phi(s)\, ds$$

has the continuous solution $\phi(s) = f^{(n)}(s)$ when $f(x)$ has continuous derivatives $f'(x), f''(x), \ldots, f^{(n)}(x)$, and $f(x)$ and its first $n - 1$ derivatives vanish at $x = 0$.

6. Show that the $n$th order linear differential equation

$$\frac{d^n y}{dx^n} - \lambda \sum_{r=1}^{n} a_r(x)\, \frac{d^{n-r} y}{dx^{n-r}} = f(x)$$

where $y(0) = y'(0) = \cdots = y^{(n-1)}(0) = 0$, is equivalent to the Volterra equation of the second kind

$$\phi(x) = f(x) + \lambda \int_0^x \sum_{r=1}^{n} a_r(x)\, \frac{(x - s)^{r-1}}{(r - 1)!}\, \phi(s)\, ds$$

where $\phi(x) = d^n y/dx^n$.

7. Show that the solution of the differential equation

$$\frac{d^2\phi}{dx^2} + \omega^2 \phi = F[x, \phi(x)] \qquad (0 \le x \le l)$$

obeying the end conditions $\phi(0) = \phi(l) = 0$, satisfies the integral equation

$$\phi(x) = \int_0^l G(x, s)\, F[s, \phi(s)]\, ds$$

where

$$G(x, s) = \begin{cases} -(\omega \sin \omega l)^{-1} \sin \omega x\, \sin \omega(l - s) & (x \le s) \\ -(\omega \sin \omega l)^{-1} \sin \omega(l - x)\, \sin \omega s & (x \ge s) \end{cases}$$

is the Green's function for the linear differential operator $d^2/dx^2 + \omega^2$.

3

Integral equations of the convolution type

In the present chapter we shall be concerned with integral equations whose kernels have the form

$$K(x, s) = k(x - s) \qquad (1)$$

which is a function only of the difference between the two coordinates $x$ and $s$. The method of solution generally involves the use of integral transforms. Hence, before proceeding to consider the various kinds of convolution type integral equations, we shall briefly discuss the more important forms of integral transform.

3.1 Integral transforms

We have already mentioned Laplace and Fourier transforms in the

historical introduction given in chapter 1.

The Laplace transform of a function $\phi(x)$ is given by

$$\Phi(u) = \int_0^\infty e^{-ux} \phi(x)\, dx \qquad (2)$$

and the basic result which enables us to solve integral equations of the convolution type is the convolution theorem. It states that if $K(u)$ is the Laplace transform of $k(x)$ then $K(u)\Phi(u)$ is the Laplace transform of

$$\int_0^x k(x - s)\, \phi(s)\, ds. \qquad (3)$$

To verify that this is correct, without any attempt at a rigorous argument, we see that the Laplace transform of (3) is

$$\int_0^\infty e^{-ux}\, dx \int_0^x k(x - s)\, \phi(s)\, ds = \int_0^\infty e^{-ut} k(t)\, dt \int_0^\infty e^{-us} \phi(s)\, ds = K(u)\Phi(u)$$

on setting $t = x - s$ and noting that we may take $k(x)$ to vanish for $x < 0$.

In addition to the sine and cosine transforms (2) and (4) referred to in section 1.1, we have the exponential Fourier transform of a function $\phi(x)$, defined as

$$\Phi(u) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{iux} \phi(x)\, dx \qquad (4)$$

and the reciprocal formula

$$\phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-iux} \Phi(u)\, du. \qquad (5)$$

Then, if $K(u)$ is the Fourier transform of $k(x)$, the convolution theorem for Fourier transforms states that $\sqrt{2\pi}\, K(u)\Phi(u)$ is the Fourier transform of

$$\int_{-\infty}^{\infty} k(x - s)\, \phi(s)\, ds \qquad (6)$$

which can be easily verified as for the Laplace transform.

Another valuable integral transform is the Mellin transform

$$\Phi(u) = \int_0^\infty x^{u-1} \phi(x)\, dx \qquad (7)$$

and the corresponding convolution theorem states that $K(u)\Phi(1 - u)$ is the Mellin transform of

$$\int_0^\infty k(xs)\, \phi(s)\, ds \qquad (8)$$

where $K(u)$ is the Mellin transform of $k(x)$. In fact, again without any attempt at rigour, we have that the Mellin transform of (8) is

$$\int_0^\infty x^{u-1}\, dx \int_0^\infty k(xs)\, \phi(s)\, ds = \int_0^\infty t^{u-1} k(t)\, dt \int_0^\infty s^{-u} \phi(s)\, ds = K(u)\Phi(1 - u)$$

on setting $t = xs$.

Throughout the following sections we shall assume that all the

functions arising in the integral equations satisfy suitable conditions

which permit all the transformations to be performed with validity.*

* For details see E. C. Titchmarsh, Introduction to the Theory of Fourier

Integrals, 2nd ed., Oxford University Press, 1948.


3.2 Fredholm equation of the second kind

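We consider firstly Fredholm equations of the type

$$\phi(x) = f(x) + \int_{-\infty}^{\infty} k(x - s)\, \phi(s)\, ds \qquad (-\infty < x < \infty) \qquad (9)$$

A formal solution of this equation can be obtained by introducing the Fourier transforms

$$\Phi(u) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{iux} \phi(x)\, dx, \qquad (10)$$

$$F(u) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{iux} f(x)\, dx, \qquad (11)$$

$$K(u) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{iux} k(x)\, dx. \qquad (12)$$

Using the convolution theorem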

$$K(u)\Phi(u) = T\left[ \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} k(x - s)\, \phi(s)\, ds \right] \qquad (13)$$

where $T$ denotes the Fourier integral operator

$$T = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{iux}\, dx, \qquad (14)$$

we obtain

$$\Phi(u) = F(u) + \sqrt{2\pi}\, K(u)\Phi(u) \qquad (15)$$

as a result of operating with $T$ on both sides of (9). This gives

$$\Phi(u) = \frac{F(u)}{1 - \sqrt{2\pi}\, K(u)} \qquad (16)$$

which provides the solution to the integral equation (9) in the form

$$\phi(x) = T^{-1}[\Phi(u)] = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} \frac{e^{-ixu} F(u)}{1 - \sqrt{2\pi}\, K(u)}\, du \qquad (17)$$

on using the reciprocal formula (5).

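Let us now consider the homogeneous equation

$$\phi(x) = \int_{-\infty}^{\infty} k(x - s)\, \phi(s)\, ds. \qquad (18)$$

The solution to this can be written

$$\phi(x) = \sum_{\nu} \sum_{p=1}^{n_\nu} c_{\nu,p}\, x^{p-1} e^{-i\omega_\nu x} \qquad (19)$$

where the $c_{\nu,p}$ are constants, the $\omega_\nu$ are the zeros of $1 - \sqrt{2\pi}\, K(u)$, and $n_\nu$ is the order of the multiplicity of the zero $\omega_\nu$.

Thus we have that

$$1 = \int_{-\infty}^{\infty} k(t)\, e^{i\omega_\nu t}\, dt \qquad (20)$$

on setting $u = \omega_\nu$ in (12), and

$$0 = \int_{-\infty}^{\infty} k(t)\, t^{p-1} e^{i\omega_\nu t}\, dt \qquad (p = 2, \ldots, n_\nu) \qquad (21)$$

on differentiating both sides of (12) $p - 1$ times with respect to $u$ and setting $u = \omega_\nu$. Hence

$$1 = \int_{-\infty}^{\infty} k(t) \left( 1 - \frac{t}{x} \right)^{p-1} e^{i\omega_\nu t}\, dt \qquad (22)$$

and so, setting $t = x - s$, we obtain

$$x^{p-1} e^{-i\omega_\nu x} = \int_{-\infty}^{\infty} k(x - s)\, s^{p-1} e^{-i\omega_\nu s}\, ds \qquad (23)$$

which shows that each term in the sum on the right-hand side of (19) is a solution of (18).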

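Example 1. As a first example we consider the case

$$f(x) = e^{-|x|} \qquad (24)$$

$$k(x) = \begin{cases} \lambda e^{x} & (x < 0) \\ 0 & (x > 0) \end{cases} \qquad (25)$$

Then

$$F(u) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-|x| + iux}\, dx = \sqrt{\frac{2}{\pi}}\, \frac{1}{1 + u^2} \qquad (26)$$

while

$$K(u) = \frac{\lambda}{\sqrt{2\pi}} \int_{-\infty}^{0} e^{x + iux}\, dx = \frac{\lambda}{\sqrt{2\pi}}\, \frac{1}{1 + iu} \qquad (27)$$

and so it follows that the solution is given by

$$\phi(x) = \frac{1}{\pi} \int_{-\infty}^{\infty} \frac{e^{-ixu}\, du}{(1 - iu)(1 - \lambda + iu)}. \qquad (28)$$

Then if $0 < \lambda < 1$ we may apply Cauchy's residue theorem to evaluate this integral, obtaining the particular solution

$$\phi(x) = \begin{cases} \dfrac{2e^{-x}}{2 - \lambda} & (x > 0) \\[2ex] \dfrac{2e^{(1-\lambda)x}}{2 - \lambda} & (x < 0) \end{cases} \qquad (29)$$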

Since there is just one zero, having order of multiplicity 1, of $1 - \sqrt{2\pi}\, K(u) = (1 - \lambda + iu)/(1 + iu)$ at $u = i(1 - \lambda)$, it follows that the solution of the associated homogeneous equation

$$\phi(x) = \lambda \int_x^\infty e^{x - s} \phi(s)\, ds \qquad (30)$$

is just

$$\phi(x) = C e^{(1-\lambda)x} \qquad (31)$$

as can be readily verified by inspection.

Hence the general solution is given by

$$\phi(x) = \begin{cases} \dfrac{2e^{-x}}{2 - \lambda} + C e^{(1-\lambda)x} & (x > 0) \\[2ex] \left( \dfrac{2}{2 - \lambda} + C \right) e^{(1-\lambda)x} & (x < 0) \end{cases} \qquad (32)$$

where $\lambda$ is confined to the range of values $0 < \lambda < 1$.
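As a sketch (not taken from the text), (29) and (32) can be confirmed by substituting them back into (9). For the data (24) and (25), equation (9) reads $\phi(x) = e^{-|x|} + \lambda\int_x^\infty e^{x - s}\phi(s)\,ds$. The Python below evaluates this with Simpson's rule, truncating the infinite range at an assumed cutoff. The residual should be at rounding level for any $0 < \lambda < 1$ and any constant $C$. The helper names are illustrative.

```python
import math

def simpson(g, a, b, n=4000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = g(a) + g(b)
    for j in range(1, n):
        total += (4 if j % 2 else 2) * g(a + j * h)
    return total * h / 3

def general_solution(x, lam, C=0.0):
    """Equation (32); C = 0 recovers the particular solution (29)."""
    if x > 0:
        return 2 * math.exp(-x) / (2 - lam) + C * math.exp((1 - lam) * x)
    return (2 / (2 - lam) + C) * math.exp((1 - lam) * x)

def residual(x, lam, C=0.0, cutoff=120.0):
    """phi(x) - e^{-|x|} - lam * int_x^cutoff e^{x-s} phi(s) ds for phi taken from (32)."""
    phi = lambda s: general_solution(s, lam, C)
    g = lambda s: math.exp(x - s) * phi(s)
    if x < 0:
        # split at s = 0, where phi has a corner, so each Simpson piece is smooth
        integral = simpson(g, x, 0.0) + simpson(g, 0.0, cutoff)
    else:
        integral = simpson(g, x, cutoff)
    return phi(x) - math.exp(-abs(x)) - lam * integral

for lam in (0.3, 0.8):
    print(lam, [residual(x, lam, C=1.5) for x in (-1.5, 0.7, 2.5)])
```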

Example 2. As a second example we discuss the Lalesco-Picard equation, for which

$$k(x) = \lambda e^{-|x|}. \qquad (33)$$

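We shall suppose that $f(x)$, and hence $F(u)$, are not specified, in which case it is convenient to rewrite the solution (17) in the form

$$\phi(x) = f(x) + \int_{-\infty}^{\infty} e^{-ixu} F(u) M(u)\, du \qquad (34)$$

where

$$M(u) = \frac{K(u)}{1 - \sqrt{2\pi}\, K(u)} \qquad (35)$$

and

$$f(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-ixu} F(u)\, du. \qquad (36)$$

For then, using the convolution theorem for Fourier transforms, we have

$$\int_{-\infty}^{\infty} e^{-ixu} F(u) M(u)\, du = \int_{-\infty}^{\infty} f(u)\, m(x - u)\, du \qquad (37)$$

where

$$m(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-ixu} M(u)\, du, \qquad (38)$$

so that the solution (34) is given by

$$\phi(x) = f(x) + \int_{-\infty}^{\infty} f(u)\, m(x - u)\, du. \qquad (39)$$

Now in the present example

$$K(u) = \frac{\lambda}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-|x| + iux}\, dx = \sqrt{\frac{2}{\pi}}\, \frac{\lambda}{1 + u^2}, \qquad (40)$$

and so

$$M(u) = \sqrt{\frac{2}{\pi}}\, \frac{\lambda}{1 + u^2 - 2\lambda} \qquad (41)$$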

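from which it follows that

$$m(x) = \frac{1}{\pi} \int_{-\infty}^{\infty} \frac{\lambda e^{-ixu}}{1 + u^2 - 2\lambda}\, du = \frac{\lambda}{\sqrt{1 - 2\lambda}}\, e^{-\sqrt{1 - 2\lambda}\, |x|} \qquad (42)$$

provided $\lambda < \tfrac{1}{2}$, using Cauchy's residue theorem. Hence a particular solution of the integral equation is

$$\phi(x) = f(x) + \frac{\lambda}{\sqrt{1 - 2\lambda}} \int_{-\infty}^{\infty} f(u)\, e^{-\sqrt{1 - 2\lambda}\, |x - u|}\, du. \qquad (43)$$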

Turning our attention to the homogeneous equation

$$\phi(x) = \lambda \int_{-\infty}^{\infty} e^{-|x - s|} \phi(s)\, ds \qquad (44)$$

we note that there are two zeros, both having multiplicity of order 1, of

$$1 - \sqrt{2\pi}\, K(u) = \frac{1 + u^2 - 2\lambda}{1 + u^2} \qquad (45)$$

at $u = \pm i\sqrt{1 - 2\lambda}$, so that the solution of (44) takes the form

$$\phi(x) = c_1 e^{-\sqrt{1 - 2\lambda}\, x} + c_2 e^{\sqrt{1 - 2\lambda}\, x} \qquad (46)$$

where, for the integral occurring in (44) to have a meaning, the real part of $\sqrt{1 - 2\lambda}$ must satisfy

$$\operatorname{Re} \sqrt{1 - 2\lambda} < 1. \qquad (47)$$

Thus all values of $\lambda$ in the range $0 < \lambda < \infty$ are allowed, but if $\lambda > \tfrac{1}{2}$ the solution may be more suitably written as

$$\phi(x) = a_1 \sin\left( \sqrt{2\lambda - 1}\, x \right) + a_2 \cos\left( \sqrt{2\lambda - 1}\, x \right). \qquad (48)$$

However, when $\lambda = \tfrac{1}{2}$, $1 - \sqrt{2\pi}\, K(u)$ has a single zero at $u = 0$ with multiplicity of order 2, in which case the general solution of the homogeneous equation is

$$\phi(x) = c_1 + c_2 x. \qquad (49)$$
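The small numerical sketch below, with illustrative parameter choices, checks that the homogeneous solutions (46), (48) and (49) reproduce themselves under the operator in (44). The infinite range is truncated to $|s - x| \le 80$. This assumption is harmless here because the integrands decay exponentially in every case tested. The function names are hypothetical.

```python
import math

def simpson(g, a, b, n=4000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = g(a) + g(b)
    for j in range(1, n):
        total += (4 if j % 2 else 2) * g(a + j * h)
    return total * h / 3

def lalesco_picard_operator(phi, x, lam, half_width=80.0):
    """lam * int e^{-|x - s|} phi(s) ds over |s - x| <= half_width, split at the corner s = x."""
    g = lambda s: math.exp(-abs(x - s)) * phi(s)
    return lam * (simpson(g, x - half_width, x) + simpson(g, x, x + half_width))

beta_small = math.sqrt(1 - 2 * 0.3)   # lambda = 0.3 < 1/2, case (46)
beta_large = math.sqrt(2 * 2.5 - 1)   # lambda = 2.5 > 1/2, case (48)
cases = [
    (0.3, lambda s: math.exp(beta_small * s)),
    (0.3, lambda s: math.exp(-beta_small * s)),
    (0.5, lambda s: 1.0),             # case (49)
    (0.5, lambda s: s),
    (2.5, lambda s: math.cos(beta_large * s)),
    (2.5, lambda s: math.sin(beta_large * s)),
]
for lam, phi in cases:
    for x in (-1.3, 0.8):
        print(lam, x, phi(x), lalesco_picard_operator(phi, x, lam))
```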

3.3 Volterra equation of the second kind

If we set $f(x) = 0$, $k(x) = 0$ and $\phi(x) = 0$ for $x < 0$ in the Fredholm equation (9) of the second kind considered in section 3.2, we obtain the integral equation

$$\phi(x) = f(x) + \int_0^x k(x - s)\, \phi(s)\, ds \qquad (x > 0) \qquad (50)$$

which is a Volterra equation of the second kind with a convolution type integral.

Equation (50) can be solved most conveniently by introducing the Laplace transforms

$$\Phi(u) = \int_0^\infty e^{-ux} \phi(x)\, dx, \qquad (51)$$

$$F(u) = \int_0^\infty e^{-ux} f(x)\, dx, \qquad (52)$$

$$K(u) = \int_0^\infty e^{-ux} k(x)\, dx. \qquad (53)$$

Applying the convolution theorem for Laplace transforms

$$K(u)\Phi(u) = L \int_0^x k(x - s)\, \phi(s)\, ds \qquad (54)$$

where $L$ denotes the Laplace integral operator

$$L = \int_0^\infty e^{-ux}\, dx, \qquad (55)$$

we find that

$$\Phi(u) = F(u) + K(u)\Phi(u) \qquad (56)$$

which yields

$$\Phi(u) = \frac{F(u)}{1 - K(u)} = F(u) + F(u)M(u) \qquad (57)$$

where

$$M(u) = \frac{K(u)}{1 - K(u)}. \qquad (58)$$

Hence the solution of (50) can be written as

$$\phi(x) = f(x) + \int_0^x m(x - s)\, f(s)\, ds \qquad (59)$$

where

$$M(u) = \int_0^\infty e^{-ux} m(x)\, dx. \qquad (60)$$

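Example 1. We examine first the simple case where the kernel is

$$k(x) = \begin{cases} \lambda x & (x > 0) \\ 0 & (x < 0) \end{cases} \qquad (61)$$

Then we have

$$K(u) = \lambda \int_0^\infty e^{-ux} x\, dx = \frac{\lambda}{u^2} \qquad (62)$$

so that

$$M(u) = \frac{\lambda}{u^2 - \lambda} = \frac{\sqrt{\lambda}}{2} \left( \frac{1}{u - \sqrt{\lambda}} - \frac{1}{u + \sqrt{\lambda}} \right) \qquad (63)$$

and hence

$$m(x) = \frac{\sqrt{\lambda}}{2} \left( e^{\sqrt{\lambda}\, x} - e^{-\sqrt{\lambda}\, x} \right). \qquad (64)$$

Thus the solution to the integral equation (50), as given by (59), is

$$\phi(x) = f(x) + \frac{\sqrt{\lambda}}{2} \int_0^x f(s) \left\{ e^{\sqrt{\lambda}(x - s)} - e^{-\sqrt{\lambda}(x - s)} \right\} ds. \qquad (65)$$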
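The sketch below cross-checks (65) independently of the Laplace transform. It marches the Volterra equation (50) forward in $x$ with the trapezoidal rule, a standard quadrature scheme that the text does not use. For $k(x) = \lambda x$, (65) gives:

- $\phi(x) = \cosh(\sqrt{\lambda}\,x)$ when $f = 1$;
- $\phi(x) = \sinh(\sqrt{\lambda}\,x)/\sqrt{\lambda}$ when $f = x$.

The function name and grid size are illustrative.

```python
import math

def solve_volterra_convolution(f, k, x_max, n=400):
    """Trapezoidal marching for phi(x) = f(x) + int_0^x k(x - s) phi(s) ds on [0, x_max].

    Returns the grid points and the approximate values of phi there.
    """
    h = x_max / n
    xs = [i * h for i in range(n + 1)]
    phi = [f(xs[0])]                       # the integral vanishes at x = 0
    for i in range(1, n + 1):
        acc = 0.5 * k(xs[i]) * phi[0]
        for j in range(1, i):
            acc += k(xs[i] - xs[j]) * phi[j]
        # phi_i also appears in the last trapezoid term with weight h/2
        phi.append((f(xs[i]) + h * acc) / (1.0 - 0.5 * h * k(0.0)))
    return xs, phi

lam = 4.0
xs, phi = solve_volterra_convolution(lambda x: 1.0, lambda t: lam * t, 1.0)
print(phi[-1], math.cosh(math.sqrt(lam)))
```

The same routine also handles negative $\lambda$. For example, with $\lambda = -1$ and $f = 1$ it should approach $\cos x$, consistent with problem 3 of chapter 2.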

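Example 2. Our next example has the kernel

$$k(x) = \begin{cases} \lambda e^{x} & (x > 0) \\ 0 & (x < 0) \end{cases} \qquad (66)$$

Then

$$K(u) = \lambda \int_0^\infty e^{(1 - u)x}\, dx = \frac{\lambda}{u - 1} \qquad (67)$$

so that

$$M(u) = \frac{\lambda}{u - (\lambda + 1)} \qquad (68)$$

and hence

$$m(x) = \lambda e^{(\lambda + 1)x}. \qquad (69)$$

Thus, using (59), the solution of the integral equation (50) is

$$\phi(x) = f(x) + \lambda \int_0^x f(s)\, e^{(\lambda + 1)(x - s)}\, ds. \qquad (70)$$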

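Example 3. Our last example has the kernel

$$k(x) = \begin{cases} \lambda \sin x & (x > 0) \\ 0 & (x < 0) \end{cases} \qquad (71)$$

Then

$$K(u) = \lambda \int_0^\infty e^{-ux} \sin x\, dx = \frac{\lambda}{u^2 + 1} \qquad (72)$$

and so

$$M(u) = \frac{\lambda}{u^2 + 1 - \lambda} \qquad (73)$$

which yields

$$m(x) = \begin{cases} \dfrac{\lambda}{\sqrt{1 - \lambda}} \sin\left( \sqrt{1 - \lambda}\, x \right) & (\lambda < 1) \\[2ex] \lambda x & (\lambda = 1) \\[2ex] \dfrac{\lambda}{\sqrt{\lambda - 1}} \sinh\left( \sqrt{\lambda - 1}\, x \right) & (\lambda > 1) \end{cases} \qquad (74)$$

on using the appropriate Laplace transform formulae. Hence the solution of the integral equation (50) is given by

$$\phi(x) - f(x) = \begin{cases} \dfrac{\lambda}{\sqrt{1 - \lambda}} \displaystyle\int_0^x f(s) \sin\left\{ \sqrt{1 - \lambda}\, (x - s) \right\} ds & (\lambda < 1) \\[2ex] \displaystyle\int_0^x f(s)\, (x - s)\, ds & (\lambda = 1) \\[2ex] \dfrac{\lambda}{\sqrt{\lambda - 1}} \displaystyle\int_0^x f(s) \sinh\left\{ \sqrt{\lambda - 1}\, (x - s) \right\} ds & (\lambda > 1) \end{cases} \qquad (75)$$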
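The three branches of (74) can be checked in the same way. The sketch below (illustrative names, Python standard library) evaluates (75) with Simpson's rule and compares it with a direct trapezoidal march of (50) for the kernel (71). For $f = 1$ and $\lambda = 2$, (75) reduces to $\phi(x) = 2\cosh x - 1$. For $f = 1$ and $\lambda = \tfrac{1}{2}$ it reduces to $\phi(x) = 2 - \cos(x/\sqrt{2})$.

```python
import math

def m_of_x(x, lam):
    """The function m(x) of (74) for the kernel k(x) = lam * sin x."""
    if lam < 1:
        b = math.sqrt(1 - lam)
        return lam / b * math.sin(b * x)
    if lam == 1:
        return lam * x
    b = math.sqrt(lam - 1)
    return lam / b * math.sinh(b * x)

def phi_from_75(f, x, lam, n=400):
    """phi(x) = f(x) + int_0^x m(x - s) f(s) ds, i.e. (59) with (74), by Simpson's rule."""
    h = x / n
    total = 0.0
    for j in range(n + 1):
        s = j * h
        weight = 1 if j in (0, n) else (4 if j % 2 else 2)
        total += weight * m_of_x(x - s, lam) * f(s)
    return f(x) + total * h / 3

def phi_by_marching(f, lam, x_max, n=400):
    """Independent check: trapezoidal marching on phi = f + lam * int_0^x sin(x - s) phi(s) ds."""
    h = x_max / n
    xs = [i * h for i in range(n + 1)]
    phi = [f(0.0)]
    for i in range(1, n + 1):
        acc = 0.5 * math.sin(xs[i]) * phi[0]
        acc += sum(math.sin(xs[i] - xs[j]) * phi[j] for j in range(1, i))
        phi.append(f(xs[i]) + lam * h * acc)   # k(0) = 0, so no implicit term
    return phi[-1]

f = lambda x: math.exp(-x)
for lam in (0.5, 1.0, 2.0):
    print(lam, phi_from_75(f, 1.0, lam), phi_by_marching(f, lam, 1.0))
```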

3.4 Fredholm equation of the first kind

Here we are concerned with Fredholm equations of the form

$$f(x) = \int_{-\infty}^{\infty} k(x - s)\, \phi(s)\, ds \qquad (76)$$

with an integral of the convolution type on the right-hand side. To solve this equation we take Fourier transforms. On applying the convolution theorem (13) this yields

$$F(u) = \sqrt{2\pi}\, K(u)\Phi(u) \qquad (77)$$

which leads to the formal solution of (76):

$$\phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-ixu} \Phi(u)\, du = \frac{1}{2\pi} \int_{-\infty}^{\infty} \frac{e^{-ixu} F(u)}{K(u)}\, du. \qquad (78)$$

3.4.1 Stieltjes integral equation

An interesting example of a Fredholm equation of the first kind possessing a convolution type integral is obtained by applying Laplace's integral operator

$$\int_0^\infty e^{-xs}\, ds$$

twice. Thus consider the equation

$$g(x) = \lambda \int_0^\infty e^{-xt}\, dt \int_0^\infty e^{-ts} \psi(s)\, ds. \qquad (79)$$

Then, on reversing the order of the integrations, we see that

$$g(x) = \lambda \int_0^\infty \psi(s)\, ds \int_0^\infty e^{-(x + s)t}\, dt = \lambda \int_0^\infty \frac{\psi(s)}{x + s}\, ds \qquad (80)$$

which is known as Stieltjes integral equation.

Now setting

$$x = e^{\xi}, \quad s = e^{\sigma}, \quad e^{\xi/2} g(e^{\xi}) = f(\xi), \quad e^{\sigma/2} \psi(e^{\sigma}) = \phi(\sigma) \qquad (81)$$

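we obtain

$$f(\xi) = \frac{\lambda}{2} \int_{-\infty}^{\infty} \frac{\phi(\sigma)}{\cosh \frac{1}{2}(\xi - \sigma)}\, d\sigma. \qquad (82)$$

This is a Fredholm equation of the first kind with kernel

$$k(\xi) = \frac{\lambda}{2 \cosh \frac{1}{2}\xi}. \qquad (83)$$

To solve (82) we require the Fourier transform of $k(\xi)$, which is given by

$$K(u) = \frac{\lambda}{\sqrt{2\pi}} \int_{-\infty}^{\infty} \frac{e^{iu\xi}}{e^{\xi/2} + e^{-\xi/2}}\, d\xi = \frac{\lambda}{\sqrt{2\pi}} \int_0^\infty \frac{x^{iu - 1/2}}{1 + x}\, dx \qquad (84)$$

on putting $x = e^{\xi}$. But it is well known that

$$\int_0^\infty \frac{x^{a-1}}{1 + x}\, dx = \frac{\pi}{\sin \pi a} \qquad (0 < \operatorname{Re} a < 1) \qquad (85)$$

and so, with $a = iu + \tfrac{1}{2}$, we obtain

$$K(u) = \frac{\lambda \sqrt{\pi/2}}{\sin\{\pi(iu + \frac{1}{2})\}} = \frac{\lambda \sqrt{\pi/2}}{\cos i\pi u} = \frac{\lambda \sqrt{\pi/2}}{\cosh \pi u}. \qquad (86)$$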

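Now, making use of the solution (78) to the equation (76), we see that the solution of our integral equation (82) may be written as

$$\phi(\xi) = \frac{1}{\lambda\pi\sqrt{2\pi}} \int_{-\infty}^{\infty} F(u) \cosh \pi u\; e^{-i\xi u}\, du = \frac{1}{2\pi\lambda} \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} F(u) \left\{ e^{-i(\xi + i\pi)u} + e^{-i(\xi - i\pi)u} \right\} du \qquad (87)$$

where $F(u)$ is the Fourier transform of $f(\xi)$. Thus

$$\phi(\xi) = \frac{1}{2\pi\lambda} \left\{ f(\xi + i\pi) + f(\xi - i\pi) \right\} \qquad (88)$$

and hence the solution to Stieltjes integral equation (80) is

$$\psi(x) = \frac{i}{2\pi\lambda} \left\{ g(xe^{i\pi}) - g(xe^{-i\pi}) \right\}. \qquad (89)$$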

Example. Suppose that

$$g(x) = \begin{cases} \dfrac{\ln(x/a)}{x - a} & (x \neq a) \\[2ex] \dfrac{1}{a} & (x = a) \end{cases} \qquad (90)$$

Then (89) provides the solution

$$\psi(x) = \frac{1}{\lambda(x + a)} \qquad (91)$$

to Stieltjes equation (80).
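Below is a sketch of two numerical checks of this example, with illustrative names:

- The inversion formula (89) is evaluated by continuing $g$ to $xe^{\pm i\pi}$ with the branch $\log(xe^{\pm i\pi}) = \ln x \pm i\pi$. This is an assumption about how (89) is to be read.
- The Stieltjes transform (80) of (91) is computed by quadrature after the substitution $s = e^\sigma$ of (81), to recover (90).

```python
import cmath
import math

def g(x, a):
    """Right-hand side (90) of the Stieltjes equation (80)."""
    return 1.0 / a if x == a else math.log(x / a) / (x - a)

def g_continued(x, theta, a):
    """g continued to z = x e^{i theta}, using the branch log z = ln x + i theta."""
    z = x * cmath.exp(1j * theta)
    return (math.log(x / a) + 1j * theta) / (z - a)

def psi_from_89(x, lam, a):
    """Inversion formula (89): psi(x) = i/(2 pi lam) {g(x e^{i pi}) - g(x e^{-i pi})}."""
    return 1j / (2 * math.pi * lam) * (g_continued(x, math.pi, a) - g_continued(x, -math.pi, a))

def stieltjes(psi, x, lam, lo=-40.0, hi=40.0, n=8000):
    """lam * int_0^inf psi(s)/(x + s) ds, via s = e^sigma and the trapezoidal rule in sigma."""
    h = (hi - lo) / n
    total = 0.0
    for j in range(n + 1):
        s = math.exp(lo + j * h)
        weight = 0.5 if j in (0, n) else 1.0
        total += weight * psi(s) / (x + s) * s   # ds = s d(sigma)
    return lam * h * total

lam, a = 0.7, 2.0
for x in (0.3, 2.0, 7.5):
    print(x, psi_from_89(x, lam, a), 1 / (lam * (x + a)),
          stieltjes(lambda s: 1 / (lam * (s + a)), x, lam), g(x, a))
```

The substitution $s = e^\sigma$ turns the slowly decaying integrand into one that decays exponentially at both ends. This is why a plain trapezoidal rule is enough here.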

3.5 Volterra equation of the first kind

We now discuss Volterra equations of the form

$$f(x) = \int_0^x k(x - s)\, \phi(s)\, ds \qquad (x > 0), \qquad (92)$$

where $f(0) = 0$ and the integral on the right-hand side is again of the convolution type. This can be obtained from the Fredholm equation (76) of the first kind, examined in the previous section, by taking $f(x) = 0$, $k(x) = 0$ and $\phi(x) = 0$ for $x < 0$.

To solve equation (92) we introduce the Laplace transforms $F(u)$, $K(u)$ and $\Phi(u)$ of $f(x)$, $k(x)$ and $\phi(x)$. Then, employing the convolution theorem for Laplace transforms, we obtain

$$F(u) = K(u)\Phi(u) \qquad (93)$$

and so

$$\phi(x) = L^{-1}\left\{ \frac{F(u)}{K(u)} \right\} \qquad (94)$$

where $L^{-1}$ denotes the inverse of the Laplace transform.

Example. Consider the integral equation

$$f(x) = \int_0^x J_0(x - s)\, \phi(s)\, ds \qquad (95)$$

with a kernel of the convolution type given by

$$k(x) = J_0(x) \qquad (96)$$

where $J_0(x)$ is the zero order Bessel function satisfying $J_0(0) = 1$. We can solve this equation by taking Laplace transforms. Since

$$L\{J_0(x)\} = \frac{1}{\sqrt{u^2 + 1}} \qquad (97)$$

it follows that

$$\Phi(u) = \sqrt{u^2 + 1}\, F(u) \qquad (98)$$

using (93). For the special case

$$f(x) = \sin x \qquad (99)$$

we have

$$F(u) = \frac{1}{u^2 + 1} \qquad (100)$$

and then

$$\Phi(u) = \frac{1}{\sqrt{u^2 + 1}}. \qquad (101)$$

We see at once from (97) that for this case

$$\phi(x) = J_0(x) \qquad (102)$$

which produces the interesting formula

$$\int_0^x J_0(x - s)\, J_0(s)\, ds = \sin x. \qquad (103)$$
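Formula (103) is easy to test numerically. The sketch below sums the standard power series for $J_0$, which is adequate for the moderate arguments used here. A library routine such as `scipy.special.j0` would be the usual choice, but the series keeps the example dependency-free. The convolution is then integrated with Simpson's rule. The function names are illustrative.

```python
import math

def bessel_j0(x, terms=60):
    """J_0(x) from its power series sum_k (-1)^k (x/2)^(2k) / (k!)^2 (fine for moderate |x|)."""
    q = (x / 2.0) ** 2
    term, total = 1.0, 0.0
    for k in range(terms):
        total += term
        term *= -q / (k + 1) ** 2
    return total

def convolution_j0(x, n=400):
    """int_0^x J_0(x - s) J_0(s) ds by the composite Simpson rule (n even)."""
    h = x / n
    total = 0.0
    for j in range(n + 1):
        s = j * h
        weight = 1 if j in (0, n) else (4 if j % 2 else 2)
        total += weight * bessel_j0(x - s) * bessel_j0(s)
    return total * h / 3

for x in (0.5, 1.0, 3.0, 5.0):
    print(x, convolution_j0(x), math.sin(x))
```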

3.5.1 Abel's integral equation

The equation solved by Abel has the form

f(x) = [(x-s)-aq,(S)dS (0<a<1) (104)

with f(O) = 0, which is a Volterra integral equation of the first kind

with a singular kernel of the convolution type given by

k(x) = x-a.

(105)

This equation is of c<?nsiderable historical importance since for

a =! it describes the tautochroRe problem introduced briefly in

section 1.1.

38 Integral equations of the convolution type

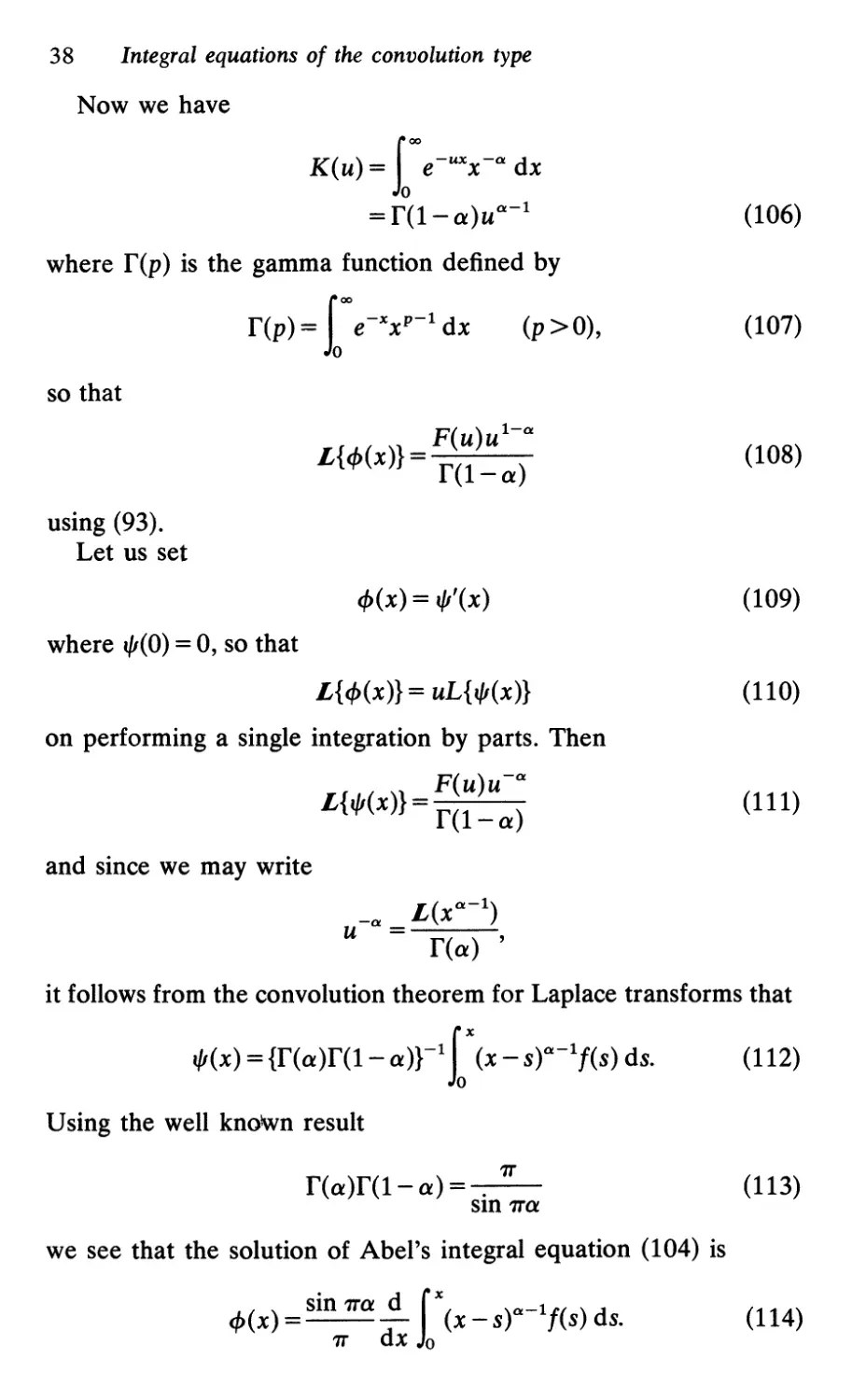

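Now we have

$$K(u) = \int_0^\infty e^{-ux} x^{-\alpha}\, dx = \Gamma(1 - \alpha)\, u^{\alpha - 1} \qquad (106)$$

where $\Gamma(p)$ is the gamma function defined by

$$\Gamma(p) = \int_0^\infty e^{-x} x^{p-1}\, dx \qquad (p > 0), \qquad (107)$$

so that

$$L\{\phi(x)\} = \frac{F(u)\, u^{1 - \alpha}}{\Gamma(1 - \alpha)} \qquad (108)$$

using (93).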

Let us set

$$\phi(x) = \psi'(x) \qquad (109)$$

where $\psi(0) = 0$, so that

$$L\{\phi(x)\} = u\, L\{\psi(x)\} \qquad (110)$$

on performing a single integration by parts. Then

$$L\{\psi(x)\} = \frac{F(u)\, u^{-\alpha}}{\Gamma(1 - \alpha)} \qquad (111)$$

and since we may write

$$u^{-\alpha} = \frac{L\{x^{\alpha - 1}\}}{\Gamma(\alpha)},$$

it follows from the convolution theorem for Laplace transforms that

$$\psi(x) = \{\Gamma(\alpha)\Gamma(1 - \alpha)\}^{-1} \int_0^x (x - s)^{\alpha - 1} f(s)\, ds. \qquad (112)$$

Using the well known result

$$\Gamma(\alpha)\Gamma(1 - \alpha) = \frac{\pi}{\sin \pi\alpha} \qquad (113)$$

we see that the solution of Abel's integral equation (104) is

$$\phi(x) = \frac{\sin \pi\alpha}{\pi} \frac{d}{dx} \int_0^x (x - s)^{\alpha - 1} f(s)\, ds. \qquad (114)$$

For the particular case $\alpha = \tfrac{1}{2}$, corresponding to the tautochrone problem, this solution simplifies to

$$\phi(x) = \frac{1}{\pi} \frac{d}{dx} \int_0^x \frac{f(s)}{(x - s)^{1/2}}\, ds. \qquad (115)$$
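Below is a numerical sketch of (112) to (114), assuming the illustrative data $f(x) = x$, for which (114) gives $\phi(x) = \sin(\pi\alpha)\,x^\alpha/(\pi\alpha)$. Three implementation choices are made here; none of them is part of the text:

- the substitutions $w = (x - s)^\alpha$ and $w = (x - s)^{1-\alpha}$ remove the endpoint singularities before Simpson's rule is applied;
- the derivative in (114) is taken by a central difference;
- the last function recomputes the right-hand side of (104) from $\phi$ as an independent check.

```python
import math

def simpson(g, a, b, n=2000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = g(a) + g(b)
    for j in range(1, n):
        total += (4 if j % 2 else 2) * g(a + j * h)
    return total * h / 3

def abel_psi(f, x, alpha):
    """psi(x) from (112)-(113): (sin(pi alpha)/pi) * int_0^x (x - s)^(alpha - 1) f(s) ds.

    With w = (x - s)^alpha the integral becomes (1/alpha) int_0^{x^alpha} f(x - w^(1/alpha)) dw,
    whose integrand is no longer singular at s = x.
    """
    if x <= 0:
        return 0.0
    integrand = lambda w: f(max(0.0, x - w ** (1 / alpha)))   # clamp rounding below zero
    return math.sin(math.pi * alpha) / math.pi * simpson(integrand, 0.0, x ** alpha) / alpha

def abel_solution(f, x, alpha, dx=1e-4):
    """phi(x) = psi'(x), equation (114), by a central difference (requires x > dx)."""
    return (abel_psi(f, x + dx, alpha) - abel_psi(f, x - dx, alpha)) / (2 * dx)

def abel_forward(phi, x, alpha):
    """Right-hand side of (104) for a given phi, using w = (x - s)^(1 - alpha)."""
    beta = 1 - alpha
    integrand = lambda w: phi(max(0.0, x - w ** (1 / beta)))
    return simpson(integrand, 0.0, x ** beta) / beta

for alpha in (0.5, 0.3):
    x = 0.8
    exact = math.sin(math.pi * alpha) / (math.pi * alpha) * x ** alpha
    print(alpha, abel_solution(lambda s: s, x, alpha), exact)
```

The forward check converges more slowly than the inversion, because $\phi$ itself has a power-law singularity at $s = 0$.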

3.6 Fox's integral equation

We seek the solution of the Fredholm integral equation of the second kind having the form

$$\phi(x) = f(x) + \int_0^\infty k(xs)\, \phi(s)\, ds \qquad (0 < x < \infty) \qquad (116)$$

named after Fox. This can be achieved by employing the Mellin transforms $\Phi(u)$, $F(u)$ and $K(u)$ of $\phi(x)$, $f(x)$ and $k(x)$ respectively, defined by (7).

Now we have shown in section 3.1 that $K(u)\Phi(1 - u)$ is the Mellin transform of

$$\int_0^\infty k(xs)\, \phi(s)\, ds$$

and so we see that

$$\Phi(u) = F(u) + K(u)\Phi(1 - u) \qquad (117)$$

on taking the Mellin transforms of both sides of (116). Also, replacing $u$ by $1 - u$, we have

$$\Phi(1 - u) = F(1 - u) + K(1 - u)\Phi(u) \qquad (118)$$

which enables us to write

$$\Phi(u) = \frac{F(u) + K(u) F(1 - u)}{1 - K(u) K(1 - u)}. \qquad (119)$$

Thus we have obtained a solution of Fox's integral equation (116), provided we can derive $\phi(x)$ from its Mellin transform $\Phi(u)$.

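Example. Let us take as an example

$$k(x) = \lambda \sqrt{\frac{2}{\pi}}\, \sin x. \qquad (120)$$

Then the Mellin transform of $k(x)$ is given by

$$K(u) = \lambda \sqrt{\frac{2}{\pi}} \int_0^\infty x^{u-1} \sin x\, dx = \lambda \sqrt{\frac{2}{\pi}}\, \Gamma(u) \sin \frac{\pi u}{2} \qquad (121)$$

and

$$K(u) K(1 - u) = \frac{2\lambda^2}{\pi}\, \Gamma(u)\Gamma(1 - u) \sin \frac{\pi u}{2} \cos \frac{\pi u}{2} = \frac{\lambda^2}{\pi}\, \Gamma(u)\Gamma(1 - u) \sin \pi u = \lambda^2 \qquad (122)$$

using (113). Hence, provided $\lambda^2 \neq 1$, we see that

$$\Phi(u) = \frac{F(u)}{1 - \lambda^2} + \frac{\lambda}{1 - \lambda^2} \sqrt{\frac{2}{\pi}}\, \Gamma(u) \sin \frac{\pi u}{2}\, F(1 - u) \qquad (123)$$

making use of (119). But by the convolution theorem for Mellin transforms established in section 3.1, we know that $\Gamma(u) \sin(\pi u/2)\, F(1 - u)$ is the Mellin transform of $\int_0^\infty \sin(xs)\, f(s)\, ds$. So the solution of Fox's integral equation with kernel given by (120) is

$$\phi(x) = \frac{f(x)}{1 - \lambda^2} + \frac{\lambda}{1 - \lambda^2} \sqrt{\frac{2}{\pi}} \int_0^\infty \sin(xs)\, f(s)\, ds \qquad (0 < x < \infty) \qquad (124)$$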
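The key step (122) and the resulting solution (124) can both be checked in a few lines of Python:

- `math.gamma` confirms $K(u)K(1 - u) = \lambda^2$ for real $0 < u < 1$.
- For the illustrative choice $f(x) = e^{-x}$, (124) is substituted back into (116). This uses the standard integrals $\int_0^\infty e^{-s}\sin xs\,ds = x/(1 + x^2)$ and $\int_0^\infty s\sin xs/(1 + s^2)\,ds = \tfrac{1}{2}\pi e^{-x}$ ($x > 0$).

This is a sketch, and the function names are hypothetical.

```python
import math

def K_mellin(u, lam):
    """Mellin transform (121) of k(x) = lam * sqrt(2/pi) * sin x, used here for real 0 < u < 1."""
    return lam * math.sqrt(2 / math.pi) * math.gamma(u) * math.sin(math.pi * u / 2)

def fox_phi(x, lam):
    """Solution (124) for the illustrative choice f(x) = e^{-x} (requires lam**2 != 1)."""
    c = lam * math.sqrt(2 / math.pi)
    return (math.exp(-x) + c * x / (1 + x * x)) / (1 - lam ** 2)

def fox_residual(x, lam):
    """phi(x) - f(x) - lam sqrt(2/pi) int_0^inf sin(xs) phi(s) ds for phi = fox_phi, f = e^{-x}.

    The sine integral is taken in closed form from the two standard integrals quoted
    above, so the residual should vanish up to rounding.
    """
    c = lam * math.sqrt(2 / math.pi)
    sine_integral = (x / (1 + x * x) + c * (math.pi / 2) * math.exp(-x)) / (1 - lam ** 2)
    return fox_phi(x, lam) - math.exp(-x) - c * sine_integral

for lam in (0.3, 0.9, 2.0):
    products = [K_mellin(u, lam) * K_mellin(1 - u, lam) for u in (0.1, 0.25, 0.5, 0.8)]
    print(lam ** 2, products, [fox_residual(x, lam) for x in (0.2, 1.0, 3.0)])
```

The residual cancels precisely because $c^2\pi/2 = \lambda^2$, which is (122) in disguise.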

This result can be verified directly by using Fourier's reciprocal sine formulae (1.2) and (1.3) in the form

$$f(x) = \frac{2}{\pi} \int_0^\infty \sin xs\, ds \int_0^\infty \sin st\, f(t)\, dt \qquad (0 < x < \infty). \qquad (125)$$

Problems

1. Solve the Lalesco-Picard equation

$$\phi(x) = \cos \mu x + \lambda \int_{-\infty}^{\infty} e^{-|x - s|} \phi(s)\, ds \qquad (\lambda < \tfrac{1}{2}).$$

2. Find the solutions to the Volterra equations of the second kind

$$\phi(x) = f(x) \pm \int_0^x (x - s)\, \phi(s)\, ds$$

when (i) $f(x) = 1$, (ii) $f(x) = x$, using the general solution (65), and verify that they agree with the solutions obtained by solving the equivalent differential equations (see problem 3 at the end of chapter 2).

3. Find the solutions to the Volterra equation of the second kind

$$\phi(x) = f(x) + \lambda \int_0^x \sin(x - s)\, \phi(s)\, ds$$

when (i) $f(x) = x$ and $\lambda = 1$, (ii) $f(x) = e^{-x}$ and $\lambda = 2$, using the general solution (75), and verify that they agree with the solutions obtained by solving the equivalent differential equations (see problem 4 at the end of chapter 2).

4. If $F(u)$ is the Fourier transform of $f(x)$, show that the transform of $f''(x)$ is $-u^2 F(u)$ provided $f, f' \to 0$ as $x \to \pm\infty$. Hence show that

$$f(x) = \int_{-\infty}^{\infty} e^{-|x - s|} \phi(s)\, ds \qquad (-\infty < x < \infty)$$

has the solution

$$\phi(x) = \tfrac{1}{2} \{ f(x) - f''(x) \}.$$

5. If $F(u)$ is the Laplace transform of $f(x)$, show that the transform of $f'(x)$ is $uF(u) - f(0)$ for $u > a$ provided $f(x)e^{-ax} \to 0$ as $x \to \infty$. Hence find the solution of the Volterra equation of the first kind

$$f(x) = \int_0^x e^{x - s} \phi(s)\, ds$$

where $f(0) = 0$.

6. Use Fourier's sine formulae to verify that the solution of Fox's integral equation

$$\phi(x) = f(x) + \lambda \int_0^\infty \sin xs\, \phi(s)\, ds \qquad (0 < x < \infty)$$

is given by (124).

7. Use the method of Mellin transforms to solve Fox's integral equation when the kernel is

$$k(x) = \lambda \cos x.$$

Verify the correctness of your solution by using Fourier's cosine formulae.

4

Method of successive approximations

4.1 Neumann series

A valuable method for solving integral equations of the second kind is based on an iterative procedure which yields a sequence of approximations leading to an infinite series solution associated with the names of Liouville and Neumann. It is sometimes called the Liouville-Neumann series but more often it is called the Neumann series.

Let us first examine the Fredholm equation

$$\phi(x) = f(x) + \lambda \int_a^b K(x, s)\, \phi(s)\, ds \qquad (a \le x \le b) \qquad (1)$$

and consider the set of successive approximations to the solution $\phi(x)$ given by

$$\begin{aligned} \phi_0(x) &= \phi^{(0)}(x) \\ \phi_1(x) &= \phi^{(0)}(x) + \lambda \phi^{(1)}(x) \\ \phi_2(x) &= \phi^{(0)}(x) + \lambda \phi^{(1)}(x) + \lambda^2 \phi^{(2)}(x) \end{aligned} \qquad (2)$$

and so on, the $N$th approximation being the sum

$$\phi_N(x) = \sum_{n=0}^{N} \lambda^n \phi^{(n)}(x) \qquad (N = 0, 1, 2, \ldots) \qquad (3)$$

where

$$\phi^{(0)}(x) = f(x), \qquad \phi^{(1)}(x) = \int_a^b K(x, s)\, \phi^{(0)}(s)\, ds, \qquad \phi^{(2)}(x) = \int_a^b K(x, s)\, \phi^{(1)}(s)\, ds, \qquad (4)$$

and in general

$$\phi^{(n)}(x) = \int_a^b K(x, s)\, \phi^{(n-1)}(s)\, ds \qquad (n \ge 1). \qquad (5)$$

We see that the sequence of approximations is generated by an iterative process of successive substitutions on the right-hand side of (1).

Suppose that $f(x)$ and $K(x, s)$ are continuous functions in the range of definition, so that they are bounded and we may write

$$|f(x)| \le m \quad (a \le x \le b), \qquad |K(x, s)| \le M \quad (a \le x \le b,\ a \le s \le b) \qquad (6)$$

where $m$ and $M$ are positive constants. Then

$$\begin{aligned} |\phi^{(0)}(x)| &= |f(x)| \le m, \\ |\phi^{(1)}(x)| &\le \int_a^b |\phi^{(0)}(s)|\, |K(x, s)|\, ds \le mM(b - a), \\ |\phi^{(2)}(x)| &\le \int_a^b |\phi^{(1)}(s)|\, |K(x, s)|\, ds \le mM^2(b - a)^2, \end{aligned}$$

and in general

$$|\phi^{(n)}(x)| \le mM^n(b - a)^n. \qquad (7)$$

Hence

$$\left| \sum_{n=0}^{N} \lambda^n \phi^{(n)}(x) \right| \le \sum_{n=0}^{N} |\lambda|^n |\phi^{(n)}(x)| \le m \sum_{n=0}^{\infty} |\lambda|^n M^n (b - a)^n \qquad (8)$$

and so $\sum_{n=0}^{\infty} \lambda^n \phi^{(n)}(x)$ is absolutely and uniformly convergent in $a \le x \le b$ if $p = |\lambda| M(b - a) < 1$, that is if

$$|\lambda| < \frac{1}{M(b - a)} \qquad (9)$$

since then the series is dominated by the convergent geometric series

$$m \sum_{n=0}^{\infty} p^n = \frac{m}{1 - p}. \qquad (10)$$

When condition (9) is satisfied,

$$\phi(x) = \sum_{n=0}^{\infty} \lambda^n \phi^{(n)}(x) \qquad (11)$$

is a continuous solution of the integral equation (1).

The error made in replacing the exact solution $\phi$ by the $N$th approximation $\phi_N$ is given by

$$r_N(x) = \phi(x) - \phi_N(x) = \sum_{n=N+1}^{\infty} \lambda^n \phi^{(n)}(x). \qquad (12)$$

It is readily seen that

$$|r_N(x)| \le m \sum_{n=N+1}^{\infty} p^n = \frac{m p^{N+1}}{1 - p} \qquad (13)$$

and so $|r_N(x)| \to 0$ as $N \to \infty$ if $p < 1$.
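The iteration (3) to (5) translates directly into code. The sketch below is an illustration, not the text's own procedure. It replaces each integral by the trapezoidal rule on a fixed grid and accumulates the partial sums $\phi_N$.

It is applied to the kernel $K(x, s) = xs$ on $[0, 1]$ with $f = 1$ and $\lambda = \tfrac{1}{2}$. Then $M = 1$ and $p = \tfrac{1}{2} < 1$, and the exact solution is $\phi(x) = 1 + 0.3x$ (compare (36) in section 4.2). The function name and grid size are hypothetical.

```python
def neumann_fredholm(f, K, lam, a, b, n_terms, n_grid=100):
    """Partial sum phi_N = sum_{n=0}^{N} lam^n phi^(n) of (3)-(5) on a uniform grid.

    Each iterate phi^(n)(x) = int_a^b K(x, s) phi^(n-1)(s) ds is approximated with
    the composite trapezoidal rule on the same grid.
    """
    h = (b - a) / n_grid
    xs = [a + i * h for i in range(n_grid + 1)]
    w = [h * (0.5 if i in (0, n_grid) else 1.0) for i in range(n_grid + 1)]
    Kmat = [[K(x, s) * ws for s, ws in zip(xs, w)] for x in xs]   # quadrature-weighted kernel
    term = [f(x) for x in xs]            # phi^(0) = f
    total = term[:]                      # phi_0
    for n in range(1, n_terms + 1):
        term = [sum(k * t for k, t in zip(row, term)) for row in Kmat]
        total = [tot + lam ** n * t for tot, t in zip(total, term)]
    return xs, total

lam = 0.5
xs, approx = neumann_fredholm(lambda x: 1.0, lambda x, s: x * s, lam, 0.0, 1.0, n_terms=20)
print(approx[-1], 1 + 3 * lam * xs[-1] / (2 * (3 - lam)))
```

With only three terms the error at $x = 1$ is about $1.4 \times 10^{-3}$, comfortably inside the bound (13), $mp^{4}/(1 - p) = 0.125$.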

Now let us turn our attention to the Volterra equation

$$\phi(x) = f(x) + \lambda \int_a^x K(x, s)\, \phi(s)\, ds. \qquad (14)$$

Then the previous analysis holds with $K(x, s) = 0$ for $s > x$. But we have further that

$$\begin{aligned} |\phi^{(0)}(x)| &= |f(x)| \le m, \\ |\phi^{(1)}(x)| &\le \int_a^x |\phi^{(0)}(s)|\, |K(x, s)|\, ds \le mM \int_a^x ds = mM(x - a), \\ |\phi^{(2)}(x)| &\le \int_a^x |\phi^{(1)}(s)|\, |K(x, s)|\, ds \le mM^2 \int_a^x (s - a)\, ds = \frac{mM^2 (x - a)^2}{2!}, \\ |\phi^{(3)}(x)| &\le \int_a^x |\phi^{(2)}(s)|\, |K(x, s)|\, ds \le \frac{mM^3}{2!} \int_a^x (s - a)^2\, ds = \frac{mM^3 (x - a)^3}{3!} \end{aligned}$$

and in general, assuming that

$$|\phi^{(n-1)}(x)| \le \frac{mM^{n-1} (x - a)^{n-1}}{(n - 1)!}$$

and using the principle of induction, we have

$$|\phi^{(n)}(x)| \le \frac{mM^n}{(n - 1)!} \int_a^x (s - a)^{n-1}\, ds = \frac{mM^n (x - a)^n}{n!}. \qquad (15)$$

Hence

$$\left| \sum_{n=0}^{N} \lambda^n \phi^{(n)}(x) \right| \le m \sum_{n=0}^{\infty} \frac{|\lambda|^n M^n (b - a)^n}{n!} \qquad (16)$$

and so $\sum_{n=0}^{\infty} \lambda^n \phi^{(n)}(x)$ is absolutely and uniformly convergent in $a \le x \le b$ for all values of $\lambda$, since it is dominated by

$$m \sum_{n=0}^{\infty} \frac{|\lambda|^n M^n (b - a)^n}{n!} = m \exp\{ |\lambda| M (b - a) \}. \qquad (17)$$

Thus the Neumann series (11) converges for all values of $\lambda$ in the case of the Volterra equation (14), whereas it converges only for sufficiently small values of $\lambda$ in the case of the Fredholm equation (1).
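In contrast with the Fredholm case, the bound (17) holds for every $\lambda$. The sketch below illustrates this on the simplest Volterra kernel, $K(x, s) = 1$ for $s < x$, with $f = 1$ on $[0, 1]$. This is an illustrative choice, for which $\phi(x) = e^{\lambda x}$. It takes $\lambda = 5$, well beyond the Fredholm condition (9). The iterates are built by cumulative trapezoidal integration, and the function name is hypothetical.

```python
import math

def neumann_volterra_unit_kernel(lam, x_max=1.0, n_terms=60, n_grid=1000):
    """Neumann partial sum at x = x_max for phi(x) = 1 + lam * int_0^x phi(s) ds.

    Here K(x, s) = 1 for s < x, so phi^(n)(x) = int_0^x phi^(n-1)(s) ds; the iterates
    are built by cumulative trapezoidal integration. The exact solution is exp(lam * x).
    """
    h = x_max / n_grid
    term = [1.0] * (n_grid + 1)          # phi^(0) = f = 1
    total = term[:]
    for n in range(1, n_terms + 1):
        new = [0.0]
        for i in range(1, n_grid + 1):
            new.append(new[-1] + 0.5 * h * (term[i - 1] + term[i]))
        term = new
        total = [tot + lam ** n * t for tot, t in zip(total, term)]
    return total[-1]

# lam = 5 violates (9) on [0, 1] with M = 1, yet the Volterra Neumann series converges.
print(neumann_volterra_unit_kernel(5.0), math.exp(5.0))
```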

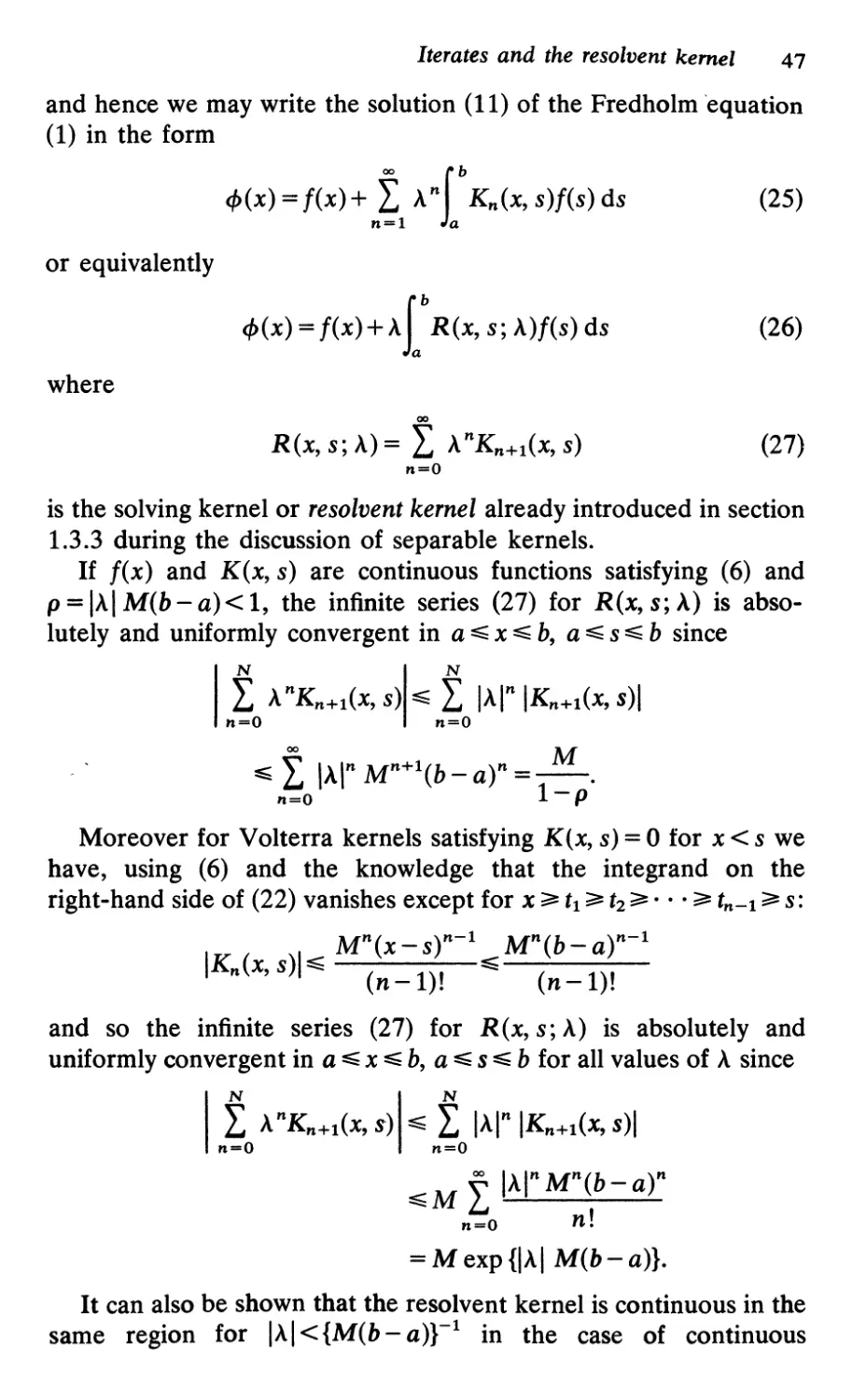

4.2 Iterates and the resolvent kernel

In the previous section we obtained solutions to the Fredholm and Volterra equations of the second kind in the form of the infinite series (11). It is convenient to express these solutions in terms of iterated kernels defined by

$$K_1(x, s) = K(x, s) \qquad (18)$$

$$K_n(x, s) = \int_a^b K(x, t)\, K_{n-1}(t, s)\, dt \qquad (n \ge 2) \qquad (19)$$

so that

$$K_2(x, s) = \int_a^b K(x, t_1)\, K(t_1, s)\, dt_1,$$

$$K_3(x, s) = \int_a^b K(x, t_1)\, K_2(t_1, s)\, dt_1 \qquad (20)$$

$$= \int_a^b \int_a^b K(x, t_1)\, K(t_1, t_2)\, K(t_2, s)\, dt_1\, dt_2 \qquad (21)$$

and in general

$$K_n(x, s) = \int_a^b \cdots \int_a^b K(x, t_1)\, K(t_1, t_2) \cdots K(t_{n-2}, t_{n-1})\, K(t_{n-1}, s)\, dt_1 \cdots dt_{n-1}. \qquad (22)$$

It follows at once that the iterated kernels satisfy

$$K_n(x, s) = \int_a^b K_p(x, t)\, K_q(t, s)\, dt \qquad (23)$$

for any $p$ and $q$ with $p + q = n$.

Now

$$\phi^{(n)}(x) = \int_a^b K_n(x, s)\, f(s)\, ds \qquad (24)$$

and hence we may write the solution (11) of the Fredholm equation (1) in the form

$$\phi(x) = f(x) + \sum_{n=1}^{\infty} \lambda^n \int_a^b K_n(x, s)\, f(s)\, ds \qquad (25)$$

or equivalently

$$\phi(x) = f(x) + \lambda \int_a^b R(x, s; \lambda)\, f(s)\, ds \qquad (26)$$

where

$$R(x, s; \lambda) = \sum_{n=0}^{\infty} \lambda^n K_{n+1}(x, s) \qquad (27)$$

is the solving kernel or resolvent kernel already introduced in section 1.3.3 during the discussion of separable kernels.

If $f(x)$ and $K(x, s)$ are continuous functions satisfying (6) and $p = |\lambda| M(b - a) < 1$, the infinite series (27) for $R(x, s; \lambda)$ is absolutely and uniformly convergent in $a \le x \le b$, $a \le s \le b$, since

$$\left| \sum_{n=0}^{N} \lambda^n K_{n+1}(x, s) \right| \le \sum_{n=0}^{N} |\lambda|^n |K_{n+1}(x, s)| \le \sum_{n=0}^{\infty} |\lambda|^n M^{n+1} (b - a)^n = \frac{M}{1 - p}.$$

Moreover, for Volterra kernels satisfying $K(x, s) = 0$ for $x < s$, we have, using (6) and the knowledge that the integrand on the right-hand side of (22) vanishes except for $x \ge t_1 \ge t_2 \ge \cdots \ge t_{n-1} \ge s$,

$$|K_n(x, s)| \le \frac{M^n (x - s)^{n-1}}{(n - 1)!} \le \frac{M^n (b - a)^{n-1}}{(n - 1)!}$$

and so the infinite series (27) for $R(x, s; \lambda)$ is absolutely and uniformly convergent in $a \le x \le b$, $a \le s \le b$ for all values of $\lambda$, since

$$\left| \sum_{n=0}^{N} \lambda^n K_{n+1}(x, s) \right| \le \sum_{n=0}^{N} |\lambda|^n |K_{n+1}(x, s)| \le M \sum_{n=0}^{\infty} \frac{|\lambda|^n M^n (b - a)^n}{n!} = M \exp\{ |\lambda| M (b - a) \}.$$

It can also be shown that the resolvent kernel is continuous in the same region for $|\lambda| < \{M(b - a)\}^{-1}$ in the case of continuous Fredholm kernels, and for all $\lambda$ in the case of continuous Volterra kernels.

Example 1. As a first example of the Neumann series we consider the Fredholm equation of the second kind

$$\phi(x) = f(x) + \lambda \int_a^b u(x)\, v(s)\, \phi(s)\, ds \qquad (28)$$

with separable kernel $K(x, s) = u(x)v(s)$. This equation was discussed previously in section 1.3.3, where an exact solution (1.30) in closed analytical form was obtained.

Now we have

$$\begin{aligned} K_1(x, s) &= K(x, s) = u(x)\, v(s), \\ K_2(x, s) &= \int_a^b K_1(x, t)\, K_1(t, s)\, dt = u(x)\, v(s) \int_a^b v(t)\, u(t)\, dt = \alpha\, u(x)\, v(s), \end{aligned}$$

where

$$\alpha = \int_a^b v(t)\, u(t)\, dt,$$

and if we assume that

$$K_n(x, s) = \alpha^{n-1} u(x)\, v(s)$$

then

$$K_{n+1}(x, s) = \int_a^b K_1(x, t)\, K_n(t, s)\, dt = \alpha^{n-1} u(x)\, v(s) \int_a^b v(t)\, u(t)\, dt = \alpha^n u(x)\, v(s) \qquad (29)$$

establishing the general form of the iterated kernel $K_{n+1}(x, s)$ by the principle of induction.

Hence the resolvent kernel is

$$R(x, s; \lambda) = \sum_{n=0}^{\infty} \lambda^n K_{n+1}(x, s) = u(x)\, v(s) \sum_{n=0}^{\infty} (\lambda\alpha)^n \qquad (30)$$

$$= \frac{u(x)\, v(s)}{1 - \lambda\alpha} \qquad (31)$$

provided $|\lambda\alpha| < 1$. This is in accordance with the exact solution (1.32) derived previously for all $\lambda \neq \alpha^{-1}$.

The homogeneous equation corresponding to (28) is

$$\phi(x) = \lambda \int_a^b u(x)\, v(s)\, \phi(s)\, ds.$$

It possesses one characteristic value $\lambda_1 = \alpha^{-1}$, and we see that the Neumann expansion (30) converges to (31) for $|\lambda| < |\lambda_1|$.

Example 2. We now consider the Fredholm equation

$$\phi(x) = 1 + \lambda \int_0^1 xs\, \phi(s)\, ds \qquad (0 \le x \le 1) \qquad (32)$$

with $f(x) = 1$ and separable kernel $K(x, s) = xs$. This was examined earlier as example 1 of section 1.3.3.

We have

$$\alpha = \int_0^1 t^2\, dt = \tfrac{1}{3} \qquad (33)$$

so that

$$K_{n+1}(x, s) = \frac{xs}{3^n}. \qquad (34)$$

Hence the resolvent kernel is

$$R(x, s; \lambda) = \sum_{n=0}^{\infty} \lambda^n K_{n+1}(x, s) = xs \sum_{n=0}^{\infty} \left( \frac{\lambda}{3} \right)^n = \frac{xs}{1 - \lambda/3} \qquad (35)$$

provided $|\lambda| < 3$, this being less severe than the condition given by $p < 1$.

Thus, using (26), we see that

$$\phi(x) = f(x) + \lambda \int_0^1 R(x, s; \lambda)\, f(s)\, ds = 1 + \frac{\lambda x}{1 - \lambda/3} \int_0^1 s\, ds = 1 + \frac{3\lambda x}{2(3 - \lambda)} \qquad (36)$$

which is in accordance with the exact solution (1.38) derived previously for all $\lambda \neq 3$.

Example 3. Lastly we discuss the Volterra equation with kernel

$$K(x, s) = \begin{cases} e^{x - s} & (x > s) \\ 0 & (s > x) \end{cases} \qquad (37)$$

This kernel is of the convolution type and was discussed before as example 2 of section 3.3, where an exact solution in closed analytical form was derived.

Now

$$\begin{aligned} K_2(x, s) &= \int_s^x K(x, t)\, K(t, s)\, dt = \int_s^x e^{x - t} e^{t - s}\, dt = e^{x - s} \int_s^x dt = e^{x - s}(x - s) \qquad (x > s), \\ K_3(x, s) &= \int_s^x K(x, t)\, K_2(t, s)\, dt = \int_s^x e^{x - t} e^{t - s} (t - s)\, dt = e^{x - s} \int_s^x (t - s)\, dt = e^{x - s} \frac{(x - s)^2}{2!} \qquad (x > s) \end{aligned}$$

and in general, assuming that

$$K_n(x, s) = e^{x - s} \frac{(x - s)^{n-1}}{(n - 1)!} \qquad (x > s)$$

we have

$$K_{n+1}(x, s) = \int_s^x K(x, t)\, K_n(t, s)\, dt = \int_s^x e^{x - t} e^{t - s} \frac{(t - s)^{n-1}}{(n - 1)!}\, dt = e^{x - s} \int_s^x \frac{(t - s)^{n-1}}{(n - 1)!}\, dt = e^{x - s} \frac{(x - s)^n}{n!} \qquad (x > s) \qquad (38)$$

which establishes the general form of the iterated kernel by the principle of induction.

Hence the resolvent kernel given by (27) is

$$R(x, s; \lambda) = \sum_{n=0}^{\infty} \frac{\lambda^n (x - s)^n}{n!}\, e^{x - s} = e^{(\lambda + 1)(x - s)} \qquad (x > s) \qquad (39)$$

for all $\lambda$, which is in agreement with the solution (70) obtained in section 3.3, since the resolvent kernel vanishes for $x < s$.
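The recursion (19) can also be carried out numerically. The sketch below tabulates $K_n(\cdot, s)$ for the kernel (37) on $[s, x]$, applies (19) repeatedly with the trapezoidal rule, and compares the result with the closed form (38). The function name and grid size are illustrative assumptions.

```python
import math

def iterated_kernel(x, s, n, grid=200):
    """K_n(x, s) for the Volterra kernel (37), K(x, s) = e^{x-s} for x > s, by repeated use of (19).

    Only t in [s, x] contributes, so K_m(., s) is tabulated on a uniform grid over
    [s, x] and each step is done with the trapezoidal rule.
    """
    h = (x - s) / grid
    ts = [s + i * h for i in range(grid + 1)]
    prev = [math.exp(t - s) for t in ts]               # K_1(t, s)
    for _ in range(n - 1):
        cur = [0.0]                                      # K_m(s, s) = 0 for m >= 2
        for i in range(1, grid + 1):
            vals = [math.exp(ts[i] - ts[j]) * prev[j] for j in range(i + 1)]
            cur.append(h * (sum(vals) - 0.5 * (vals[0] + vals[-1])))
        prev = cur
    return prev[-1]

x, s = 1.5, 0.2
for n in range(1, 6):
    exact = math.exp(x - s) * (x - s) ** (n - 1) / math.factorial(n - 1)   # (38)
    print(n, iterated_kernel(x, s, n), exact)
```

Because the exponentials cancel inside each integral, the trapezoidal rule is exact for $n \le 3$ and only slightly in error afterwards.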

Problems

1. Use the method of successive approximations to solve

$$\phi(x) = 1 + \lambda \int_0^1 e^{x + s} \phi(s)\, ds$$

and verify that your solution agrees with the solution to problem 3 at the end of chapter 1 for $\lambda < 2/(e^2 - 1)$.

2. Use the method of successive approximations to solve

$$\phi(x) = x + \lambda \int_0^\pi \sin nx\, \sin ns\, \phi(s)\, ds$$

where $n$ is an integer, and verify that your solution agrees with the solution to problem 5 at the end of chapter 1 for $\lambda < 2/\pi$.

3. Obtain the solution in closed analytical form of

$$\phi(x) = f(x) + \int_0^\pi K(x, s)\, \phi(s)\, ds$$

where

$$K(x, s) = \sum_{n=1}^{\infty} a_n \sin nx\, \cos ns$$

and

$$\sum_{n=1}^{\infty} |a_n| < \infty,$$

using the method of successive approximations.

4. Use the method of successive approximations to solve

$$\phi(x) = 1 + \lambda \int_0^x \phi(s)\, ds,$$

verifying that your solution for $\lambda = 1$ agrees with that obtained for problem 1(i) at the end of chapter 2.

5. Use the method of successive approximations to obtain the resolvent kernel for

$$\phi(x) = f(x) + \lambda \int_0^x (x - s)\, \phi(s)\, ds.$$

Verify that this agrees with the solutions to problem 3 at the end of chapter 2.

6. Use the method of successive approximations to show that the resolvent kernel for

$$\phi(x) = 1 + \lambda \int_0^x xs\, \phi(s)\, ds$$

is given by

$$R(x, s; \lambda) = xs \sum_{n=0}^{\infty} \left( \frac{\lambda}{3} \right)^n \frac{(x^3 - s^3)^n}{n!}$$

and hence solve the integral equation.

5

Integral equations with singular kernels

5.1 Generalization to higher dimensions

So far in this book we have been concerned solely with integral equations involving an unknown function $\phi(x)$ of a single real variable $x$. However, it is also of interest to consider integral equations in higher dimensions. To this end we suppose that $V$ is a region of an $n$-dimensional space and let $M$, $N$ denote points in the region $V$. Then an integral equation of the second kind takes the form

$$\phi(M) = f(M) + \int_V K(M,N)\,\phi(N)\,dV_N \tag{1}$$

where $K(M,N)$ is the kernel, $f(M)$ is a given function, $\phi(M)$ is the function which we require to find, and the integration in (1) is over all the points $N$ of the $n$-dimensional region $V$.

Also an integral equation of the first kind takes the form

$$f(M) = \int_V K(M,N)\,\phi(N)\,dV_N. \tag{2}$$

A common type of singular kernel is given by

$$K(M,N) = \frac{F(M,N)}{r^{\alpha}} \tag{3}$$

where $F(M,N)$ is a bounded function and $r$ is the distance between the points $M$ and $N$ in the $n$-dimensional space. A kernel of this type is called polar, and it becomes unbounded as $r \to 0$. It is said to give rise to an integral equation with a weak singularity if $0 < \alpha < n$. The polar kernel (3) is square integrable, i.e.

$$\int_V\int_V |K(M,N)|^2\,dV_M\,dV_N < \infty, \tag{4}$$

when $\alpha$ is restricted further to the range $0 < \alpha < n/2$, as we have shown for the one-dimensional case ($n = 1$) in section 1.4.
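The thresholds $n$ and $n/2$ come from the behaviour of the integrand near $r = 0$. As a sketch, assume that $V$ is bounded with diameter $d$ and that $|F(M,N)| \le C$. The constants $C$ and $d$, and the symbol $\omega_n$ for the surface area of the unit sphere in $n$ dimensions, are introduced here only for this estimate. Using polar coordinates centered at $M$,

$$\int_V |K(M,N)|^2\,dV_N \le C^2\int_{r<d} r^{-2\alpha}\,dV_N = C^2\,\omega_n\int_0^d r^{\,n-1-2\alpha}\,dr = \frac{C^2\,\omega_n\,d^{\,n-2\alpha}}{n-2\alpha}, \qquad 2\alpha < n,$$

while the radial integral diverges when $2\alpha \ge n$. Integrating this bound over $M \in V$ gives (4). The same computation with $r^{-\alpha}$ in place of $r^{-2\alpha}$ shows that $K$ itself is integrable when $\alpha < n$. For $n = 1$ on $V = [0,1]$ with $F \equiv 1$, the double integral can be evaluated exactly:

$$\int_0^1\!\!\int_0^1 \frac{ds\,dx}{|x-s|^{2\alpha}} = \frac{2}{(1-2\alpha)(2-2\alpha)}, \qquad 0 < \alpha < \tfrac12.$$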


5.2 Green's functions in two and three dimensions

We now consider the linear second-order partial differential equation

$$L\psi = \eta \tag{5}$$

and begin by investigating the two-dimensional case, for which

$$L = \frac{\partial^2}{\partial x^2} + \frac{\partial^2}{\partial y^2} + q(x,y). \tag{6}$$

Then the corresponding Green's function $G(x,y;s,t)$ satisfies

$$LG(x,y;s,t) = \delta(x-s)\,\delta(y-t). \tag{7}$$

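Integrating over the interior of a circle $\gamma$ of radius $\varepsilon$ centered at the point with coordinates $(s,t)$, we have

$$\iint LG(x,y;s,t)\,dx\,dy = 1 \tag{8}$$

since the integral of the product of the $\delta$ functions is unity. Applying the divergence theorem to the Laplacian part of $L$, and assuming that $G$ is integrable near $(s,t)$ so that the term $qG$ contributes nothing as $\varepsilon \to 0$, this leads to

$$\lim_{\varepsilon\to 0}\oint_{\gamma}\frac{\partial G}{\partial\rho}\,d\sigma = 1 \tag{9}$$

where $d\sigma$ represents an element of arc length and

$$\rho^2 = (x-s)^2 + (y-t)^2. \tag{10}$$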

We now see that

$$2\pi\rho\,\frac{\partial G}{\partial\rho} \to 1 \tag{11}$$

as $\rho \to 0$, and so

$$G(x,y;s,t) = \frac{1}{2\pi}\ln\rho + g(x,y;s,t) \tag{12}$$

where

$$Lg(x,y;s,t) = -\frac{1}{2\pi}\,q(x,y)\ln\rho \tag{13}$$

and $g$ is chosen so that $G$ satisfies prescribed boundary conditions.
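The limit (9) and the behaviour (11) can be checked numerically for the singular part of (12). The sketch below places the source at the origin for illustration and uses hypothetical helper names. It estimates the integral of $\partial G_0/\partial\rho$ around circles of several radii, where $G_0 = (1/2\pi)\ln\rho$. It also confirms that a five-point Laplacian of $G_0$ is essentially zero away from the source. The $q$ term is left out because, as noted above, it does not contribute in the limit.

```python
import math


def g0(x, y, s=0.0, t=0.0):
    """Singular part (1/(2*pi)) ln(rho) of the Green's function (12), source at (s, t)."""
    return math.log(math.hypot(x - s, y - t)) / (2 * math.pi)


def flux_through_circle(eps, n=400, h=1e-6, s=0.0, t=0.0):
    """Approximate the integral of d(g0)/d(rho) d(sigma) around the circle of
    radius eps centered at (s, t), as in (9)."""
    total = 0.0
    dsigma = eps * 2 * math.pi / n  # arc-length element for n equal steps in angle
    for k in range(n):
        a = 2 * math.pi * k / n
        c, d = math.cos(a), math.sin(a)
        # Radial derivative by a central difference along the outward normal.
        outer = g0(s + (eps + h) * c, t + (eps + h) * d, s, t)
        inner = g0(s + (eps - h) * c, t + (eps - h) * d, s, t)
        total += (outer - inner) / (2 * h) * dsigma
    return total


def laplacian(f, x, y, h=1e-3):
    """Five-point finite-difference approximation to the Laplacian of f at (x, y)."""
    return (f(x + h, y) + f(x - h, y) + f(x, y + h) + f(x, y - h) - 4 * f(x, y)) / h**2


if __name__ == "__main__":
    for eps in (1e-3, 1e-1, 1.0):
        print(eps, flux_through_circle(eps))  # close to 1 for every radius
    print(laplacian(g0, 0.3, 0.4))  # close to 0 away from the source
```

The flux comes out the same for every radius, because $\ln\rho$ is harmonic away from the source. This is why the normalization in (11) fixes the coefficient $1/2\pi$ in (12).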


In the three-dimensional case

$$L = \nabla^2 + q(\mathbf r) \tag{14}$$

and the Green's function satisfies

$$LG(\mathbf r,\mathbf s) = \delta(\mathbf r - \mathbf s) \tag{15}$$

where $\mathbf r$ and $\mathbf s$ are the position vectors of the points with coordinates $(x,y,z)$ and $(s,t,u)$ respectively.

Integrating over the region contained by a sphere $S$ of radius $\varepsilon$ centered at the point with position vector $\mathbf s$, we obtain

$$\int_S LG(\mathbf r,\mathbf s)\,d\mathbf r = 1 \tag{16}$$

giving

$$\lim_{\varepsilon\to 0}\oint_S \frac{\partial G}{\partial R}\,dS = 1 \tag{17}$$

where the integration in (17) is over the surface of the sphere $S$ and

$$R^2 = |\mathbf r - \mathbf s|^2 = (x-s)^2 + (y-t)^2 + (z-u)^2. \tag{18}$$

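It follows that

$$4\pi R^2\,\frac{\partial G}{\partial R} \to 1 \tag{19}$$

as $R \to 0$, and hence the Green's function has the form

$$G(\mathbf r,\mathbf s) = -\frac{1}{4\pi R} + g(\mathbf r,\mathbf s) \tag{20}$$

where

$$Lg(\mathbf r,\mathbf s) = \frac{q(\mathbf r)}{4\pi R} \tag{21}$$

and we choose $g$ so that $G$ satisfies given boundary conditions.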
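As a consistency check on (19) to (21), one can apply $L$ to the singular part of (20) using the standard identity $\nabla^2(1/R) = -4\pi\,\delta(\mathbf r - \mathbf s)$:

$$\frac{\partial}{\partial R}\left(-\frac{1}{4\pi R}\right) = \frac{1}{4\pi R^2}, \qquad L\left(-\frac{1}{4\pi R}\right) = -\frac{1}{4\pi}\nabla^2\frac{1}{R} - \frac{q(\mathbf r)}{4\pi R} = \delta(\mathbf r - \mathbf s) - \frac{q(\mathbf r)}{4\pi R}.$$

The first result reproduces (19) exactly. The second shows that $LG = \delta(\mathbf r - \mathbf s)$ holds precisely when $g$ satisfies (21).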

5.3 Dirichlet's problem

The problem named after Dirichlet is concerned with determining a function $\psi$ which has prescribed values $f$ over the boundary $\Gamma$ of a certain simply connected region $R$ and which is harmonic, i.e. satisfies Laplace's equation, at all interior points of $R$.


We begin by considering the two-dimensional Dirichlet problem, in which we have a plane region $R$ bounded by a closed contour $\gamma$ with a continuously turning tangent. Let us suppose that $\psi(x,y)$ is the harmonic function satisfying

$$\nabla^2\psi = \frac{\partial^2\psi}{\partial x^2} + \frac{\partial^2\psi}{\partial y^2} = 0 \tag{22}$$

in $R$ and having the prescribed values $f(s,t)$ at all points $(s,t)$ of $\gamma$. We now express $\psi$ as the real part of an analytic function $F(z)$ of the complex variable $z = x + iy$ by putting

$$\psi(x,y) = \operatorname{Re} F(z) \tag{23}$$

and look for a solution in the form of the Cauchy-type integral

$$F(z) = \frac{1}{2\pi i}\oint_{\gamma}\frac{\mu(\zeta)}{\zeta - z}\,d\zeta \tag{24}$$

where the density $\mu(\zeta)$ is a real function of the complex variable $\zeta$, and the contour $\gamma$ is directed in the anticlockwise sense. Next we allow $z$ to approach a point $w$ on the boundary curve $\gamma$ from the interior of $R$. In the limit we obtain

$$F(w) = \tfrac12\mu(w) + \frac{1}{2\pi i}\,P\oint_{\gamma}\frac{\mu(\zeta)}{\zeta - w}\,d\zeta \tag{25}$$

where the integral on the right-hand side of (25) is taken as its Cauchy principal value (indicated by the prefix $P$):

$$P\oint_{\gamma}\frac{\mu(\zeta)}{\zeta - w}\,d\zeta = \lim_{\varepsilon\to 0}\int_{\gamma_\varepsilon}\frac{\mu(\zeta)}{\zeta - w}\,d\zeta \tag{26}$$

where $\gamma_\varepsilon$ is the part of $\gamma$ outside a circle of radius $\varepsilon$ centered at the point $w$, and the term $\tfrac12\mu(w)$ is the contribution arising from the singularity at $\zeta = w$.
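The jump relation (25) can be illustrated numerically. In the sketch below the contour $\gamma$ is taken to be the unit circle, and the density is the illustrative choice $\mu(\zeta) = \operatorname{Re}\zeta = \cos\theta$. A residue calculation gives $F(z) = z/2$ inside the circle for this density, so $F(w) = w/2$ on the boundary. To evaluate the principal value, the sketch uses the standard splitting $P\oint \mu(\zeta)/(\zeta-w)\,d\zeta = \oint (\mu(\zeta)-\mu(w))/(\zeta-w)\,d\zeta + \pi i\,\mu(w)$, which is valid for a smooth contour. The remaining smooth integral is handled with the trapezoidal rule on equally spaced angles. The function names and the numbers of nodes are assumptions made for illustration.

```python
import cmath
import math


def mu(zeta):
    """Illustrative real density on the unit circle: mu = Re(zeta) = cos(theta)."""
    return zeta.real


def cauchy_integral(z, n=20000):
    """Trapezoidal-rule value of (24): (1/(2*pi*i)) * integral of mu(zeta)/(zeta - z) dzeta
    around the unit circle, traversed anticlockwise (z must lie off the circle)."""
    h = 2 * math.pi / n
    total = 0j
    for k in range(n):
        zeta = cmath.exp(1j * k * h)
        dzeta = 1j * zeta * h  # dzeta = i e^{i theta} dtheta
        total += mu(zeta) / (zeta - z) * dzeta
    return total / (2j * math.pi)


def boundary_value(w, n=2000):
    """Right-hand side of (25) at a point w on the unit circle."""
    theta0 = cmath.phase(w)
    h = 2 * math.pi / n
    smooth = 0j
    for k in range(n):
        # Nodes are offset by h/2 from w, so zeta never coincides with w.
        zeta = cmath.exp(1j * (theta0 + (k + 0.5) * h))
        dzeta = 1j * zeta * h
        smooth += (mu(zeta) - mu(w)) / (zeta - w) * dzeta
    principal_value = smooth + math.pi * 1j * mu(w)
    return 0.5 * mu(w) + principal_value / (2j * math.pi)


if __name__ == "__main__":
    w = cmath.exp(0.7j)  # a sample boundary point
    print(boundary_value(w), w / 2)  # agree to rounding error
    for r in (0.9, 0.99, 0.999):
        # Distance between interior values and the boundary value shrinks as r -> 1.
        print(r, abs(cauchy_integral(r * w) - boundary_value(w)))
```

The interior values $F(rw)$ approach the right-hand side of (25) as $r \to 1$. The subtraction of $\mu(w)$ keeps every quadrature node away from the singular point, so no special treatment of $\zeta = w$ is needed.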