Text

Artificial Intelligence

Structures and Strategies for

Complex Problem Solving

Third Edition

George F. Luger

William A. Stubblefield

Addison Wesley Longman, Inc.

Harlow, England • Reading, Massachusetts • Menlo Park,

California • Berkeley, California • Don Mills, Ontario

Amsterdam • Bonn • Sydney • Tokyo • Milan • Mexico City

© Addison Wesley Longman, Inc. 1998

Addison Wesley Longman, Inc.

One Jacob Way

Reading

MA 01867-3999

USA

and Associated Companies throughout the World.

The rights of George Luger and William Stubblefield to be identified as authors of

this Work have been asserted by them in accordance with the Copyright, Designs

and Patents Act 1988.

All rights reserved. No part of this publication may be reproduced, stored in a

retrieval system, or transmitted in any form or by any means, electronic,

mechanical, photocopying, recording or otherwise, without either the prior written

permission of the publisher or a licence permitting restricted copying in the United

Kingdom issued by the Copyright Licensing Agency Ltd, 90 Tottenham Court

Road, London W1P 9HE.

The programs in this book have been included for their instructional value. They

have been tested with care but are not guaranteed for any particular purpose. The

publisher does not offer any warranties or representations nor does it accept any

liabilities with respect to the programs.

Cover designed by odB Design & Communication, Reading, England

Cover figure by Thomas Barrow, University of New Mexico

Typeset by the authors

Printed and bound in the United States of America

First printed 1997

ISBN 0-805-31196-3

British Library Cataloguing-in-Pubtication Data

A catalogue record for this book is available from the British Library

For my wife, Kathleen, and our children Sarah, David, and Peter,

For my parents, George Fletcher Luger and Loretta Maloney Luger.

Si quid est in me ingenii, judices .. .

Cicero, Pro Archia Poeta

GFL

For my parents, William Frank Stubblefield and Maria Christina Stubblefield.

And for my wife, Merry Christina.

She is far more precious than jewels.

The heart of her husband trusts in her,

and he will have no lack of gain.

Proverbs 31: 10-11

WAS

PREFACE

What we have to learn to do

we learn by doing...

—Aristotle, Ethics

New Colors on an Old Canvas

No one expects a computer science textbook to age as well as Katharine Hepburn, Sean

Connery, or the music of Thelonious Monk. We were nonetheless surprised at just how

soon it became necessary for us to begin work on this third edition. Some sections of the

second edition have endured remarkably well, including topics such as logic, search,

knowledge representation, production systems and the programming techniques devel-

developed in LISP and PROLOG. These remain central to the practice of artificial intelligence,

and required a relatively small effort to bring them up to date and to correct the few errors

and omissions that had appeared in the second edition (through purely random processes

that imply no failure of the authors). However, many chapters, including those on learning,

neural networks, and reasoning with uncertainty, clearly required, and received, extensive

reworking. Other topics, such as emergent computation, case-based reasoning and model-

based problem solving, that were treated cursorily in the first two editions, have grown

sufficiently in importance to merit a more complete discussion. These needed changes are

evidence of the continued vitality of the field of artificial intelligence.

As the scope of the project grew, we were sustained by the support of our publisher,

editors, friends, colleagues, and, most of all, by our readers, who have given our creation

such a long and productive life. We were also sustained by our own excitement at the

opportunity afforded: Scientists are rarely encouraged to look up from their own, narrow

research interests and chart the larger trajectories of their chosen field. Our publisher and

readers have asked us to do just that. We are grateful to them for this opportunity.

Although artificial intelligence, like most engineering disciplines, must justify itself

to the world of commerce by providing solutions to practical problems, we entered the

PREFACE VII

field for the same reasons as most of our colleagues and students: we want to understand

the mechanisms that enable thought. We reject the rather provincial notion that intelli-

intelligence is an exclusive ability of humans, and believe that we can effectively explore the

space of possible intelligences by designing and evaluating intelligent machines. Although

the course of our careers has given us no cause to change these commitments, we have

arrived at a greater appreciation for the scope, complexity and audacity of this undertak-

undertaking. In the preface to our first and second editions, we outlined three assertions that we

believed distinguished our approach to teaching artificial intelligence. It is reasonable, in

writing a preface to this third edition, to return to these themes and see how they have

endured as our field has grown.

The first of these goals was to "unify the diverse branches of AI through a detailed

discussion of its theoretical foundations." At the time we adopted that goal, it seemed that

the main problem was reconciling researchers who emphasized the careful statement and

analysis of formal theories of intelligence (the neats) with those who believed that intelli-

intelligence itself was some sort of grand hack that could be best approached in an application-

driven, ad hoc manner (the scruffies). That simple dichotomy has proven far too simple. In

contemporary AI, debates between neats and scruffies have given way to dozens of other

debates between proponents of physical symbol systems and students of neural networks,

between logicians and designers of artificial life forms that evolve in a most illogical man-

manner, between architects of expert systems and case-based reasoners, and between, those

who believe artificial intelligence has already been achieved and those who believe it will

never happen. Our original image of AI as frontier science where outlaws, prospectors,

wild-eyed prairie prophets and other dreamers were being slowly tamed by the disciplines

of formalism and empiricism has given way to a different metaphor: that of a large, cha-

chaotic but mostly peaceful city, where orderly bourgeois neighborhoods draw their vitality

from wonderful, chaotic bohemian districts. Over the years we have devoted to the differ-

different editions of this book, a compelling picture of the architecture of intelligence has

started to emerge from this city's art and industry.

Intelligence is too complex to be described by any single theory; instead, researchers

are constructing a hierarchy of theories that characterize it at multiple levels of abstraction.

At the lowest levels of this hierarchy, neural networks, genetic algorithms and other forms

of emergent computation have enabled us to understand the processes of adaptation, per-

perception, embodiment and interaction with the physical world that must underlie any form

of intelligent activity. Through some still partially understood resolution, this chaotic pop-

population of blind and primitive actors gives rise to the cooler patterns of logical inference.

Working at this higher level, logicians have built on Aristotle's gift, tracing the outlines of

deduction, abduction, induction, truth-maintenance, and countless other modes and man-

manners of reason. Even higher up the ladder, designers of expert systems, intelligent agents

and natural language understanding programs have come to recognize the role of social

processes in creating, transmitting, and sustaining knowledge. In this third edition, we

have attempted to touch on all levels of this developing hierarchy.

The second commitment we made in the earlier editions was to the central position of

"advanced representational formalisms and search techniques" in AI methodology. This is,

perhaps, the most controversial aspect of our first two editions and of much early work in

AI, with many workers in emergent computation questioning whether symbolic reasoning

VIII PREFACE

and referential semantics have any role at all in thought. Although the idea of representa-

representation as giving names to things has been challenged by the implicit representation provided

by the emerging patterns of a neural network or artificial life, we believe that an under-

understanding of representation and search remains essential to any serious practitioner of artifi-

artificial intelligence. Not only do such techniques as knowledge engineering, case-based

reasoning, theorem proving, and planning depend directly on symbol-based models of rea-

reason, but also the skills acquired through the study of representation and search are invalu-

invaluable tools for analyzing such aspects of non-symbolic AI as the expressive power of a

neural network or the progression of candidate problem solutions through the fitness land-

landscape of a genetic algorithm.

The third commitment we made at the beginning of this book's life cycle, to "place

artificial intelligence within the context of empirical science," has remained unchanged. To

quote from the preface to the second edition, we continue to believe that AI is not

... some strange aberration from the scientific tradition, but... part of a general quest for

knowledge about, and the understanding of intelligence itself. Furthermore, our AI pro-

programming tools, along with the exploratory programming methodology ... are ideal for

exploring an environment. Our tools give us a medium for both understanding and ques-

questions. We come to appreciate and know phenomena constructively, that is, by progressive

approximation.

Thus we see each design and program as an experiment with nature: we propose a

representation, we generate a search algorithm, and then we question the adequacy of our

characterization to account for part of the phenomenon of intelligence. And the natural

world gives a response to our query. Our experiment can be deconstructed, revised,

extended, and run again. Our model can be refined, our understanding extended.

New in This Edition

We revised the introductory and summary sections of this book to recognize the growing

importance of agent-based problem solving as an application for AI techniques. In discus-

discussions of the foundations of AI we recognize intelligence as physically embodied and situ-

situated in a natural and social world. There are four entirely new areas presented in this

edition: an extension of knowledge-intensive problem solving to the case-based and

model-based approaches, a chapter presenting models for reasoning under conditions of

ignorance and uncertainty, an extension of the natural language chapter to include stochas-

stochastic approaches to language understanding, and finally, two new chapters devoted to neural

and evolutionary models of learning.

Besides our previous analysis of data-driven and goal driven rule-based systems,

Chapter 6 now contains case-based and model-based reasoning systems. The chapter con-

concludes with a section relating the strengths and weaknesses of each of these approaches to

knowledge-intensive problem solving.

Chapter 7 describes reasoning with uncertain or incomplete information. A number of

important approaches to this problem are presented, including Bayesian reasoning, belief

networks, the Dempster-Shafer model, the Stanford certainty factor algebra, and causal

PREFACE IX

models. Techniques for truth maintenance in nonmonotonic situations are also presented,

as well as reasoning with minimal models and logic-based abduction.

Chapter 11, presenting issues in natural language understanding, now includes a sec-

section on stochastic models for language comprehension. The presentation includes Markov

models, CART trees, mutual information clustering, and statistic-based parsing. The chap-

chapter closes with several examples applying the theoretical approaches presented to data-

databases and other query systems.

Machine learning, currently a very important research topic in the AI community, is

the third major addition to this edition. The ability to learn must be part of any system that

would claim to possess general intelligence. Learning is also an important component of

practical AI applications, such as expert systems. The learning models presented in

Chapter 13 include explicitly represented knowledge where information is encoded in a

symbol system and learning takes place through algorithmic manipulation, or search, of

these structures. We present the sub-symbolic or connectionist approaches to learning in

Chapter 14. In a neural net, for instance, information is implicit in the organization and

weights on a set of connected processors, and learning is a rearrangement and modifica-

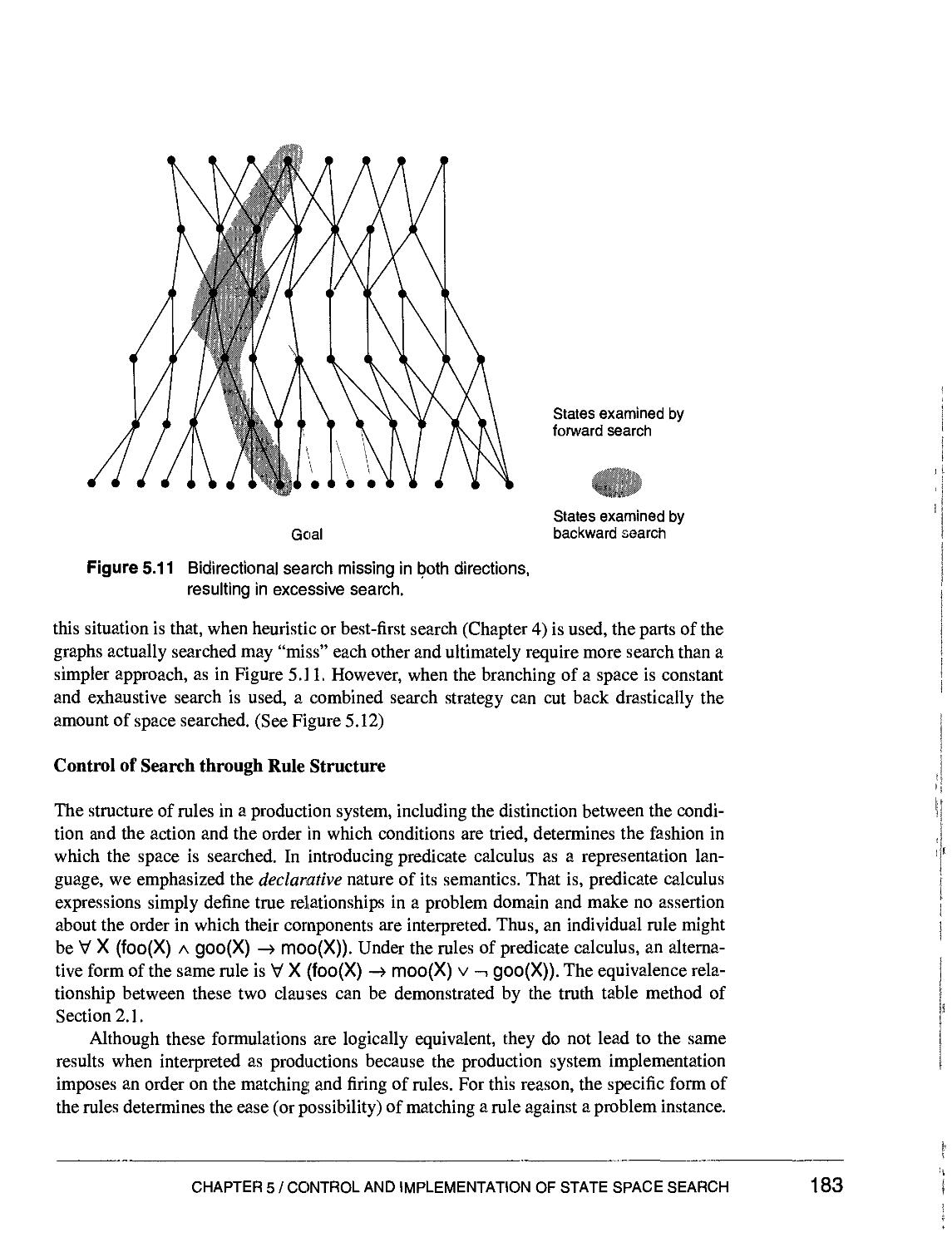

modification of the overall structure of the system. We introduce genetic algorithms and evolution-

evolutionary models of learning in Chapter 15, where learning is cast as an emerging and adaptive

process. We compare and contrast the directions and results of learning in Chapter 16,

where we also return to the deeper questions about the nature of intelligence and the possi-

possibility of intelligent machines that were posed in Chapter 1.

The Contents

Chapter 1 introduces artificial intelligence, beginning with a brief history of attempts to

understand mind and intelligence in philosophy, psychology, and other areas of research.

In a very important sense, AI is an old science, tracing its roots back at least to Aristotle.

An appreciation of this background is essential for an understanding of the issues

addressed in modern research. We also present an overview of some of the important

application areas in AI. Our goal in Chapter 1 is to provide both background and a

motivation for the theory and applications that follow.

Chapters 2, 3, 4, and 5 (Part II) introduce the research tools for AI problem solving.

These include the predicate calculus to describe the essential features of a domain

(Chapter 2), search to reason about these descriptions (Chapter 3) and the algorithms and

data structures used to implement search. In Chapters 4 and 5, we discuss the essential role

of heuristics in focusing and constraining search-based problem solving. We also present a

number of architectures, including the blackboard and production system, for building

these search algorithms.

Chapters 6, 7, and 8 make up Part III of the book: representations for

knowledge-based problem solving. In Chapter 6 we present the rule-based expert system

along with case-based and model-based reasoning systems. These models for problem

solving are presented as a natural evolution of the material in the first five chapters: using a

PREFACE

production system of predicate calculus expressions to orchestrate a graph search. We end

the chapter with an analysis of the strengths and weaknesses of each of these approaches.

Chapter 7 presents models for reasoning with uncertainty as well as unreliable infor-

information. We discuss Bayesian models, belief networks, the Dempster-Shafer approach,

causal models, and the Stanford certainty algebra. Chapter 7 also contains algorithms for

truth maintenance, reasoning with minimum models, and logic-based abduction.

Chapter 8 presents AI techniques for modeling semantic meaning, with a particular

focus on natural language applications. We begin with a discussion of semantic networks

and extend this model to include conceptual dependency theory, conceptual graphs,

frames, and scripts. Class hierarchies and inheritance are important representation tools;

we discuss both the benefits and difficulties of implementing inheritance systems for real-

realistic taxonomies. This material is strengthened by an in-depth examination of a particular

formalism, conceptual graphs. This discussion emphasizes the epistemological issues

involved in representing knowledge and shows how these issues are addressed in a modern

representation language. In Chapter 11, we show how conceptual graphs can be used to

implement a natural language database front end.

Part IV presents AI languages. These languages are first compared with each other

and with traditional programming languages to give an appreciation of the AI approach to

problem solving. Chapter 9 covers PROLOG, and Chapter 10, LISP. We demonstrate these

languages as tools for AI problem solving by building on the search and representation

techniques of the earlier chapters, including breadth-first, depth-first, and best-first search

algorithms. We implement these search techniques in a problem-independent fashion so

they may later be extended to form shells for search in rule-based expert systems, seman-

semantic network and frame systems, as well as in other applications.

Part V, Chapters 11 through 15, continues our presentation of important AI applica-

application areas. Chapter 11 presents natural language understanding. Our traditional approach

to language understanding, exemplified by many of the semantic structures presented in

Chapter 8, is complemented with stochastic models. These include Markov models,

CART trees, mutual information clustering, and statistic-based parsing. The chapter con-

concludes with examples applying these techniques to query systems.

Theorem proving, often referred to as automated reasoning, is one of the oldest areas

of AI research. In Chapter 12, we discuss the first programs in this area, including the

Logic Theorist and the General Problem Solver. The primary focus of the chapter is binary

resolution proof procedures, especially resolution refutations. More advanced inferencing

with hyper-resolution and paramodulation is also discussed. Finally, we describe the PRO-

PROLOG interpreter as a Horn clause inferencing system, and compare PROLOG computing

to full logic programming.

Chapters 13 through 15 are an extensive presentation of issues in machine learning. In

Chapter 13 we offer a detailed look at algorithms for symbol-based machine learning, a

fruitful area of research spawning a number of different problems and solution

approaches. The learning algorithms vary in their goals, the training data considered, the

learning strategies, and the knowledge representations they employ. Symbol-based learn-

learning includes induction, concept learning, version space search, and ID3. The role of induc-

inductive bias is considered, generalizations from patterns of data, as well as the effective use of

PREFACE XI

knowledge to learn from a single example in explanation-based learning. Category learn-

learning, or conceptual clustering, is presented with unsupervised learning.

In Chapter 14, we present neural networks, often referred to as sub-symbolic or con-

nectionist models of learning. In a neural net, for instance, information is implicit in the

organization and weights on a set of connected processors, and learning involves a re-

rearrangement and modification of the overall structure of the system. We present a number

of connectionist architectures, including perceptron learning, backpropagation, and coun-

terpropagation. We demonstrate examples of Kohonen, Grossberg, and Hebbian networks.

We also present associative learning and attractor models, including Hopfield networks.

We introduce genetic algorithms and evolutionary models of learning in Chapter 15.

In these models, learning is cast as an emerging and adaptive process. After several exam-

examples of problem solutions based on genetic algorithms, we introduce the application of

genetic techniques to more general problem solvers. These include classifier systems and

genetic programming. We then describe society-based learning with examples from artifi-

artificial life, or a-life, research. We conclude the chapter with an example of emergent compu-

computation from research at the Santa Fe Institute. We compare and contrast the three

approaches we present to machine learning (symbol-based, connectionist, social and

emergent) in a subsection of Chapter 16.

Finally, Chapter 16 serves as an epilogue for the book. It introduces the discipline of

cognitive science, addresses contemporary challenges to AI, discusses AI's current

limitations, and examines what we feel is its exciting future.

Using This Book

Artificial intelligence is a big field; consequently, this is a big book. Although it would

require more than a single semester to cover all of the material in the text, we have

designed it so that a number of paths may be taken through the material. By selecting

subsets of the material, we have used this text for single semester and full year (two

semester) courses.

We assume that most students will have had introductory courses in discrete

mathematics, including predicate calculus and graph theory. If this is not true the

instructor should spend more time on these concepts in the sections at the beginning of the

text B.1, 3.1). We also assume that students have had courses in data structures including

trees, graphs, and recursion-based search using stacks, queues, and priority queues. If they

have not, they should spend more time on the beginning sections of Chapters 3, 4, and 5.

In a one semester course, we go quickly through the first two parts of the book. With

this preparation, students are able to appreciate the material in Part III. We then consider

the PROLOG and LISP in Part IV and require students to build many of the representation

and search techniques of the earlier sections. Alternatively, one of the languages, PRO-

PROLOG, for example, can be introduced early in the course and be used to test out the data

structures and search techniques as they are encountered. We feel the meta-interpreters

presented in the language chapters are very helpful for building rule-based and other

knowledge-intensive problem solvers.

XII PREFACE

In a two semester course, we are able to cover the application areas of Part V, espe-

especially the machine learning chapters, in appropriate detail. We also expect a much more

detailed programming project from students. We think that it is very important in the sec-

second semester for students to revisit many of the primary sources in the AI literature. It is

crucial for students to see both where we are, as well as how we got here, to have an appre-

appreciation of the future promise of our work. We use a collected set of readings for this pur-

purpose, Computation and Intelligence (Luger 1995).

The algorithms of our book are described using a Pascal-like pseudo-code. This nota-

notation uses the control structures of Pascal along with English descriptions of the tests and

operations. We have added two useful constructs to the Pascal control structures. The first

is a modified case statement that, rather than comparing the value of a variable with con-

constant case labels, as in standard Pascal, lets each item be labeled with an arbitrary boolean

test. The case evaluates these tests in order until one of them is true and then perfonns the

associated action; all other actions are ignored. Those familiar with LISP will note that

this has the same semantics as the LISP cond statement.

The other addition to the language is a return statement which takes one argument

and can appear anywhere within a procedure or function. When the return is encountered,

it causes the program to immediately exit the function, returning its argument as a result.

Other than these modifications we used Pascal structure, with a reliance on the English

descriptions, to make the algorithms clear.

Supplemental Material Available via the Internet

The PROLOG and LISP code in the book as well as a public domain C-PROLOG

interpreter are available via ftp and www. To retrieve software: ftp aw.com and log in as

"anonymous," using your e-mail address as the password. Change directories by typing:

cd aw/luger. View the "readme" file (get README) for current ftp status. File names are

also available using the UNIX "Is" or the DOS "dir" command. Using ftp and de-archiving

files can get complicated. Instructions vary for Macintosh, DOS, or UNIX files. Consult

your local wizard if you have questions, www sites include those for Addison-Wesley at

www.aw.com/cseng/ and www.awl-he.com/computing. George Luger's web site is

www.cs.unm.edu/CS_Dept/faculty/homepage/luger.html and e-mail is luger@cs.unm.edu.

Bill Stubblefield's e-mail is wastubb@sandia.gov.

Acknowledgments

First, we would like to thank the many reviewers that have helped us develop our three

editions. Their comments often suggested important additions of material and focus.

These include Dennis Bahler, Skona Brittain, Philip Chan, Peter Collingwood, John

Donald, Sarah Douglas, Christophe Giraud-Carrier, Andrew Kosmorosov, Ray Mooney,

Bruce Porter, Jude Shavlik, Carl Stern, Marco Valtorta, and Bob Veroff. We also appreciate

the numerous suggestions for building this book received both from reviews solicited by

Addison-Wesley, as well as comments sent directly to us by e-mail. Andrew Kosmorosov,

PREFACE XIII

Melanie Mitchell, Jared Saia, Jim Skinner, Carl Stern, and Bob Veroff, colleagues at the

University of New Mexico, have been especially helpful in the technical editing of this

book. In particular we wish to thank Carl Stern for his major role in developing the chap-

chapters on reasoning under uncertain situations and connectionist networks, Jared Saia for

developing the stochastic models of Chapter 11, and Bob Veroff for his contribution to the

chapter on automated reasoning. We thank Academic Press for permission to reprint, in an

altered form, much of the material of Chapter 14; this appeared earlier in the book Cogni-

Cognitive Science: The Science of Intelligent Systems (Luger 1994). Finally, we especially thank

more than a decade of students, including Andy Dofasky, Steve Kleban, Bill Klein, and

Steve Verzi, who have used this text and software in its preliminary stages, for their help in

expanding our horizons, as well as in removing typos and bugs.

We thank our many friends at Addison-Wesley for their support and encouragement in

completing our writing task, especially Alan Apt in helping us with the first edition, Lisa

Moller and Mary Tudor for their help on the second, and Victoria Henderson, Louise Wil-

Wilson, and Karen Mosman for their assistance on the third. We thank Linda Cicarella of the

University of New Mexico for her help in preparing both text and figures for publication.

We wish to thank Thomas Barrow, internationally recognized artist and University of

New Mexico Professor of Art, who created the six photograms especially for this book

after reading an early draft of the manuscript.

In a number of places, we have used figures or quotes from the work of other authors.

We would like to thank the authors and publishers for their permission to use this material.

These contributions are listed at the end of the text.

Artificial intelligence is an exciting and oftentimes rewarding discipline; may you

enjoy your study as you come to appreciate its power and challenges.

George F. Luger

William A. Stubblefield

1 July 1997

Albuquerque

XIV PREFACE

CONTENTS

Preface vii

PARTI

ARTIFICIAL INTELLIGENCE: ITS ROOTS

AND SCOPE

Artificial Intelligence—An Attempted Definition 1

1 Al: HISTORY AND APPLICATIONS 3

1.1 From Eden to ENIAC: Attitudes toward Intelligence, Knowledge, and

Human Artifice 3

1.1.1 Historical Foundations 4

1.1.2 The Development of Logic 7

1.1.3 The Turing Test 10

1.1.4 B iological and Social Models of Intelligence: Agent-Oriented

Problem Solving 13

1.2 Overview of AI Application Areas 17

1.2.1 Game Playing 18

1.2.2 Automated Reasoning and Theorem Proving 19

1.2.3 Expert Systems 20

1.2.4 Natural Language Understanding and Semantic Modeling 22

1.2.5 Modeling Human Performance 23

XV

1.2.6 Planning and Robotics 23

1.2.7 Languages and Environments for AI 25

1.2.8 Machine Learning 25

1.2.9 Parallel Distributed Processing (PDP) and Emergent Computation 26

1.2.10 AI and Philosophy 27

1.3 Artificial Intelligence—A Summary 28

1.4 Epilogue and References 29

1.5 Exercises 30

PART II

ARTIFICIAL INTELLIGENCE AS REPRESENTATION

AND SEARCH

Knowledge Representation 34

Problem Solving as Search 41

2 THE PREDICATE CALCULUS 47

2.0 Introduction 47

2.1 The Prepositional Calculus 47

2.1.1 Symbols and Sentences 47

2.1.2 The Semantics of the Prepositional Calculus 49

2.2 The Predicate Calculus 52

2.2.1 The Syntax of Predicates and Sentences 52

2.2.2 A Semantics for the Predicate Calculus 58

2.3 Using Inference Rules to Produce Predicate Calculus Expressions 64

2.3.1 Inference Rules 64

2.3.2 Unification 68

2.3.3 A Unification Example 72

2.4 Application: A Logic-Based Financial Advisor 75

XVi CONTENTS

2.5 Epilogue and References 79

2.6 Exercises 79

3 STRUCTURES AND STRATEGIES FOR STATE SPACE SEARCH 81

3.0 Introduction 81

3.1 Graph Theory 84

3.1.1 Structures for State Space Search 84

3.1.2 State Space Representation of Problems 87

3.2 Strategies for State Space Search 93

3.2.1 Data-Driven and Goal-Driven Search 93

3.2.2 Implementing Graph Search 96

3.2.3 Depth-First and Breadth-First Search 99

3.2.4 Depth-First Search with Iterative Deepening 106

3.3 Using the State Space to Represent Reasoning with the Predicate Calculus 107

3.3.1 State Space Description of a Logical System 107

3.3.2 And/Or Graphs 109

3.3.3 Further Examples and Applications 111

3.4 Epilogue and References 121

3.5 Exercises 121

4 HEURISTIC SEARCH 123

4.0 Introduction 123

4.1 An Algorithm for Heuristic Search 127

4.1.1 Implementing "Best-First" Search 127

4.1.2 Implementing Heuristic Evaluation Functions 131

4.1.3 Heuristic Search and Expert Systems 136

4.2 Admissibility, Monotonicity, and Informedness 139

CONTENTS XVii

4.2.1 Admissibility Measures 139

4.2.2 Monotonicity 141

4.2.3 When One Heuristic Is Better: More Informed Heuristics 142

4.3 Using Heuristics in Games 144

4.3.1 The Minimax Procedure on Exhaustively Searchable Graphs 144

4.3.2 Minimaxing to Fixed Ply Depth 147

4.3.3 The Alpha-Beta Procedure 150

4.4 Complexity Issues 152

4.5 Epilogue and References 156

4.6 Exercises 156

5 CONTROL AND IMPLEMENTATION OF STATE SPACE SEARCH 159

5.0 Introduction 159

5.1 Recursion-Based Search 160

5.1.1 Recursion 160

5.1.2 Recursive Search 161

5.2 Pattern-Directed Search 164

5.2.1 Example: Recursive Search in the Knight's Tour Problem 165

5.2.2 Refining the Pattern-search Algorithm 168

5.3 Production Systems 171

5.3.1 Definition and History 171

5.3.2 Examples of Production Systems 174

5.3.3 Control of Search in Production Systems 180

5.3.4 Advantages of Production Systems for AI 184

5.4 Predicate Calculus and Planning 186

5.5 The Blackboard Architecture for Problem Solving 196

5.6 Epilogue and References 198

5.7 Exercises 199

xviii contents

PART III

REPRESENTATIONS FOR KNOWLEDGE-BASED

PROBLEM SOLVING

6 KNOWLEDGE-INTENSIVE PROBLEM SOLVING 207

6.0 Introduction 207

6.1 Overview of Expert System Technology 210

6.1.1 The Design of Rule-Based Expert Systems 210

6.1.2 Selecting a Problem for Expert System Development 212

6.1.3 The Knowledge Engineering Process 214

6.1.4 Conceptual Models and Their Role in Knowledge Acquisition 216

6.2 Rule-based Expert Systems 219

6.2.1 The Production System and Goal-driven Problem Solving 220

6.2.2 Explanation and Transparency in Goal-driven Reasoning 224

6.2.3 Using the Production System for Data-driven Reasoning 226

6.2.4 Heuristics and Control in Expert Systems 229

6.2.5 Conclusions: Rule-Based Reasoning 230

6.3 Model-based Reasoning 231

6.3.1 Introduction 231

6.4 Case-based Reasoning 235

6.4.1 Introduction 235

6.5 The Knowledge-Representation Problem 240

6.6 Epilogue and References 245

6.7 Exercises 246

CONTENTS XIX

7 REASONING WITH UNCERTAIN OR INCOMPLETE

INFORMATION 247

7.0 Introduction 247

7.1 The Statistical Approach to Uncertainty 249

7.1.1 Bayesian Reasoning 250

7.1.2 Bayesian Belief Networks 254

7.1.3 The Dempster-Shafer Theory of Evidence 259

7.1.4 The Stanford Certainty Factor Algebra 263

7.1.5 Causal Networks 266

7.2 Introduction to Nonmonotonic Systems 269

7.2.1 Logics for Nonmonotonic Reasoning 269

7.2.2 Logics Based on Minimum Models 273

7.2.3 Truth Maintenance Systems 275

7.2.4 Set Cover and Logic Based Abduction (Stern 1996) 281

7.3 Reasoning with Fuzzy Sets 284

7.4 Epilogue and References 289

7.5 Exercises 290

8 KNOWLEDGE REPRESENTATION 293

8.0 Knowledge Representation Languages 293

8.1 Issues in Knowledge Representation 295

8.2 A Survey of Network Representation 297

8.2.1 Associationist Theories of Meaning 297

8.2.2 Early Work in Semantic Nets 301

8.2.3 Standardization of Network Relationships 303

8.3 Conceptual Graphs: A Network Representation Language 309

8.3.1 Introduction to Conceptual Graphs 309

XX CONTENTS

8.3.2 Types, Individuals, and Names 311

8.3.3 The Type Hierarchy 313

8.3.4 Generalization and Specialization 314

8.3.5 Prepositional Nodes 317

8.3.6 Conceptual Graphs and Logic 318

8.4 Structured Representations 320

8.4.1 Frames 320

8.4.2 Scripts 324

8.5 Issues in Knowledge Representation 328

8.5.1 Hierarchies, Inheritance, and Exceptions 328

8.5.2 Naturalness, Efficiency, and Plasticity 331

8.6 Epilogue and References 334

8.7 Exercises 335

PART IV

LANGUAGES AND PROGRAMMING TECHNIQUES

FOR ARTIFICIAL INTELLIGENCE

Languages, Understanding, and Levels of Abstraction 340

Desired Features of AI Language 342

An Overview of LISP and PROLOG 349

Object-Oriented Programming 352

Hybrid Environments 353

A Hybrid Example 354

Selecting an Implementation Language 356

CONTENTS XXI

9 AN INTRODUCTION TO PROLOG 357

9.0 Introduction 357

9.1 Syntax for Predicate Calculus Programming 358

9.1.1 Representing Facts and Rules 358

9.1.2 Creating, Changing, and Monitoring the PROLOG Environment 362

9.1.3 Recursion-Based Search in PROLOG 364

9.1.4 Recursive Search in PROLOG 366

9.1.5 The Use of Cut to Control Search in PROLOG 369

9.2 Abstract Data Types (ADTs) in PROLOG 371

9.2.1 The ADT Stack 371

9.2.2 The ADT Queue 373

9.2.3 The ADT Priority Queue 373

9.2.4 The ADT Set 374

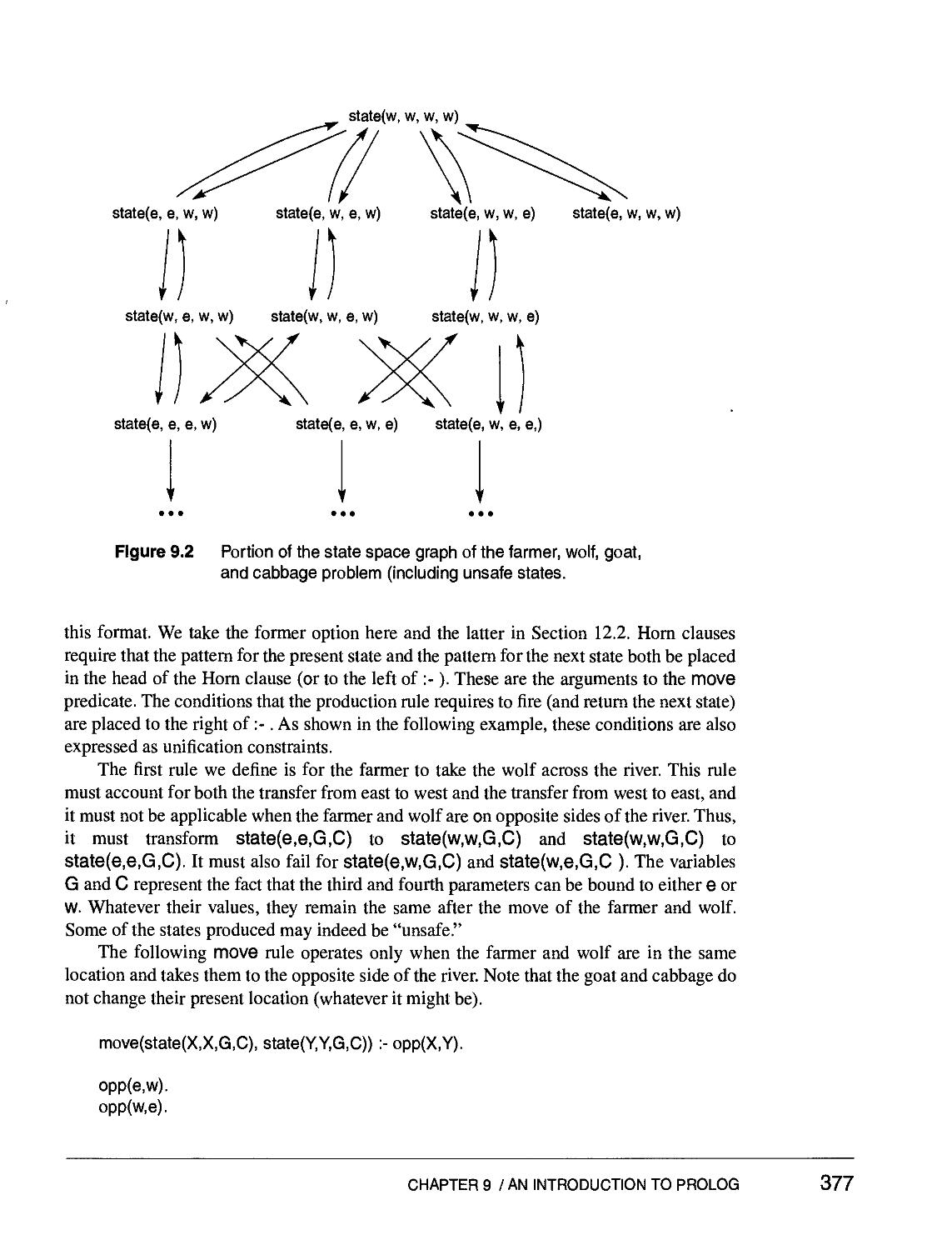

9.3 A Production System Example in PROLOG 375

9.4 Designing Alternative Search Strategies 381

9.4.1 Depth-First Search Using the Closed List 381

9.4.2 Breadth-First Search in PROLOG 383

9.4.3 Best-First Search in PROLOG 384

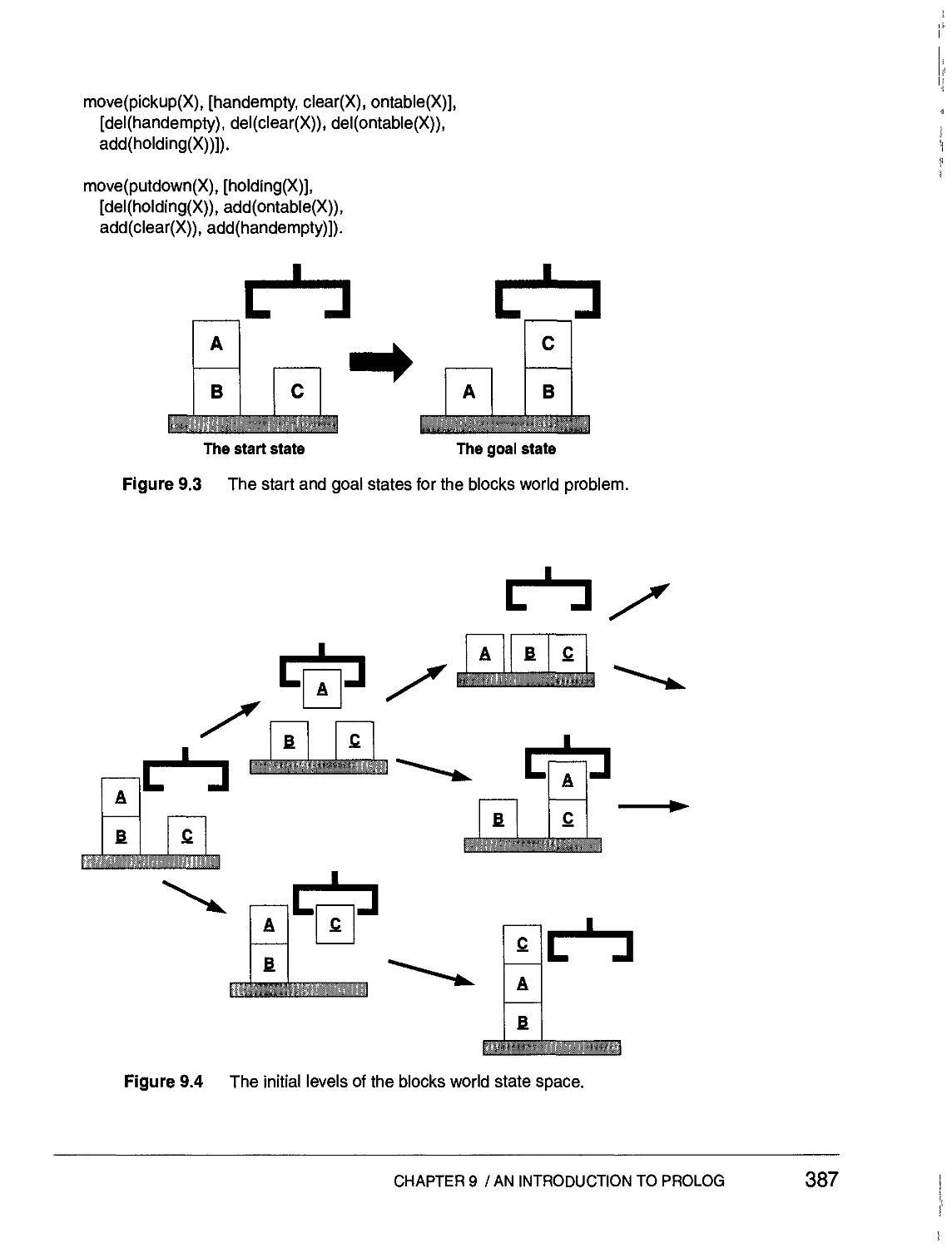

9.5 A PROLOG Planner 386

9.6 PROLOG: Meta-Predicates, Types, and Unification 389

9.6.1 Meta-Logical Predicates 389

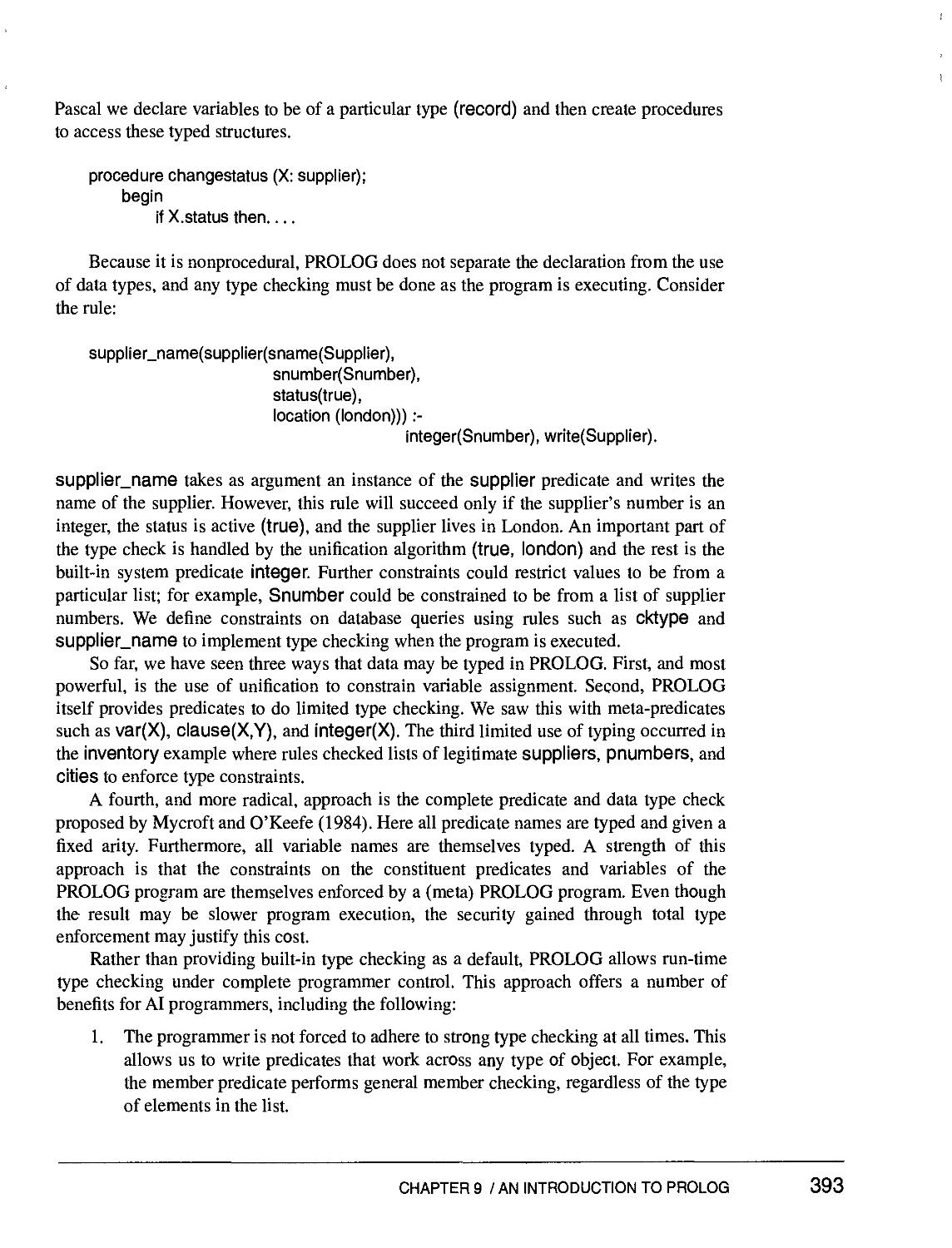

9.6.2 Types in PROLOG 391

9.6.3 Unification, the Engine for Predicate Matching and Evaluation 394

9.7 Meta-Interpreters in PROLOG 397

9.7.1 An Introduction to Meta-Interpreters: PROLOG in PROLOG 397

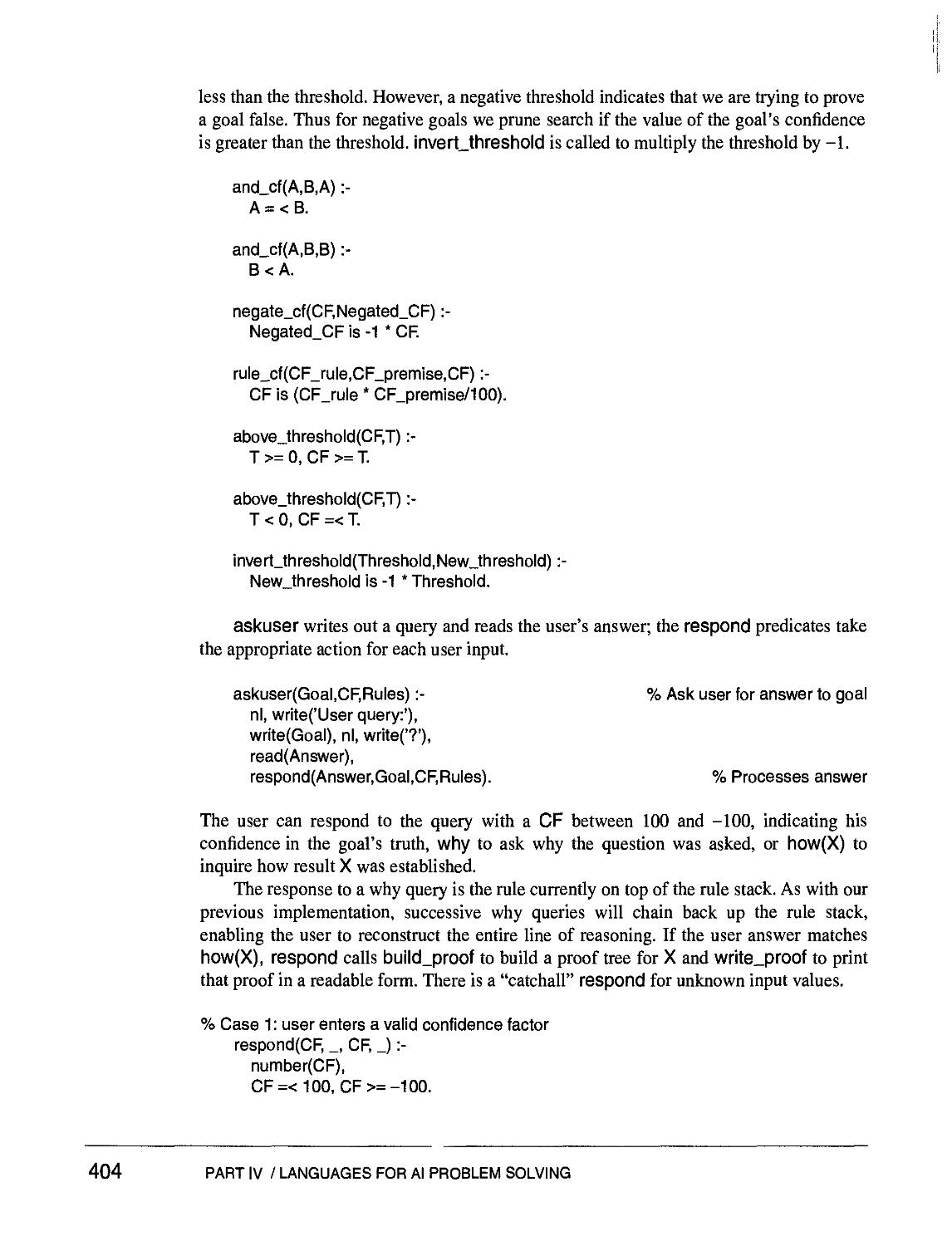

9.7.2 Shell for a Rule-B ased Expert System 401

9.7.3 Semantic Nets in PROLOG 410

9.7.4 Frames and Schemata in PROLOG 412

XXII CONTENTS

9.8 PROLOG: Towards Nonprocedural Computing 415

9.9 Epilogue and References 421

9.10 Exercises 422

10 AN INTRODUCTION TO LISP 425

10.0 Introduction 425

10.1 LISP: A Brief Overview 426

10.1.1 Symbolic Expressions, the Syntactic Basis of LISP 426

10.1.2 Control of LISP Evaluation: quote and eval 430

10.1.3 Programming in LISP: Creating New Functions 431

10.1.4 Program Control in LISP: Conditionals and Predicates 433

10.1.5 Functions, Lists, and Symbolic Computing 436

10.1.6 Lists as Recursive Structures 438

10.1.7 Nested Lists, Structure, and car/cdr Recursion 441

10.1.8 Binding Variables Using set 444

10.1.9 Defining Local Variables Using let 446

10.1.10 Data Types in Common LISP 448

10.1.11 Conclusion 449

10.2 Search in LISP: A Functional Approach to the Farmer, Wolf, Goat,

and Cabbage Problem 449

10.3 Higher-Order Functions and Procedural Abstraction 455

10.3.1 Maps and Filters 455

10.3.2 Functional Arguments and Lambda Expressions 457

10.4 Search Strategies in LISP 459

10.4.1 Breadth-First and Depth-First Search 459

10.4.2 Best-First Search 462

10.5 Pattern Matching in LISP 463

10.6 A Recursive Unification Function 465

CONTENTS XXIII

10.6.1 Implementing the Unification Algorithm 465

10.6.2 Implementing Substitution Sets Using Association Lists 467

10.7 Interpreters and Embedded Languages 469

10.8 Logic Programming in LISP 472

10.8.1 A Simple Logic Programming Language 472

10.8.2 Streams and Stream Processing 474

10.8.3 A Stream-Based Logic Programming Interpreter 477

10.9 Streams and Delayed Evaluation 482

10.10 An Expert System Shell in LISP 486

10.10.1 Implementing Certainty Factors 486

10.10.2 Architecture of lisp-shell 488

10.10.3 User Queries and Working Memory 490

10.10.4 Classification Using lisp-shell 491

10.11 Network Representations and Inheritance 494

10.11.1 Representing Semantic Nets in LISP 494

10.11.2 Implementing Inheritance 497

10.12 Object-Oriented Programming Using CLOS 497

10.12.1 Defining Classes and Instances in CLOS 499

10.12.2 Defining Generic Functions and Methods 501

10.12.3 Inheritance in CLOS 503

10.12.4 Advanced Features of CLOS 505

10.12.5 Example: A Thermostat Simulation 505

10.13 Epilogue and References 511

10.14 Exercises 511

XXIV CONTENTS

PARTV

ADVANCED TOPICS FOR Al PROBLEM SOLVING

Natural Language, Automated Reasoning, and Learning 517

11 UNDERSTANDING NATURAL LANGUAGE 519

11.0 Role of Knowledge in Language Understanding 519

11.1 Language Understanding: A Symbolic Approach 522

11.1.1 Introduction 522

11.1.2 Stages of Language Analysis 523

11.2 Syntax 524

11.2.1 Specification and Parsing Using Context-Free Grammars 524

11.2.2 Transition Network Parsers 527

11.2.3 The Chomsky Hierarchy and Context-Sensitive Grammars 531

11.3 Combining Syntax and Semantics in ATN Parsers 534

11.3.1 Augmented Transition Network Parsers 534

11.3.2 Combining Syntax and Semantics 538

11.4 Stochastic Tools for Language Analysis 543

11.4.1 Introduction 543

11.4.2 A Markov Model Approach 545

11.4.3 A CART Tree Approach 546

11.4.4 Mutual Information Clustering 547

11.4.5 Parsing 548

11.4.6 Other Language Applications for Stochastic Techniques 550

11.5 Natural Language Applications 550

11.5.1 Story Understanding and Question Answering 550

11.5.2 A Database Front End 551

11.6 Epilogue and References 555

11.7 Exercises 557

CONTENTS XXV

12 AUTOMATED REASONING 559

12.0 Introduction to Weak Methods in Theorem Proving 559

12.1 The General Problem Solver and Difference Tables 560

12.2 Resolution Theorem Proving 566

12.2.1 Introduction 566

12.2.2 Producing the Clause Form for Resolution Refutations 568

12.2.3 The Binary Resolution Proof Procedure 573

12.2.4 Strategies and Simplification Techniques for Resolution 578

12.2.5 Answer Extraction from Resolution Refutations 583

12.3 PROLOG and Automated Reasoning 587

12.3.1 Introduction 587

12.3.2 Logic Programming and PROLOG 588

12.4 Further Issues in Automated Reasoning 593

12.4.1 Uniform Representations for Weak Method Solutions 593

12.4.2 Alternative Inference Rules 597

12.4.3 Search Strategies and Their Use 599

12.5 Epilogue and References 600

12.6 Exercises 601

13 MACHINE LEARNING: SYMBOL-BASED 603

13.0 Introduction 603

13.1 A Framework for Symbol-based Learning 606

13.2 Version Space Search 612

13.2.1 Generalization Operators and the Concept Space 612

13.2.2 The Candidate Elimination Algorithm 613

13.2.3 LEX: Inducing Search Heuristics 620

13.2.4 Evaluating Candidate Elimination 623

XXVi CONTENTS

13.3 The ID3 Decision Tree Induction Algorithm 624

13.3.1 Top-Down Decision Tree Induction 627

13.3.2 Information Theoretic Test Selection 628

13.3.3 Evaluating ID3 632

13.3.4 Decision Tree Data Issues: Bagging, Boosting 632

13.4 Inductive Bias and Learnability 633

13.4.1 Inductive Bias 634

13.4.2 The Theory of Learnability 636

13.5 Knowledge and Learning 638

13.5.1 Meta-DENDRAL 639

13.5.2 Explanation-Based Learning 640

13.5.3 EBL and Knowledge-Level Learning 645

13.5.4 Analogical Reasoning 646

13.6 Unsupervised Learning 649

13.6.1 Discovery and Unsupervised Learning 649

13.6.2 Conceptual Clustering 651

13.6.3 COBWEB and the Structure of Taxonomic Knowledge 653

13.7 Epilogue and References 658

13.8 Exercises 659

14 MACHINE LEARNING: CONNECTIONIST 661

14.0 Introduction 661

14.1 Foundations for Connectionist Networks 663

14.1.1 Early History 663

14.2 Perceptron Learning 666

14.2.1 The Perceptron Training Algorithm 666

14.2.2 An Example: Using a Perceptron Network to Classify 668

14.2.3 The Delta Rule 672

CONTENTS XXVii

14.3 Backpropagation Learning 675

14.3.1 Deriving the Backpropagation Algorithm 675

14.3.2 Backpropagation Example l:NETtalk 679

14.3.3 Backpropagation Example 2: Exclusive-or 681

14.4 Competitive Learning 682

14.4.1 Winner-Take-All Learning for Classification 682

14.4.2 A Kohonen Network for Learning Prototypes 684

14.4.3 Grossberg Learning and Counterpropagation 686

14.5 Hebbian Coincidence Learning 690

14.5.1 Introduction 690

14.5.2 An Example of Unsupervised Hebbian Learning 691

14.5.3 Supervised Hebbian Learning 694

14.5.4 Associative Memory and the Linear Associator 696

14.6 Attractor Networks or "Memories" 701

14.6.1 Introduction 701

14.6.2 BAM, the Bi-directional Associative Memory 702

14.6.3 Examples of BAM Processing 704

14.6.4 Autoassociative Memory and Hopfield Nets 706

14.7 Epilogue and References 711

14.8 Exercises 712

15 MACHINE LEARNING: SOCIAL AND EMERGENT 713

15.0 Social and Emergent Models of Learning 713

15.1 The Genetic Algorithm 715

15.1.3 Two Examples: CNF Satisfaction and the Traveling Salesperson 717

15.1.4 Evaluating the Genetic Algorithm 721

15.2 Classifier Systems and Genetic Programming 725

15.2.1 Classifier Systems 725

15.2.2 Programming with Genetic Operators 730

xxviii contents

15.3 Artificial Life and Society-based Learning 736

15.3.1 The "Game of Life" 737

15.3.2 Evolutionary Programming 740

15.3.3 A Case Study in Emergence (Crutchfield and Mitchell 1994) 743

15.4 Epilogue and References 747

15.5 Exercises 748

PART VI

EPILOGUE

Reflections on the Nature of Intelligence 751

16 ARTIFICIAL INTELLIGENCE AS EMPIRICAL

ENQUIRY 753

16.0 Introduction 753

16.1 Artificial Intelligence: A Revised Definition 755

16.1.1 Intelligence and the Physical Symbol System 756

16.1.2 Minds, Brains, and Neural Computing 759

16.1.3 Agents, Emergence, and Intelligence 761

16.1.4 Situated Actors and the Existential Mind 764

16.2 Cognitive Science: An Overview 766

16.2.1 The Analysis of Human Performance 766

16.2.2 The Production System and Human Cognition 767

16.3 Current Issues in Machine Learning 770

16.4 Understanding Intelligence: Issues and Directions 775

16.5 Epilogue and References 780

Bibliography 781

Author Index 803

Subject Index 809

Acknowledgements 823

CONTENTS XXJX

PARTI

ARTIFICIAL INTELLIGENCE:

ITS ROOTS AND SCOPE

Everything must have a beginning, to speak in Sanchean phrase; and that beginning must

be linked to something that went before. Hindus give the world an elephant to support it,

but they make the elephant stand upon a tortoise. Invention, it must be humbly admitted,

does not consist in creating out of void, but out of chaos; the materials must, in the first

place, be afforded....

—Mary Shelley, Frankenstein

Artificial Intelligence: An Attempted Definition

Artificial intelligence (AI) may be defined as the branch of computer science that is

concerned with the automation of intelligent behavior. This definition is particularly

appropriate to this text in that it emphasizes our conviction that AI is a part of computer

science and, as such, must be based on sound theoretical and applied principles of that

field. These principles include the data structures used in knowledge representation, the

algorithms needed to apply that knowledge, and the languages and programming

techniques used in their implementation.

However, this definition suffers from the fact that intelligence itself is not very well

defined or understood. Although most of us are certain that we know intelligent behavior

when we see it, it is doubtful that anyone could come close to defining intelligence in a

way that would be specific enough to help in the evaluation of a supposedly intelligent

computer program, while still capturing the vitality and complexity of the human mind.

Thus the problem of defining artificial intelligence becomes one of defining

intelligence itself: is intelligence a single faculty, or is it just a name for a collection of

distinct and unrelated abilities? To what extent is intelligence learned as opposed to having

an a priori existence? Exactly what does happen when learning occurs? What is

creativity? What is intuition? Can intelligence be inferred from observable behavior, or

does it require evidence of a particular internal mechanism? How is knowledge

represented in the nerve tissue of a living being, and what lessons does this have for the

design of intelligent machines? What is self-awareness, and what role does it play in

intelligence? Is it necessary to pattern an intelligent computer program after what is

known about human intelligence, or is a strict "engineering" approach to the problem

sufficient? Is it even possible to achieve intelligence on a computer, or does an intelligent

entity require the richness of sensation and experience that might be found only in a

biological existence?

These are all unanswered questions, and all of them have helped to shape the

problems and solution methodologies that constitute the core of modern AI. In fact, part of

the appeal of artificial intelligence is that it offers a unique and powerful tool for exploring

exactly these questions. AI offers a medium and a test-bed for theories of intelligence:

such theories may be stated in the language of computer programs and consequently

verified through the execution of these programs on an actual computer.

For these reasons, our initial definition of artificial intelligence seems to fall short of

unambiguously defining the field. If anything, it has only led to further questions and the

paradoxical notion of a field of study whose major goals include its own definition. But

this difficulty in arriving at a precise definition of AI is entirely appropriate. Artificial

intelligence is still a young discipline, and its structure, concerns, and methods are less

clearly defined than those of a more mature science such as physics.

Artificial intelligence has always been more concerned with expanding the capabili-

capabilities of computer science than with defining its limits. Keeping this exploration grounded

in sound theoretical principles is one of the challenges facing AI researchers in general

and this text in particular.

Because of its scope and ambition, artificial intelligence defies simple definition. For

the time being, we will simply define it as the collection of problems and methodologies

studied by artificial intelligence researchers. This definition may seem silly and meaning-

meaningless, but it makes an important point: artificial intelligence, like every science, is a human

endeavor and may best be understood in that context.

There are reasons that any science, AI included, concerns itself with a certain set of

problems and develops a particular body of techniques for approaching these problems. A

short history of artificial intelligence and the people and assumptions that have shaped it

will explain why a certain set of questions have come to dominate the field and why the

methods discussed in this text have been taken for their solution.

PART I / ARTIFICIAL INTELLIGENCE: ITS ROOTS AND SCOPE

Al: HISTORY AND

APPLICATIONS

Hear the rest, and you will marvel even more at the crafts and resources I have contrived.

Greatest was this: in the former times if a man fell sick he had no defense against the

sickness, neither healing food nor drink, nor unguent; but through the lack of drugs men

wasted away, until I showed them the blending of mild simples wherewith they drive out

all manner of diseases....

It was I who made visible to men's eyes the flaming signs of the sky that were before dim.

So much for these. Beneath the earth, man's hidden blessing, copper, iron, silver, and

gold—will anyone claim to have discovered these before I did? No one, I am very sure,

who wants to speak truly and to the purpose. One brief word will tell the whole story: all

arts that mortals have come from Prometheus.

—Aeschylus, Prometheus Bound

1.1 From Eden to ENIAC: Attitudes toward

Intelligence, Knowledge, and Human Artifice

Prometheus speaks of the fruits of his transgression against the gods of Olympus: his

purpose was not merely to steal fire for the human race but also to enlighten humanity

through the gift of intelligence or nous: the "rational mind." This intelligence forms the

foundation for all of human technology and ultimately all human civilization. The work of

the classical Greek dramatist illustrates a deep and ancient awareness of the extraordinary

power of knowledge. Artificial intelligence, in its very direct concern for Prometheus's

gift, has been applied to all the areas of his legacy—medicine, psychology, biology,

astronomy, geology—and many areas of scientific endeavor that Aeschylus could not have

imagined.

Though Prometheus's action freed humanity from the sickness of ignorance, it also

earned him the wrath of Zeus. Outraged over this theft of knowledge that previously

belonged only to the gods of Olympus, Zeus commanded that Prometheus be chained to a

barren rock to suffer the ravages of the elements for eternity. The notion that human efforts

to gain knowledge constitute a transgression against the laws of God or nature is deeply

ingrained in Western thought. It is the basis of the story of Eden and appears in the work of

Dante and Milton. Both Shakespeare and the ancient Greek tragedians portrayed

intellectual ambition as the cause of disaster. The belief that the desire for knowledge must

ultimately lead to disaster has persisted throughout history, enduring the Renaissance, the

Age of Enlightenment, and even the scientific and philosophical advances of the

nineteenth and twentieth centuries. Thus, we should not be surprised that artificial

intelligence inspires so much controversy in both academic and popular circles.

Indeed, rather than dispelling this ancient fear of the consequences of intellectual

ambition, modern technology has only made those consequences seem likely, even

imminent. The legends of Prometheus, Eve, and Faustus have been retold in the language

of technological society. In her introduction to Frankenstein (subtitled, interestingly

enough, The Modern Prometheus), Mary Shelley writes:

Many and long were the conversations between Lord Byron and Shelley to which I was a

devout and silent listener. During one of these, various philosophical doctrines were discussed,

and among others the nature of the principle of life, and whether there was any probability of its

ever being discovered and communicated. They talked of the experiments of Dr. Darwin (I

speak not of what the doctor really did or said iliat he did, but, as more to my purpose, of what

was then spoken of as having been done by him), who preserved a piece of vermicelli in a glass

case till by some extraordinary means it began to move with a voluntary motion. Not thus, after

all, would life be given. Perhaps a corpse would be reanimated; galvanism had given token of

such things: perhaps the component parts of a creature might be manufactured, brought

together, and endued with vital warmth.

Shelley shows us the extent to which scientific advances such as the work of Darwin

and the discovery of electricity had convinced even nonscientists that the workings of

nature were not divine secrets, but could be broken down and understood systematically.

Frankenstein's monster is not the product of shamanistic incantations or unspeakable

transactions with the underworld: it is assembled from separately "manufactured"

components and infused with the vital force of electricity. Although nineteenth-century

science was inadequate to realize the goal of understanding and creating a fully intelligent

agent, it affirmed the notion that the mysteries of life and intellect might be brought into

the light of scientific analysis.

1.1.1 Historical Foundations

By the time Mary Shelley finally and perhaps irrevocably joined modern science with the

Promethean myth, the philosophical foundations of modern work in artificial intelligence

had been developing for several thousand years. Although the moral and cultural issues

raised by artificial intelligence are both interesting and important, our introduction is more

properly concerned with AI's intellectual heritage. The logical starting point for such a

history is the genius of Aristotle, or, as Dante refers to him, "the master of those who

PART I / ARTIFICIAL INTELLIGENCE: ITS ROOTS AND SCOPE

know." Aristotle wove together the insights, wonders, and fears of the early Greek

tradition with the careful analysis and disciplined thought that were to become the

standard for more modern science.

For Aristotle, the most fascinating aspect of nature was change. In his Physics, he

defined his "philosophy of nature" as the "study of things that change." He distinguished

between the "matter" and "form" of things: a sculpture is fashioned from the "material"

bronze and has the "form" of a human. Change occurs when the bronze is molded to a new

form. The matter/form distinction provides a philosophical basis for modern notions such

as symbolic computing and data abstraction. In computing (even with numbers) we are

manipulating patterns that are the forms of electromagnetic material, with the changes of

form of this material representing aspects of the solution process. Abstracting the form

from the medium of its representation not only allows these forms to be manipulated

computationally but also provides the promise of a theory of data structures, the heart of

modern computer science.

In his Metaphysics (located just after, meta, the Physics in his writing), Aristotle

developed a science of things that never change, including his cosmology and theology.

More relevant to artificial intelligence, however, was Aristotle's epistemology or science

of knowing, discussed in his Logic. Aristotle referred to his logic as the "instrument"

(organon), because he felt that the study of thought itself was at the basis of all

knowledge. In his Logic, he investigated whether certain propositions can be said to be

"true" because they are related to other things that are known to be true. Thus if we know

that "all men are mortal" and that "Socrates is a man," then we can conclude that "Socrates

is mortal." This argument is an example of what Aristotle referred to as a syllogism using

the deductive form modus ponens. Although the formal axiomatization of reasoning

needed another two thousand years for its full flowering in the works of Gottlob Frege,

Bertrand Russell, Kurt Godel, Alan Turing, Alfred Tarski, and others, its roots may be

traced to Aristotle.

Renaissance thought, building on the Greek tradition, initiated the evolution of a

different and powerful way of thinking about humanity and its relation to the natural

world. Empiricism began to replace mysticism as a means of understanding nature. Clocks

and, eventually, factory schedules superseded the rhythms of nature for thousands of city

dwellers. Most of the modern social and physical sciences found their origin in the notion

that processes, whether natural or artificial, could be mathematically analyzed and

understood. In particular, scientists and philosophers realized that thought itself, the way

that knowledge was represented and manipulated in the human mind, was a difficult but

essential subject for scientific study.

Perhaps the major event in the development of the modern world view was the

Copernican revolution, the replacement of the ancient Earth-centered model of the

universe with the idea that the Earth and other planets actually rotate around the sun. After

centuries of an "obvious" order, in which the scientific explanation of the nature of the

cosmos was consistent with the teachings of religion and common sense, a drastically

different and not at all obvious model was proposed to explain the motions of heavenly

bodies. For perhaps the first time, our ideas about the world were seen as fundamentally

distinct from its appearance. This split between the human mind and its surrounding

reality, between ideas about things and things themselves, is essential to the modern study

CHAPTER 1 / Al: HISTORY AND APPLICATIONS

of the mind and its organization. This breach was widened by the writings of Galileo,

whose scientific observations further contradicted the "obvious" truths about the natural

world and whose development of mathematics as a tool for describing that world

emphasized the distinction between the world and our ideas about it. It is out of this

breach that the modern notion of the mind evolved: introspection became a common motif ■•

in literature, philosophers began to study epistemology and mathematics, and the

systematic application of the scientific method rivaled the senses as tools for \

understanding the world. I

Although the seventeenth and eighteenth centuries saw a great deal of work in '

epistemology and related fields, here we have space only to discuss the work of Rene

Descartes. Descartes is a central figure in the development of the modern concepts of

thought and the mind. In his famous Meditations, Descartes attempted to find a basis for

reality purely through cognitive introspection. Systematically rejecting the input of his t

senses as untrustworthy, Descartes was forced to doubt even the existence of the physical

world and was left with only the reality of thought; even his own existence had to be

justified in terms of thought: "Cogito ergo sum" (I think, therefore I am). After he

established his own existence purely as a thinking entity, Descartes inferred the existence

of God as an essential creator and ultimately reasserted the reality of the physical universe

as the necessary creation of a benign God.

We can make two interesting observations here: first, the schism between the mind

and the physical world had become so complete that the process of thinking could be

discussed in isolation from any specific sensory input or worldly subject matter; second,

the connection between mind and the physical world was so tenuous that it required the

intervention of a benign God to allow reliable knowledge of the physical world. This view

of the duality between the mind and the physical world underlies all of Descartes's

thought, including his development of analytic geometry. How else could he have unified 1

such a seemingly worldly branch of mathematics as geometry with such an abstract ;

mathematical framework as algebra?

Why have we included this philosophical discussion in a text on artificial

intelligence? There are two consequences of this analysis that are essential to the

enterprise of artificial intelligence:

1. By separating the mind and the physical world, Descartes and related thinkers

established that the structure of ideas about the world was not necessarily the

same as the structure of their subject matter. This underlies the methodology of

the field of AI, along with the fields of epistemology, psychology, much of higher

mathematics, and most of modern literature: mental processes had an existence

of their own, obeyed their own laws, and could be studied in and of themselves.

2. Once the mind and the body were separated, philosophers found it necessary to

find a way to reconnect the two, because interaction between the mental and the

physical is essential for human existence.

Although millions of words have been written on the mind-body problem, and

numerous solutions proposed, no one has successfully explained the obvious interactions

PART I / ARTIFICIAL INTELLIGENCE: ITS ROOTS AND SCOPE

between mental states and physical actions while affirming a fundamental difference

between them. The most widely accepted response to this problem, and the one that

provides an essential foundation for the study of AI, holds that the mind and the body are

not fundamentally different entities at all. In this view, mental processes are indeed

achieved by physical systems such as brains (or computers). Mental processes, like

physical processes, can ultimately be characterized through formal mathematics. Or, as

stated by the Scots philosopher David Hume, "Cognition is computation."

1.1.2 The Development of Logic

Once thinking had come to be regarded as a form of computation, its formalization and

eventual mechanization were logical next steps. In the seventeenth century, Gottfried Wil-

helm von Leibniz introduced the first system of formal logic and constructed machines for

automating calculation (Leibniz 1887). Euler, in the eighteenth century, with his analysis

of the "connectedness" of the bridges joining the riverbanks and islands of the city of

Konigsberg (see the introduction to Chapter 3), introduced the study of representations

that abstractly capture the structure of relationships in the world (Euler 1735).

The formalization of graph theory also afforded the possibility of state space search,

a major conceptual tool of artificial intelligence. We can use graphs to model the deeper

structure of a problem. The nodes of a state space graph represent possible stages of a

problem solution; the arcs of the graph represent inferences, moves in a game, or other

steps in a problem solution. Solving the problem is a process of searching the state space

graph for a path to a solution (Section 1.3 and Chapter 3). By describing the entire space

of problem solutions, state space graphs provide a powerful tool for measuring the struc-

structure and complexity of problems and analyzing the efficiency, correctness, and generality

of solution strategies.

As one of the originators of the science of operations research, as well as the designer

of the first programmable mechanical computing machines, Charles Babbage, a nineteenth

century mathematician, is arguably the earliest practitioner of artificial intelligence (Mor-

(Morrison and Morrison 1961). Babbage's "difference engine" was a special-purpose machine

for computing the values of certain polynomial functions and was the forerunner of his

"analytical engine." The analytical engine, designed but not successfully constructed dur-

during Babbage's lifetime, was a general-purpose programmable computing machine that

presaged many of the architectural assumptions underlying the modern computer.

In describing the analytical engine, Ada Lovelace A961), Babbage's friend, sup-

supporter, and collaborator, said:

We may say most aptly that the Analytical Engine weaves algebraical patterns just as the Jac-

quard loom weaves flowers and leaves. Here, it seems to us, resides much more of

originality than the difference engine can be fairly entitled to claim.

Babbage's inspiration was his desire to apply the technology of his day to liberate

humans from the drudgery of arithmetic calculation. In this sentiment, as well as his

conception of his computers as mechanical devices, Babbage was thinking in purely

CHAPTER 1 / AI: HISTORY AND APPLICATIONS

nineteenth-century terms. His analytical engine, however, included many modern notions,

such as the separation of memory and processor (the "store" and the "mill" in Babbage's

terms), the concept of a digital rather than analog machine, and programmability based on

the execution of a series of operations encoded on punched pasteboard cards. The most

striking feature of Ada Lovelace's description, and of Babbage's work in general, is its

treatment of the "pattern" of an intellectual activity as an entity that may be studied,

characterized, and finally implemented mechanically without concern for the particular

values that are finally passed through the "mill" of the calculating machine. This is an

implementation of the "abstraction and manipulation of form" first described by Aristotle.

The goal of creating a formal language for thought also appears in the work of George

Boole, another nineteenth-century mathematician whose work must be included in any

discussion of the roots of artificial intelligence (Boole 1847, 1854). Although he made

contributions to a number of areas of mathematics, his best known work was in the

mathematical formalization of the laws of logic, an accomplishment that forms the very

heart of modern computer science. Though the role of Boolean algebra in the design of

logic circuitry is well known, Boole's own goals in developing his system seem closer to

those of contemporary AI researchers. In the first chapter of An Investigation of the Laws

of Thought, on which are founded the Mathematical Theories of Logic and Probabilities,

Boole described his goals as

to investigate the fundamental laws of those operations of the mind by which reasoning is

performed: to give expression to them in the symbolical language of a Calculus, and upon this

foundation to establish the science of logic and instruct its method; ...and finally to collect

from the various elements of truth brought to view in the course of these inquiries some proba-

probable intimations concerning the nature and constitution of the human mind.

The greatness of Boole's accomplishment is in the extraordinary power and simplicity

of the system he devised: three operations, "AND" (denoted by * or a), "OR" (denoted by

+ or v), and "NOT" (denoted by -0, formed the heart of his logical calculus. These

operations have remained the basis for all subsequent developments in formal logic,

including the design of modern computers. While keeping the meaning of these symbols

nearly identical to the corresponding algebraic operations, Boole noted that "the Symbols

of logic are further subject to a special law, to which the symbols of quantity, as such, are

not subject." This law states that for any X, an element in the algebra, X*X=X (or that once

something is known to be true, repetition cannot augment that knowledge). This led to the

characteristic restriction of Boolean values to the only two numbers that may satisfy this

equation: 1 and 0. The standard definitions of Boolean multiplication (AND) and addition

(OR) follow from this insight. Boole's system not only provided the basis of binary

arithmetic but also demonstrated that an extremely simple formal system was adequate to

capture the full power of logic. This assumption and the system Boole developed to

demonstrate it form the basis of all modern efforts to formalize logic, from Russell and

Whitehead's Principia Mathematica (Whitehead and Russell 1950), through the work of

Turing and Godel, up to modern automated reasoning systems.

Gottlob Frege, in his Foundations of Arithmetic (Frege 1879, 1884), created a

mathematical specification language for describing the basis of arithmetic in a clear and

8 PART I / ARTIFICIAL INTELLIGENCE: ITS ROOTS AND SCOPE

precise fashion. With this language Frege formalized many of the issues first addressed by

Aristotle's Logic. Frege's language, now called the first-order predicate calculus, offers a

tool for describing the propositions and truth value assignments that make up the elements

of mathematical reasoning and describes the axiomatic basis of "meaning" for these

expressions. The formal system of the predicate calculus, which includes predicate

symbols, a theory of functions, and quantified variables, was intended to be a language for

describing mathematics and its philosophical foundations. It also plays a fundamental role

in creating a theory of representation for artificial intelligence (Chapter 2). The first-order

predicate calculus offers the tools necessary for automating reasoning: a language for

expressions, a theory for assumptions related to the meaning of expressions, and a

logically sound calculus for inferring new true expressions.

Russell and Whitehead's A950) work is particularly important to the foundations of

AI, in that their stated goal was to derive the whole of mathematics through formal opera-

operations on a collection of axioms. Although many mathematical systems have been con-

constructed from basic axioms, what is interesting is Russell and Whitehead's commitment to

mathematics as a purely formal system. This meant that axioms and theorems would be

treated solely as strings of characters: proofs would proceed solely through the application

of well-defined rules for manipulating these strings. There would be no reliance on intu-

intuition or the meaning of theorems as a basis for proofs. Every step of a proof followed from

the strict application of formal (syntactic) rules to either axioms or previously proven the-

theorems, even where traditional proofs might regard such a step as "obvious." What "mean-

"meaning" the theorems and axioms of the system might have in relation to the world would be

independent of their logical derivations. This treatment of mathematical reasoning in

purely formal (and hence mechanical) terms provided an essential basis for its automation

on physical computers. The logical syntax and formal rules of inference developed by

Russell and Whitehead are still a basis for automatic theorem-proving systems as well as

for the theoretical foundations of artificial intelligence.

Alfred Tarski is another mathematician whose work is essential to the foundations of

AI. Tarski created a theory of reference wherein the well-formed formulae of Frege or

Russell and Whitehead can be said to refer, in a precise fashion, to the physical world (Tar-

(Tarski 1944, 1956; see Chapter 2). This insight underlies most theories of formal semantics.

In his paper "The semantic conception of truth and the foundation of semantics," Tarski

describes his theory of reference and truth value relationships. Modern computer scien-

scientists, especially Scott, Strachey, Burstall (Burstall and Darlington 1977), and Plotkin have

related this theory to programming languages and other specifications for computing.

Although in the eighteenth, nineteenth, and early twentieth centuries the

formalization of science and mathematics created the intellectual prerequisite for the study

of artificial intelligence, it was not until the twentieth century and the introduction of the

digital computer that AI became a viable scientific discipline. By the end of the 1940s

electronic digital computers had demonstrated their potential to provide the memory and

processing power required by intelligent programs. It was now possible to implement

formal reasoning systems on a computer and empirically test their sufficiency for

exhibiting intelligence. An essential component of the science of artificial intelligence is

this commitment to digital computers as the vehicle of choice for creating and testing

theories of intelligence.

CHAPTER 1 / AI: HISTORY AND APPLICATIONS 9

Digital computers are not merely a vehicle for testing theories of intelligence. Their

architecture also suggests a specific paradigm for such theories: intelligence is a form of

information processing. The notion of search as a problem-solving methodology, for

example, owes more to the sequential nature of computer operation than it does to any

biological model of intelligence. Most AI programs represent knowledge in some formal

language that is then manipulated by algorithms, honoring the separation of data and

program fundamental to the von Neumann style of computing. Formal logic has emerged

as the lingua franca of AI research, whereas graph theory plays an indispensable role in the

analysis of problem spaces as well as providing a basis for semantic networks and

similar models of semantic meaning. These techniques and formalisms are discussed in

detail throughout the body of this text; we mention them here to emphasize the symbiotic

relationship between the digital computer and the theoretical underpinnings of artificial

intelligence.

We often forget that the tools we create for our own purposes tend to shape our

conception of the world through their structure and limitations. Although seemingly

restrictive, this interaction is an essential aspect of the evolution of human knowledge: a

tool (and scientific theories are ultimately only tools) is developed to solve a particular

problem. As it is used and refined, the tool itself seems to suggest other applications,

leading to new questions and, ultimately, the development of new tools.

1.1.3 The Turing Test

One of the earliest papers to address the question of machine intelligence specifically in

relation to the modern digital computer was written in 1950 by the British mathematician

Alan Turing. "Computing machinery and intelligence" (Turing 1950) remains timely in

both its assessment of the arguments against the possibility of creating an intelligent

computing machine and its answers to those arguments. Turing, known mainly for his con-

contributions to the theory of computability, considered the question of whether or not a

machine could actually be made to think. Noting that the fundamental ambiguities in the

question itself (what is thinking? what is a machine?) precluded any rational answer, he

proposed that the question of intelligence be replaced by a more clearly defined

empirical test.

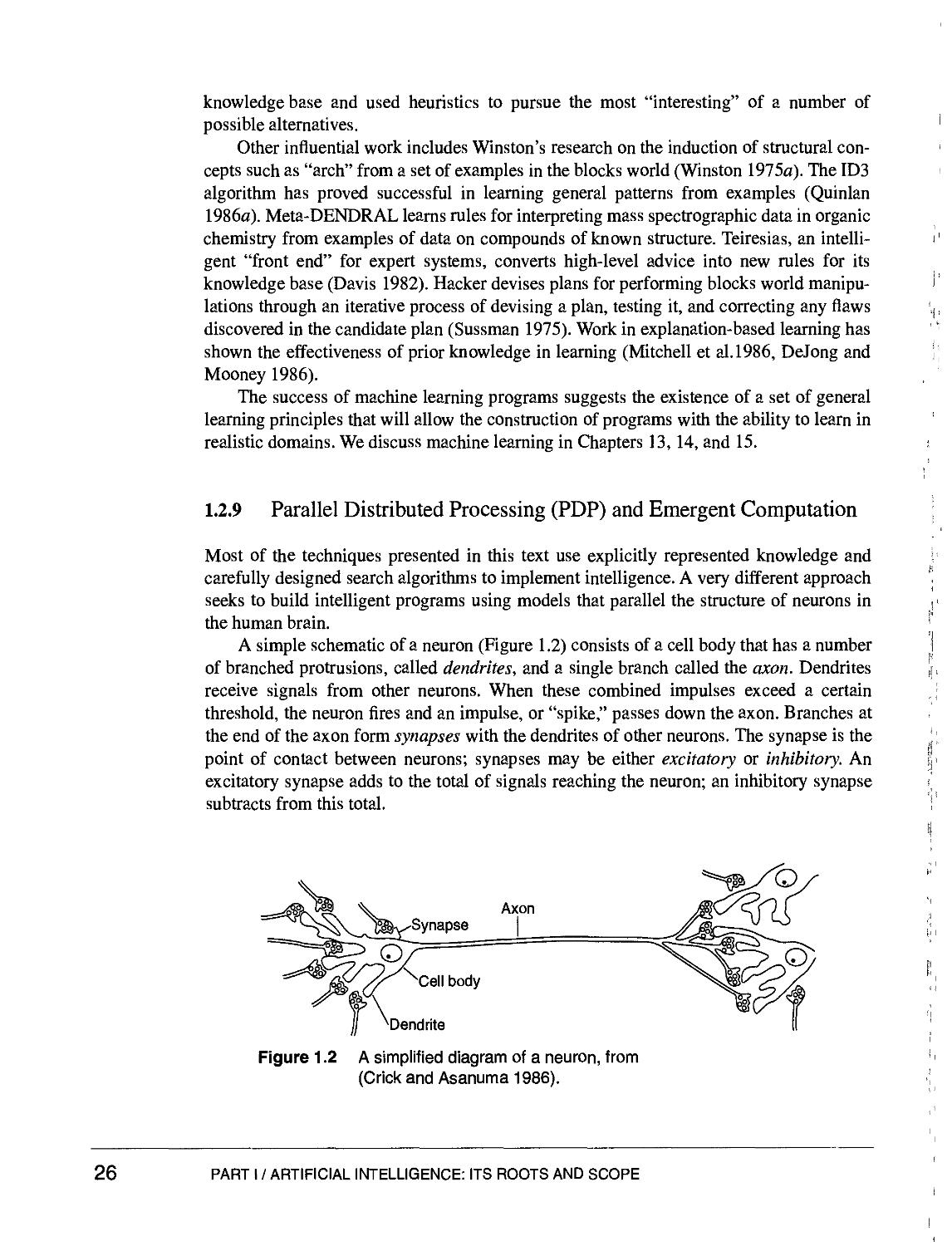

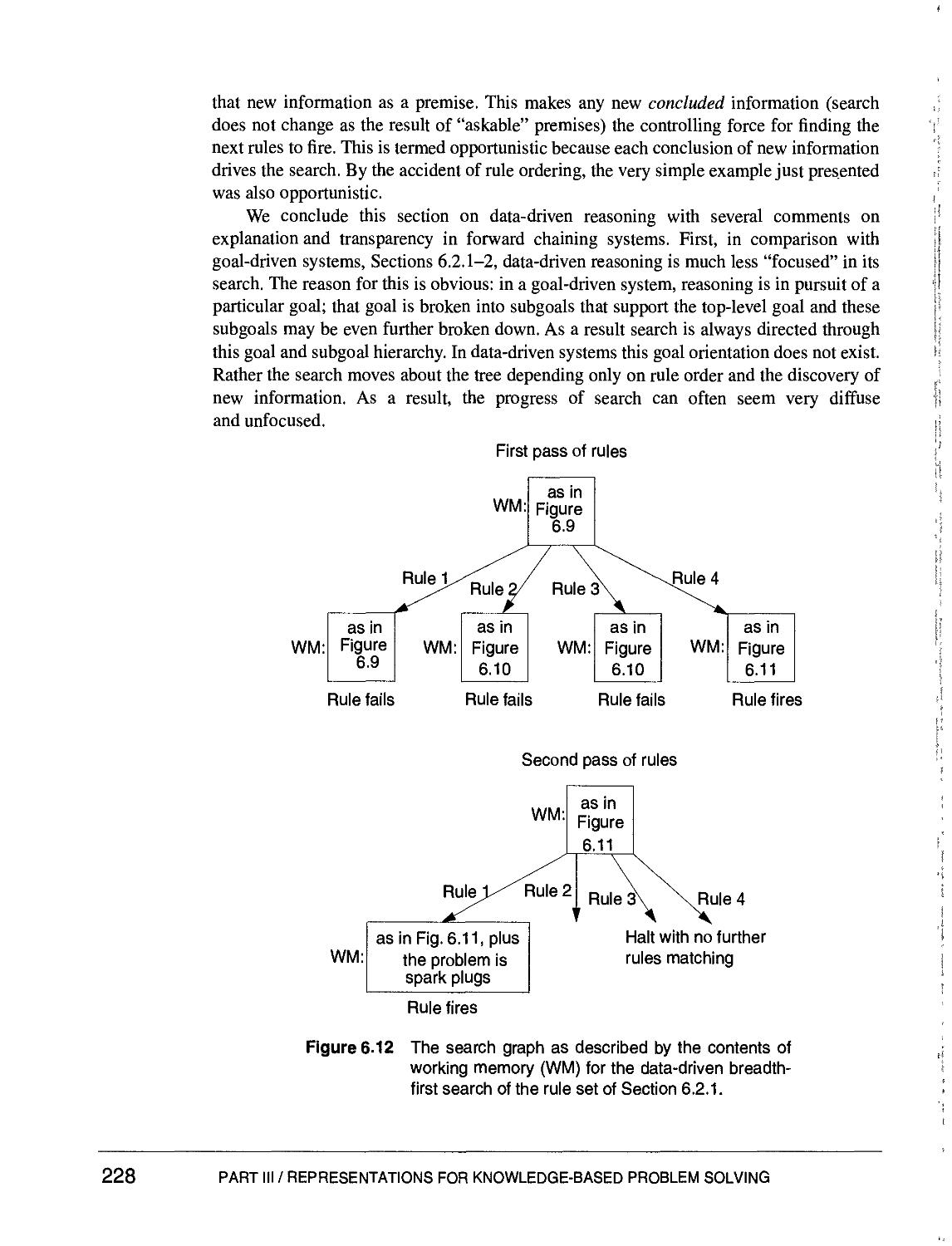

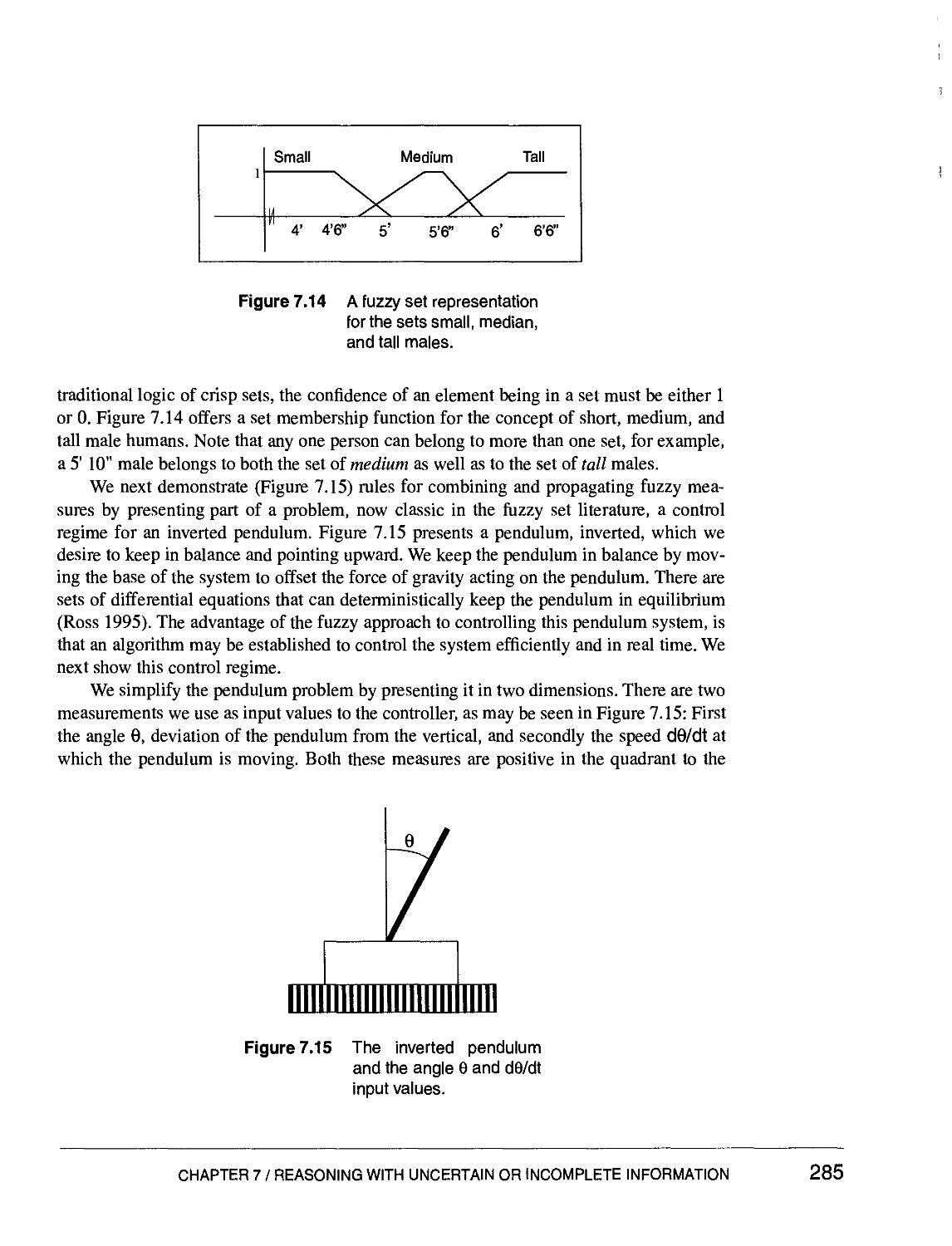

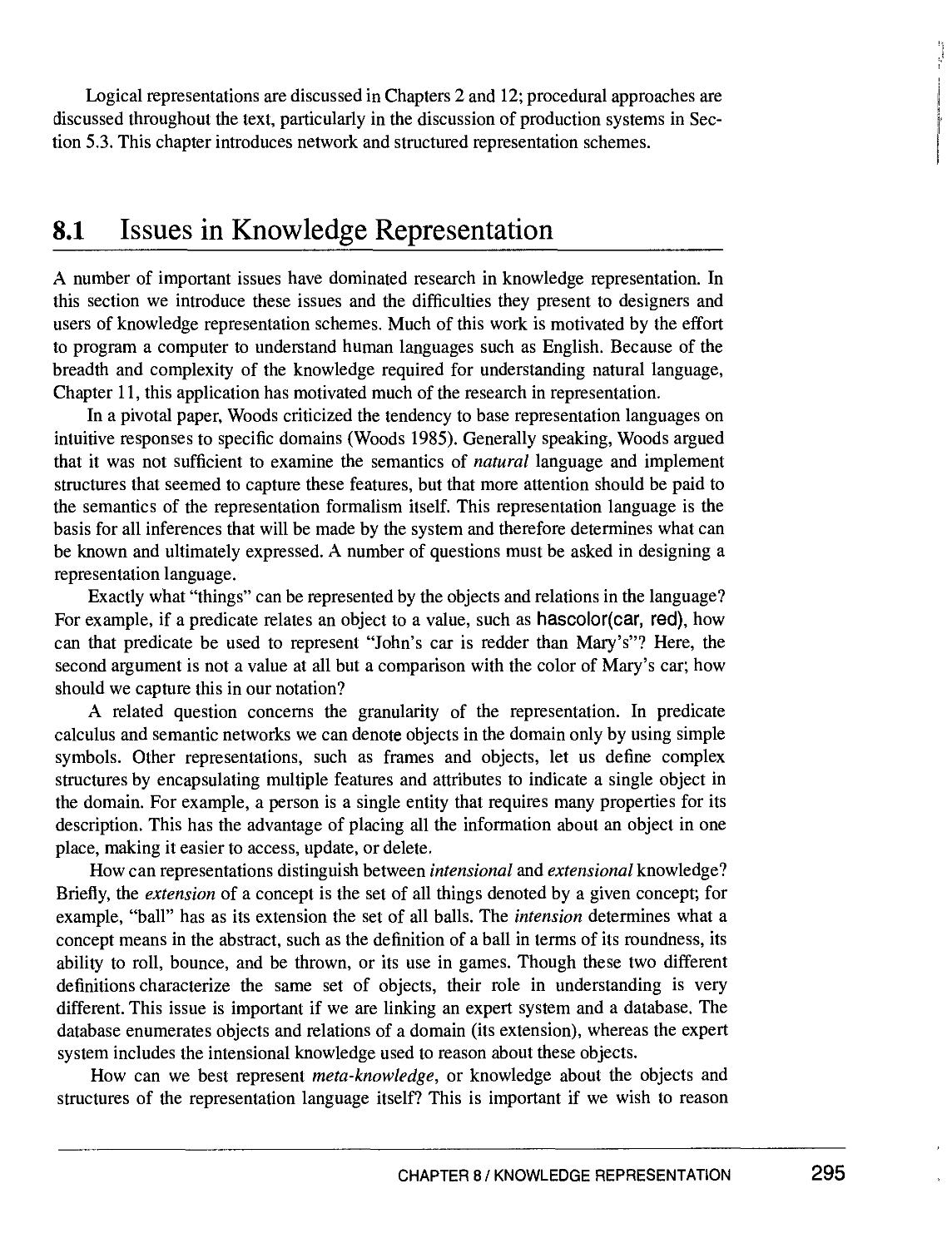

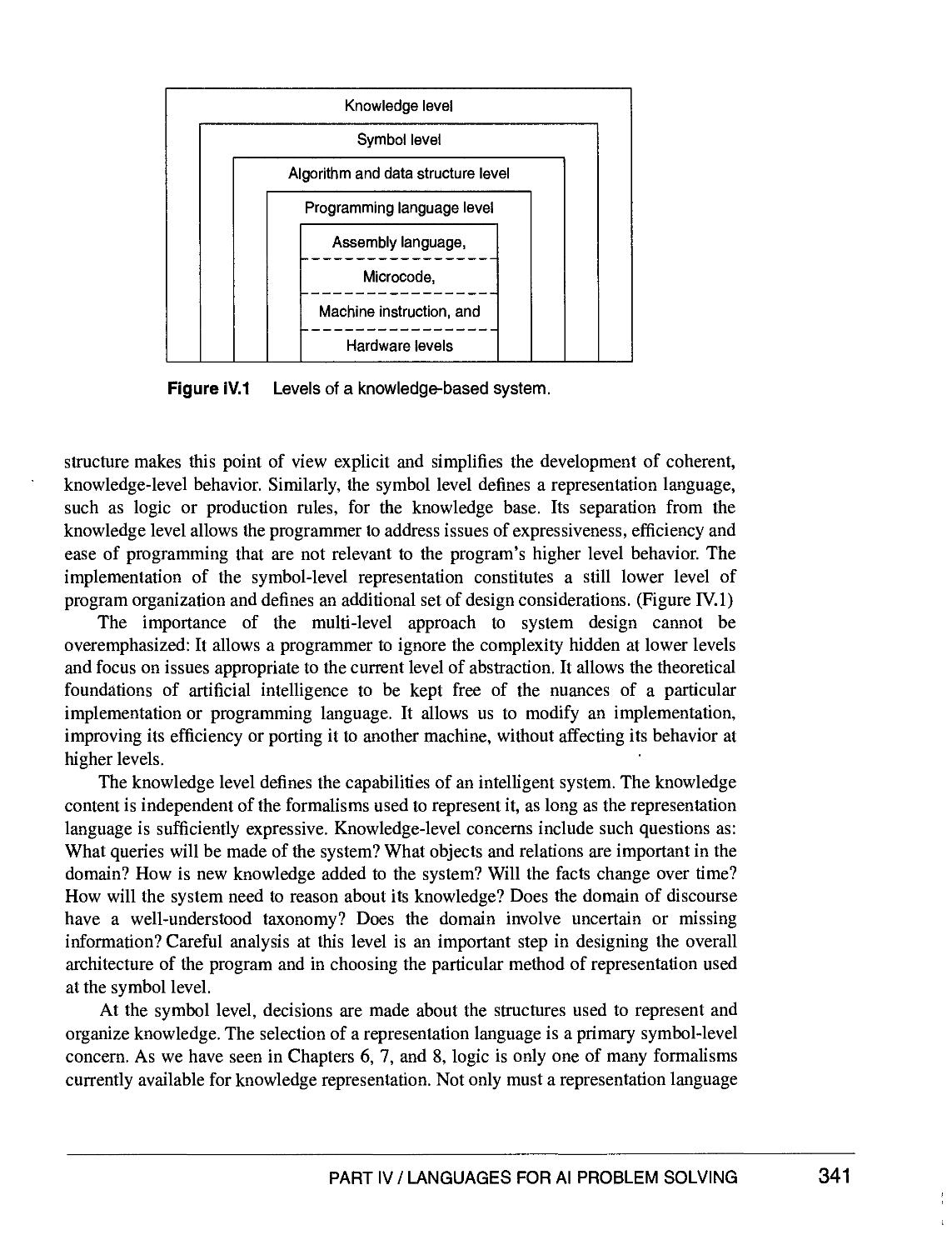

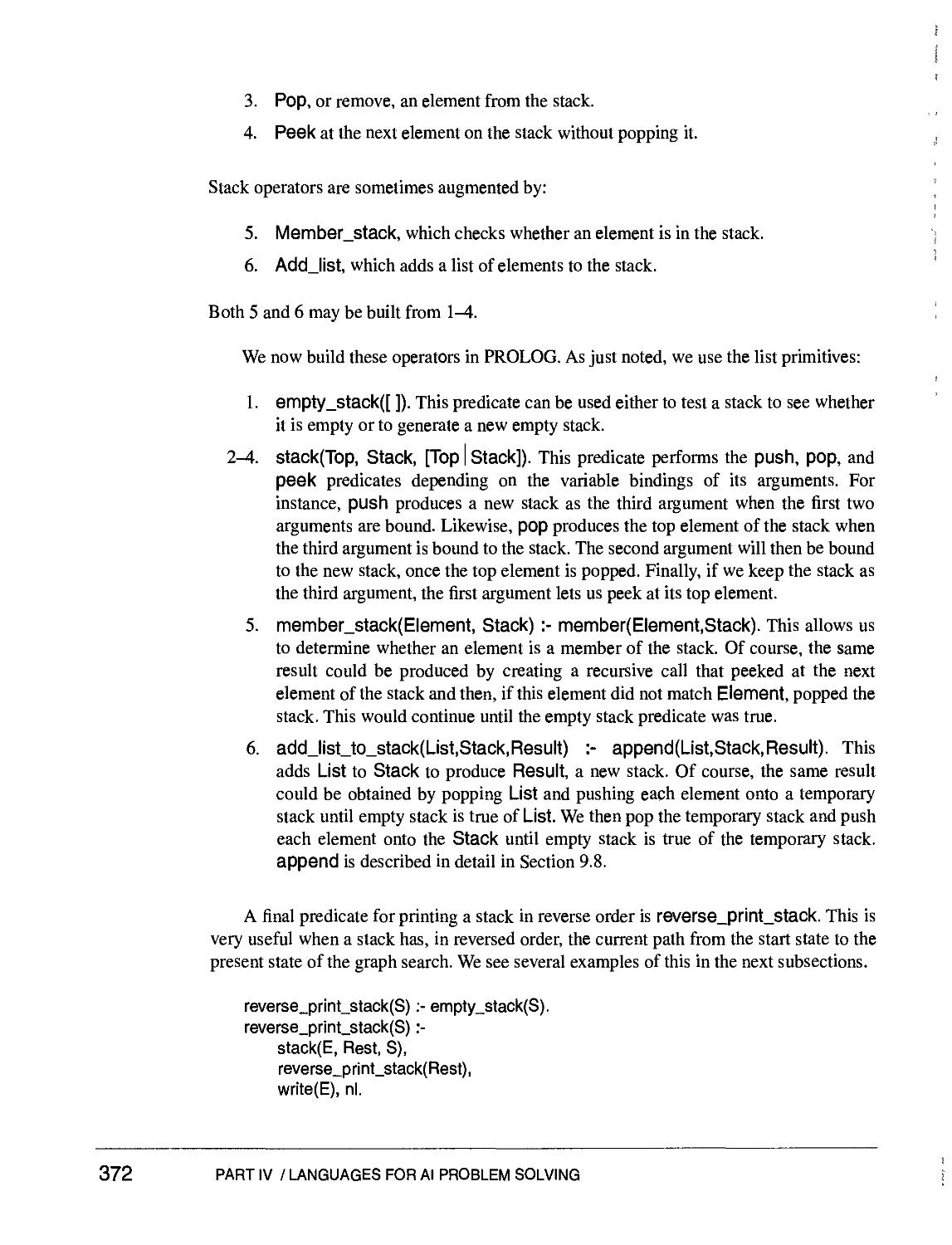

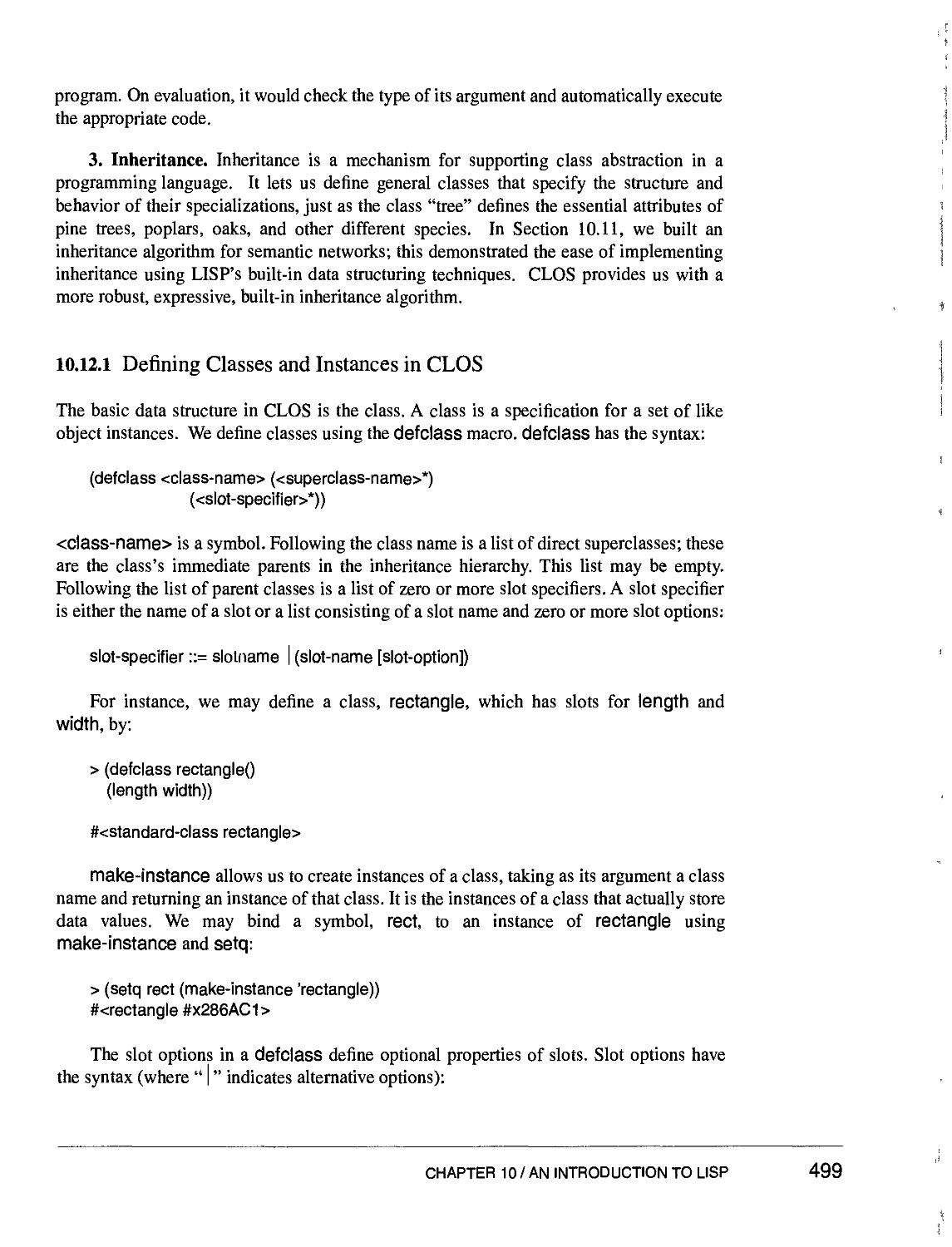

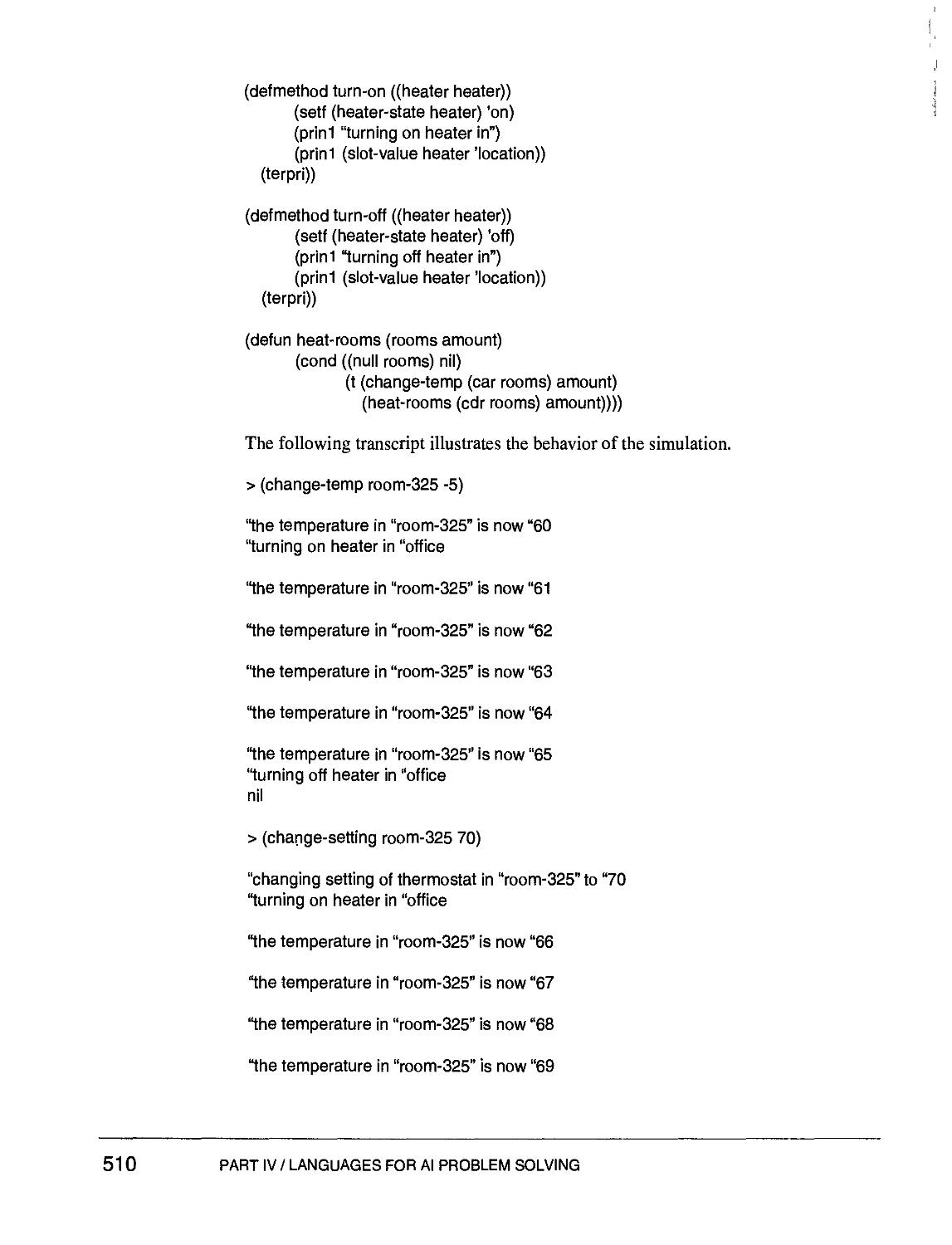

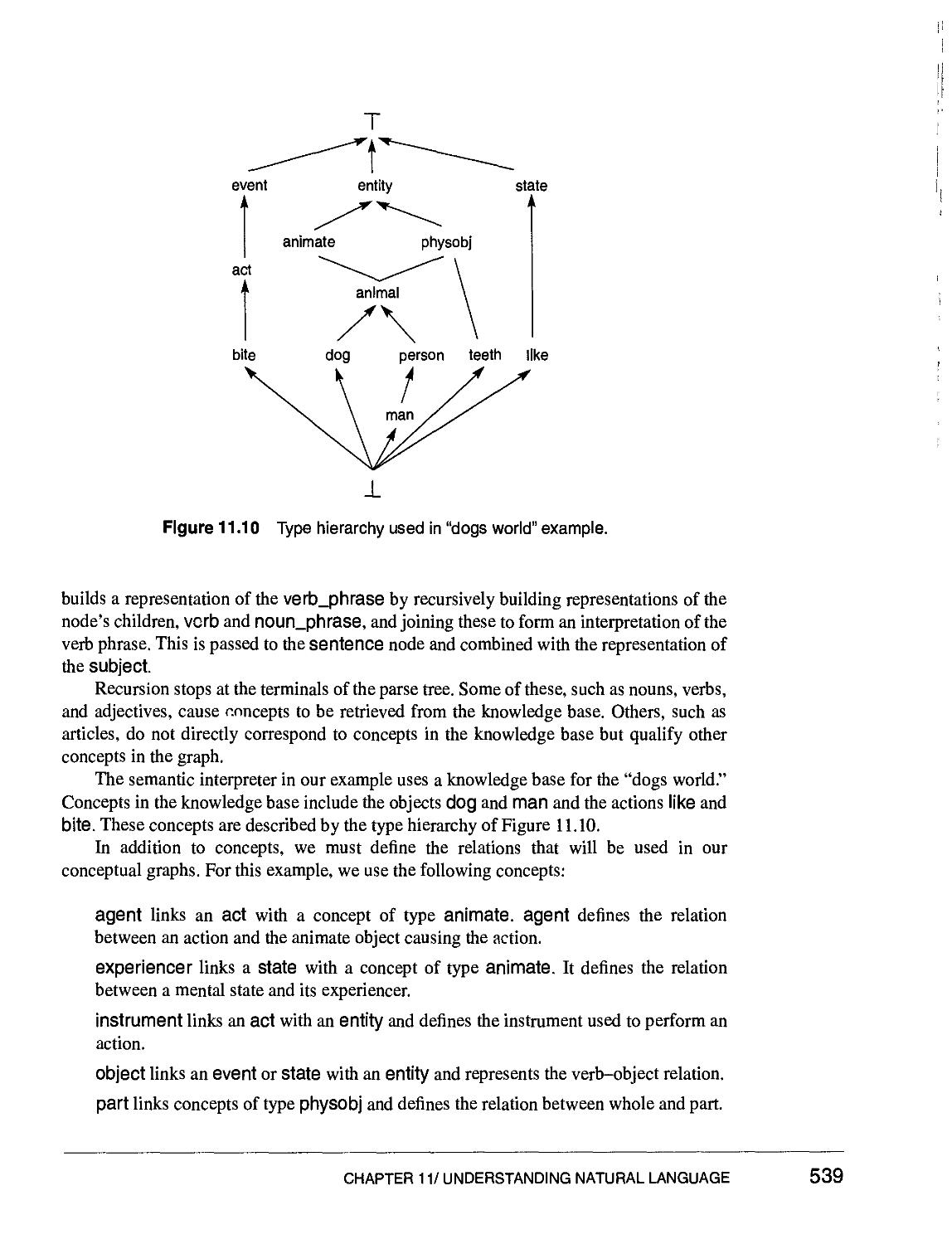

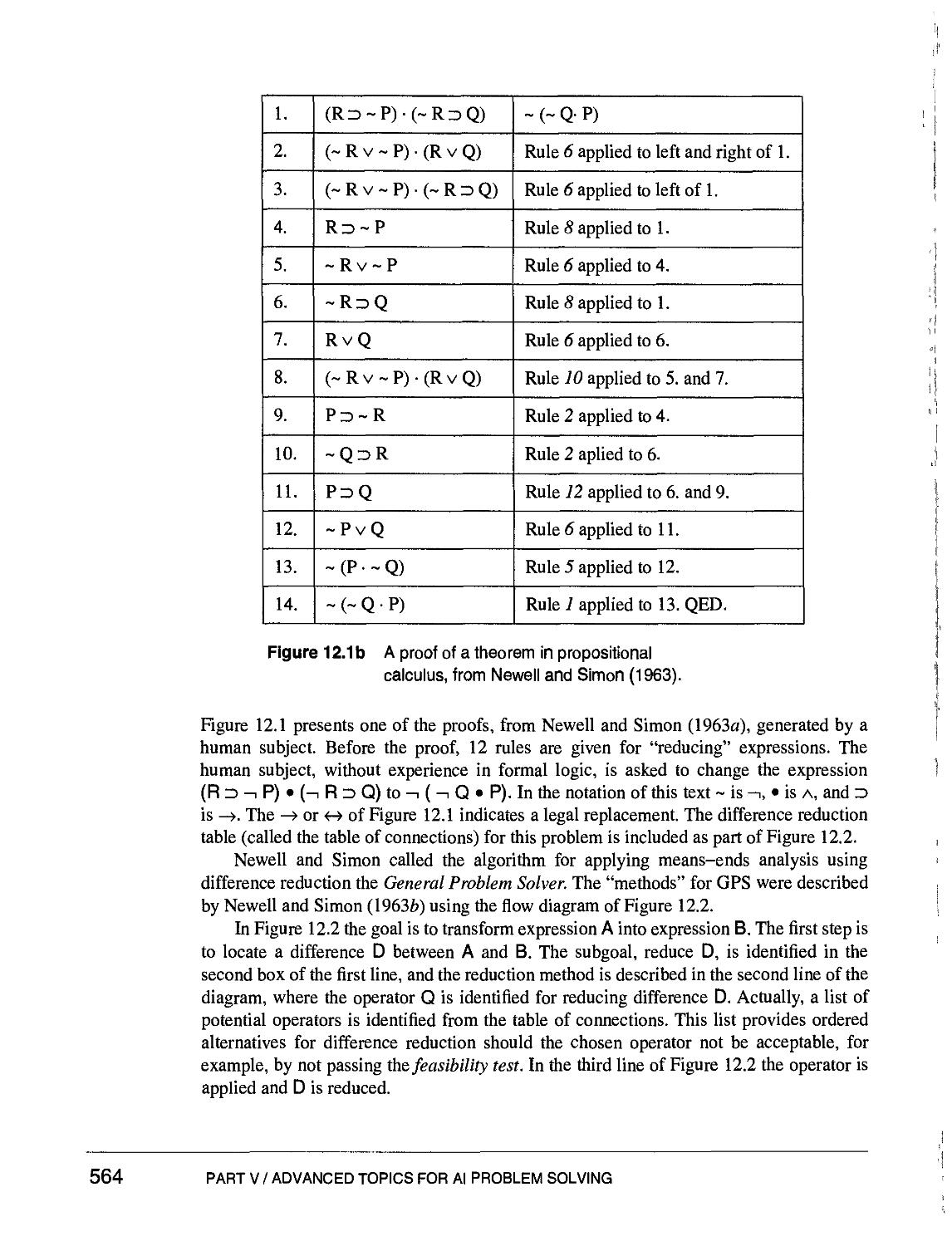

The Turing test measures the performance of an allegedly intelligent machine against